Authors:

(1) Yinwei Dai, Princeton University (Equal contributions);

(2) Rui Pan, Princeton University (Equal contributions);

(3) Anand Iyer, Georgia Institute of Technology;

(4) Ravi Netravali, Georgia Institute of Technology.

Table of Links

2 Background and Motivation and 2.1 Model Serving Platforms

3.1 Preparing Models with Early Exits

3.2 Accuracy-Aware Threshold Tuning

3.3 Latency-Focused Ramp Adjustments

5 Evaluation and 5.1 Methodology

5.3 Comparison with Existing EE Strategies

7 Conclusion, References, Appendix

Abstract

Machine learning (ML) inference platforms are tasked with balancing two competing goals: ensuring high throughput given many requests, and delivering low-latency responses to support interactive applications. Unfortunately, existing platform knobs (e.g., batch sizes) fail to ease this fundamental tension, and instead only enable users to harshly trade off one property for the other. This paper explores an alternate strategy to taming throughput-latency tradeoffs by changing the granularity at which inference is performed. We present Apparate, a system that automatically applies and manages early exits (EEs) in ML models, whereby certain inputs can exit with results at intermediate layers. To cope with the time-varying overhead and accuracy challenges that EEs bring, Apparate repurposes exits to provide continual feedback that powers several novel runtime monitoring and adaptation strategies. Apparate lowers median response latencies by 40.5-91.5% and 10.0-24.2% for diverse CV and NLP workloads, respectively, without affecting throughputs or violating tight accuracy constraints.

1 INTRODUCTION

Machine Learning (ML) inference has become a staple for request handling in interactive applications such as traffic analytics, chatbots, and web services [24, 26, 33, 40, 55]. To manage these ever-popular workloads, applications typically employ serving platforms [4, 5, 17, 22, 39, 44] that ingest requests and schedule inference tasks with pre-trained models across large clusters of compute resources (typically GPUs). The overarching goals of serving platforms are to deliver sufficiently high throughput to cope with large request volumes – upwards of trillions of requests per day [37] – while respecting the service level objectives (SLOs) that applications specify for response times (often 10s to 100s of ms).

Unfortunately, in balancing these goals, serving platforms face a challenging tradeoff (§2.1): requests must be batched for high resource efficiency (and thus throughput), but larger batch sizes inflate queuing delays (and thus per-request latencies). Existing platforms navigate this latency-throughput tension by factoring only tail latencies into batching decisions and selecting maximum batch sizes that avoid SLO violations. Yet, this trivializes the latency sensitivity of many interactive applications whose metrics of interest (e.g., user retention [20, 54], safety in autonomous vehicles [52]) are also influenced by how far below SLOs their response times fall.

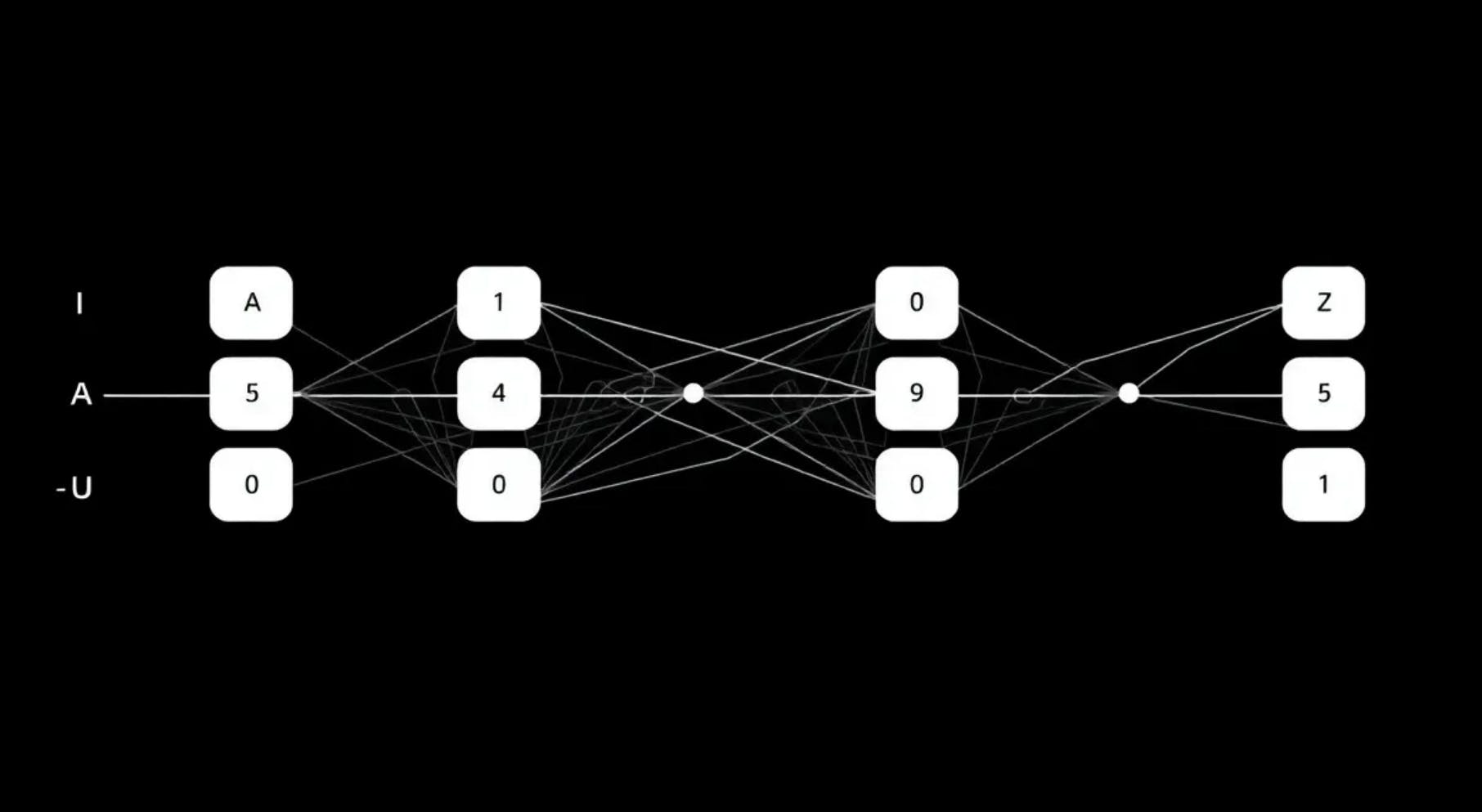

This paper explores the role that early exits (EEs) – an adaptation mechanism that has garnered substantial ML research interest in recent years [28, 36, 53, 56–58, 64] – can play in resolving this tension for existing serving platforms. With EEs, intermediate model layers are augmented with ramps of computation that aim to predict final model responses. Ramp predictions with sufficiently high confidence (subject to a threshold) exit the model, forgoing downstream layers and bringing corresponding savings in both compute and latency. The intuition is that models are often overparameterized (especially given recent model growth [31, 32, 48]), and certain ‘easy’ inputs may not require complete model processing for accurate results. Importantly, unlike existing platform knobs (e.g., batch size) that simply walk the steep latency-throughput tradeoff curve, EEs rethink the granularity of inference on a per-input basis. This, in turn, provides a path towards lowering request latencies without harming platform throughputs. Indeed, across different CV and NLP workloads, we find that optimal use of EEs brings 24.8-94.0% improvement in median latencies for the same accuracy and throughput.
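To make the exit mechanism concrete, the following is a minimal sketch of per-input early exiting on a toy "model" of scalar layers. All names here (`run_with_early_exits`, `toy_ramp`, `sign_label`) are hypothetical and are not part of Apparate or any serving platform. The sketch also assumes that each ramp reports an uncertainty score and that an input exits when that score falls below the ramp's threshold, so that a higher threshold lets more inputs exit.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical toy types (not Apparate's API): a layer transforms a feature
# value, and a ramp maps features to (predicted label, uncertainty in [0, 1]).
Layer = Callable[[float], float]
Ramp = Callable[[float], Tuple[str, float]]


def run_with_early_exits(
    x: float,
    layers: List[Layer],
    ramps: Dict[int, Ramp],
    thresholds: Dict[int, float],
    final_head: Callable[[float], str],
) -> Tuple[str, int]:
    """Return (label, number of layers executed) for one input."""
    h = x
    for i, layer in enumerate(layers):
        h = layer(h)
        if i in ramps:
            label, uncertainty = ramps[i](h)
            # Assumption: exit when uncertainty is below the threshold,
            # so a higher threshold lets more inputs exit.
            if uncertainty < thresholds[i]:
                return label, i + 1  # downstream layers are skipped
    return final_head(h), len(layers)


def sign_label(h: float) -> str:
    return "pos" if h > 0 else "neg"


def toy_ramp(h: float) -> Tuple[str, float]:
    # Toy uncertainty: large-magnitude features are treated as "easy".
    return sign_label(h), max(0.0, 1.0 - abs(h) / 10.0)


layers = [lambda h: h * 2.0] * 4   # four identical toy layers
ramps = {1: toy_ramp}              # a single ramp after the second layer
thresholds = {1: 0.1}

easy = run_with_early_exits(3.0, layers, ramps, thresholds, sign_label)
hard = run_with_early_exits(0.5, layers, ramps, thresholds, sign_label)
print(easy, hard)
```

In this toy setup the "easy" input exits after two of the four layers, while the "hard" input runs the full model; the latency saving is therefore decided per input rather than per batch.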

Despite these potential benefits, EEs are plagued with practical challenges that have limited their impact to date (§2.3). The primary issue is that EE proposals have solely come in the context of specific model architectures that impose fixed ramp designs and locations [53, 57]. The lack of guidance for integrating EEs into arbitrary models is limiting, especially given the ever-growing number of model offerings in the marketplace today. Worse, even existing proposals lack any policy for runtime adaptation of EE configurations, i.e., the set of active ramps and their thresholds. Such adaptation is crucial since dynamic workload characteristics govern the efficacy of each ramp in terms of exiting capabilities and added overheads (to non-exiting inputs); accordingly, failure to continually adapt configurations can result in unacceptable accuracy drops of 8.3-23.9% for our workloads. However, devising adaptation policies is difficult: the space of configurations is massive, and it is unclear how to obtain a signal for accuracy monitoring once an input exits.

We present Apparate, the first system that automatically injects and manages EEs for serving with a wide range of models. Our main insight is that the above challenges are not fundamental to EEs, and instead are a byproduct of what we are trying to get out of them. Specifically, adaptation challenges largely stem from halting execution for an input upon an exit, which leaves uncertainty in the ‘correct’ response (as per the non-EE model). Instead, Apparate uses EEs only to deliver latency reductions; results for successful exits are immediately released, but all inputs continue to the end of the model. The key is in leveraging the (now) redundant computations to enable continual and efficient adaptation.
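The idea of releasing an exit's result while still running the input to completion can be sketched as follows. This is an illustrative toy under stated assumptions, not Apparate's implementation: `serve_with_feedback` and the toy layers and ramp are hypothetical names, ramps are assumed to report an uncertainty score, and an input is assumed to exit when that score is below the ramp's threshold.

```python
from typing import Callable, Dict, List, Optional, Tuple

Layer = Callable[[float], float]
Ramp = Callable[[float], Tuple[str, float]]


def serve_with_feedback(
    x: float,
    layers: List[Layer],
    ramps: Dict[int, Ramp],
    thresholds: Dict[int, float],
    final_head: Callable[[float], str],
) -> Tuple[str, int, Optional[bool]]:
    """Return (released label, release depth, agreement with final output).

    The third element is None when the input did not exit early.
    """
    h = x
    released: Optional[Tuple[str, int]] = None
    for i, layer in enumerate(layers):
        h = layer(h)
        if released is None and i in ramps:
            label, uncertainty = ramps[i](h)
            if uncertainty < thresholds[i]:
                # The response would be sent to the client at this point...
                released = (label, i + 1)
        # ...but execution continues through the remaining layers.
    final_label = final_head(h)  # original model's output, always computed
    if released is None:
        return final_label, len(layers), None
    # The redundant computation yields a per-exit correctness signal.
    return released[0], released[1], released[0] == final_label


def sign_label(h: float) -> str:
    return "pos" if h > 0 else "neg"


def toy_ramp(h: float) -> Tuple[str, float]:
    return sign_label(h), max(0.0, 1.0 - abs(h) / 2.0)


layers = [lambda h: h + 1.0, lambda h: h - 3.0]
ramps = {0: toy_ramp}
thresholds = {0: 0.2}

for x in (4.0, 1.5, -0.5):
    print(x, serve_with_feedback(x, layers, ramps, thresholds, sign_label))
```

The boolean in the returned tuple is the feedback signal: it records, for each exited input, whether the ramp's released answer matched what the unmodified model produced, which is exactly the information that is lost when execution halts at the exit.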

Guided by this philosophy, Apparate runs directly atop existing serving platforms and begins by automatically converting registered models into EE variants. Apparate’s EE preparation strategy must support fine-grained runtime adaptation without burdening those time-sensitive algorithms with (likely) unfruitful options. To do so without developer effort, Apparate leans on guidance from the original model design, crafting ramp locations and architectures based on downstream model computations and data flow for intermediates around the model. Original model layers (and weights) are unchanged, and added ramps are trained in parallel (for efficiency), but in a manner that preserves their independence from other ramps.

Once deployed by serving platforms, Apparate continually monitors EE operation in GPUs, tracking computations and latency effects of each ramp, as well as outputs of the original model (for accuracy ground truth). To tackle the massive space of configuration options, Apparate judiciously decouples tunable EE knobs: thresholds for existing ramps are frequently and quickly tuned to ensure consistently high accuracy, while costlier changes to the set of active ramps occur only periodically as a means for latency optimization. For both control loops, Apparate leverages several fundamental properties of EEs to accelerate the tuning process. For instance, the monotonic nature of accuracy drops (and increases in latency savings) for higher thresholds motivates Apparate’s greedy algorithm for threshold tuning, which runs up to 3 orders of magnitude faster than grid search while sacrificing only 0-3.8% of the potential wins.
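The sketch below illustrates how monotonicity can support a greedy search over thresholds. It is a generic hill-climbing illustration, not the algorithm Apparate uses (which is described later in the paper). The record format, the `savings` values, and the function names are all assumptions made for this example: each record holds, per ramp, an uncertainty score and whether the ramp's label agreed with the original model's output, and an input exits at the first ramp whose uncertainty is below that ramp's threshold.

```python
from typing import List, Tuple

# Hypothetical record: per ramp (in model order), (uncertainty, agrees_with_final).
Record = List[Tuple[float, bool]]


def evaluate(records: List[Record], thresholds: List[float],
             savings: List[float]) -> Tuple[float, float]:
    """Return (accuracy vs. original model, mean fraction of latency saved)."""
    correct, saved = 0, 0.0
    for rec in records:
        for r, (uncertainty, agrees) in enumerate(rec):
            if uncertainty < thresholds[r]:   # first ramp that fires wins
                correct += int(agrees)
                saved += savings[r]
                break
        else:
            correct += 1  # non-exiting inputs match the original model
    return correct / len(records), saved / len(records)


def greedy_tune(records: List[Record], savings: List[float],
                min_accuracy: float, step: float = 0.1
                ) -> Tuple[List[float], float]:
    """Raise one threshold per round, keeping the accuracy constraint."""
    thresholds = [0.0] * len(savings)  # start with no exits at all
    best_saved = 0.0
    while True:
        best = None
        for r in range(len(thresholds)):
            cand = list(thresholds)
            cand[r] = min(1.0, round(cand[r] + step, 10))
            if cand == thresholds:
                continue  # this ramp's threshold is already at its cap
            acc, saved = evaluate(records, cand, savings)
            if acc >= min_accuracy and saved > best_saved:
                best, best_saved = cand, saved
        if best is None:  # no single step helps; note this also stops on plateaus
            return thresholds, best_saved
        thresholds = best


records = [
    [(0.05, True), (0.05, True)],
    [(0.15, True), (0.05, True)],
    [(0.25, False), (0.15, True)],
    [(0.90, False), (0.25, True)],
]
savings = [0.75, 0.5]  # assumed fraction of latency skipped at each ramp

tuned, saved = greedy_tune(records, savings, min_accuracy=1.0)
print(tuned, saved)
```

With `R` ramps and `k` candidate values per threshold, an exhaustive grid search evaluates `k**R` configurations, whereas this greedy loop evaluates at most `R` configurations per round. The price is that a simple hill climb like this one can stop early on plateaus or miss better configurations.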

We evaluated Apparate across a variety of recent CV and NLP models (ranging from compressed to large language models), multiple workloads in each domain, and several serving platforms (TensorFlow-Serving [39], Clockwork [22]). Compared to serving without EEs, Apparate improves 25th percentile and median latencies by 70.2-94.2% and 40.5-91.5% for CV, and 16.0-37.3% and 10.0-24.2% for NLP, while imposing negligible impact on platform throughput. Importantly, unlike existing EE proposals that yield accuracy dips of 8.3-23.9%, we find that Apparate’s adaptation strategies always met user-defined accuracy constraints.

This paper is available on arXiv under the CC BY-NC-ND 4.0 DEED license.