What Developers Ask ChatGPT When Writing Code

13 Nov 2025

Developers are using ChatGPT to code, debug, and review collaboratively. Here’s what GitHub conversations reveal about AI-assisted teamwork.

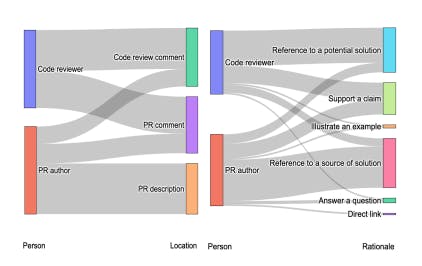

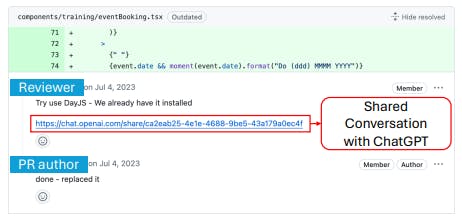

How Developers Use ChatGPT in GitHub Pull Requests and Issues

13 Nov 2025

How developers use ChatGPT in GitHub issues and pull requests—and what their shared conversations reveal about AI-assisted coding.

Foundation Models Are Reshaping How Developers Code Together

13 Nov 2025

Developers are sharing ChatGPT chats in open-source projects. Here’s what those shared chats reveal about AI-powered collaboration and benchmark design.

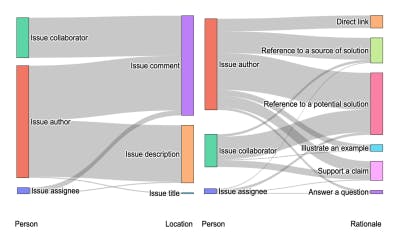

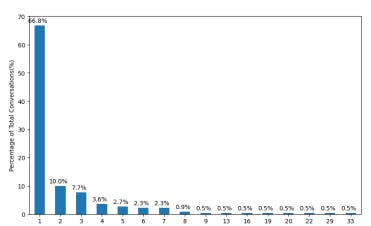

Mapping Why and How Developers Share AI-Generated Conversations on GitHub

13 Nov 2025

Developers are sharing ChatGPT chats on GitHub. This study reveals why, where, and how they do it, and what it means for open-source collaboration.

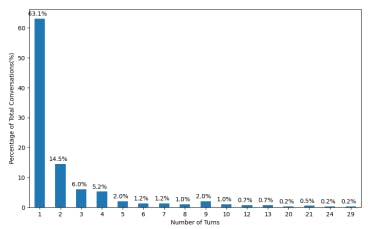

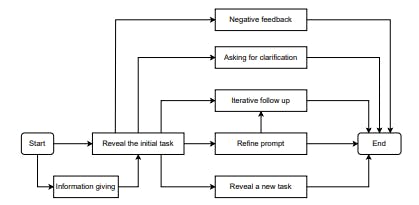

Analyzing the Flow of Developer Prompts in ChatGPT Conversations

12 Nov 2025

How developers interact with ChatGPT across multiple turns—analyzing prompts, feedback, and flow patterns from 645 developer conversations.

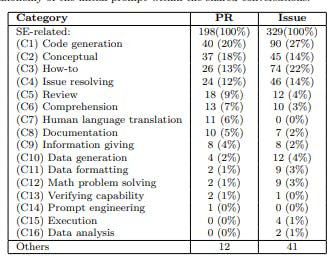

What Do Developers Ask ChatGPT the Most?

12 Nov 2025

Discover what 580 GitHub conversations reveal about how developers use ChatGPT, from code generation to debugging and documentation.

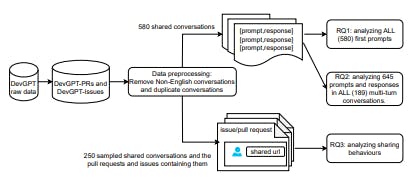

Building the DevGPT Dataset for Developer–ChatGPT Studies

12 Nov 2025

How researchers collected and cleaned 17K developer–ChatGPT conversations from GitHub to explore AI’s role in software development.

Lessons on Developer–AI Collaboration From 580 GitHub Conversations

12 Nov 2025

Developers are using ChatGPT to code, debug, and collaborate. A new study reveals how shared AI chats are reshaping teamwork on GitHub.

Comparing Efficiency Strategies for LLM Deployment and Summarizing PowerInfer‑2’s Impact

3 Nov 2025

This article situates PowerInfer‑2 among other frameworks that improve LLM efficiency through compression, pruning, and speculative decoding.