Table of Links

2 Background and 2.1 Transformer-Based Large Language Models

2.2 LLM Service & Autoregressive Generation

2.3 Batching Techniques for LLMs

3 Memory Challenges in LLM Serving

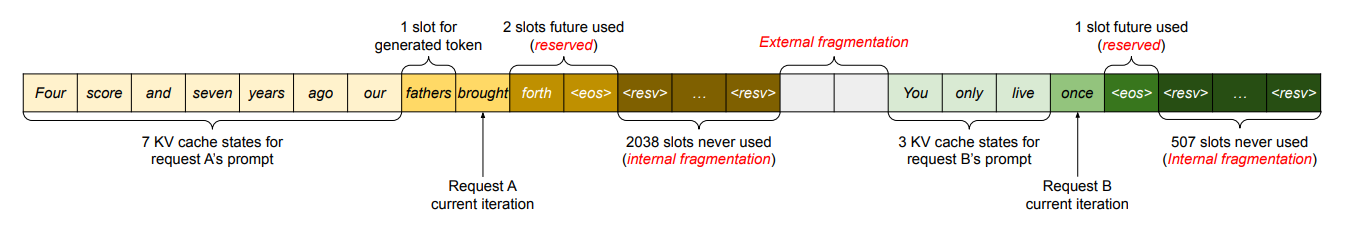

3.1 Memory Management in Existing Systems

4 Method and 4.1 PagedAttention

4.3 Decoding with PagedAttention and vLLM

4.4 Application to Other Decoding Scenarios

6 Evaluation and 6.1 Experimental Setup

6.3 Parallel Sampling and Beam Search

10 Conclusion, Acknowledgement and References

2.3 Batching Techniques for LLMs

The compute utilization in serving LLMs can be improved by batching multiple requests. Because the requests share the same model weights, the overhead of moving weights is amortized across the requests in a batch, and can be overwhelmed by the computational overhead when the batch size is sufficiently large. However, batching the requests to an LLM service is non-trivial for two reasons.

First, the requests may arrive at different times. A naive batching strategy would either make earlier requests wait for later ones or delay the incoming requests until earlier ones finish, leading to significant queueing delays. Second, the requests may have vastly different input and output lengths (Fig. 11). A straightforward batching technique would pad the inputs and outputs of the requests to equalize their lengths, wasting GPU computation and memory.

To address this problem, fine-grained batching mechanisms, such as cellular batching [16] and iteration-level scheduling [60], have been proposed. Unlike traditional methods that work at the request level, these techniques operate at the iteration level. After each iteration, completed requests are removed from the batch, and new ones are added.

Therefore, a new request can be processed after waiting for a single iteration, not waiting for the entire batch to complete. Moreover, with special GPU kernels, these techniques eliminate the need to pad the inputs and outputs. By reducing the queueing delay and the inefficiencies from padding, the fine-grained batching mechanisms significantly increase the throughput of LLM serving.

This paper is available on arxiv under CC BY 4.0 DEED license.

Authors:

(1) Woosuk Kwon, UC Berkeley with Equal contribution;

(2) Zhuohan Li, UC Berkeley with Equal contribution;

(3) Siyuan Zhuang, UC Berkeley;

(4) Ying Sheng, UC Berkeley and Stanford University;

(5) Lianmin Zheng, UC Berkeley;

(6) Cody Hao Yu, Independent Researcher;

(7) Cody Hao Yu, Independent Researcher;

(8) Joseph E. Gonzalez, UC Berkeley;

(9) Hao Zhang, UC San Diego;

(10) Ion Stoica, UC Berkeley.