This paper is available on Arxiv under CC 4.0 license.

Authors:

(1) Seth P. Benson, Carnegie Mellon University (e-mail: spbenson@andrew.cmu.edu);

(2) Iain J. Cruickshank, United States Military Academy (e-mail: iain.cruickshank@westpoint.edu)


V. DISCUSSION

This research yielded several significant results. First, our findings suggest that media bias characterizations can be extracted computationally with minimal human bias assessment. An empirical examination of news programs from three cable news networks, each with a different perceived bias, generally confirmed broadly held assumptions about these programs: the biases they exhibited remained consistent over time and aligned strongly with their respective networks.

However, the key contribution of our work is not merely the affirmation of common media pundit observations but the fact that we reached this conclusion through an automated machine-learning model.

This model required minimal human input and no a priori bias specifications. Although the resulting clusters still require human interpretation, the findings are largely free from subjective human assessments, and operating the model requires no media expertise.
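This section does not spell out the clustering step, so the following is only a minimal sketch under stated assumptions: each program is represented as a vector of its average stance (from -1, against, to +1, in favor) toward a shared list of topics, and programs are grouped with a plain k-means written from scratch. The program names and stance values are hypothetical placeholders rather than data from this study, and k-means is a swapped-in stand-in for whatever clustering method the full paper uses.

```python
import math
import random


def kmeans(vectors, k, iterations=50, seed=0):
    """Plain k-means (illustrative stand-in): returns a cluster index per vector."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, k)]
    labels = [0] * len(vectors)
    for _ in range(iterations):
        # Assign each vector to its nearest centroid by Euclidean distance.
        labels = [
            min(range(k), key=lambda c: math.dist(v, centroids[c])) for v in vectors
        ]
        # Recompute each centroid as the mean of the vectors assigned to it.
        new_centroids = []
        for c in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:
                new_centroids.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # assignments have stabilized
        centroids = new_centroids
    return labels


# Hypothetical mean stance of each program toward three shared topics.
program_stances = {
    "Program A": [0.8, -0.6, 0.5],
    "Program B": [0.7, -0.5, 0.6],
    "Program C": [-0.7, 0.6, -0.4],
    "Program D": [-0.6, 0.7, -0.5],
}
names = list(program_stances)
labels = kmeans([program_stances[n] for n in names], k=2)
for name, label in zip(names, labels):
    print(name, "-> cluster", label)
```

The cluster indices themselves carry no meaning; as the text notes, a human still has to inspect which programs share a cluster and interpret what that grouping represents.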

The objective nature of this model demonstrates its potential to replace current media evaluation methods, which still heavily rely on human interpretation and assumptions. It also provides a tool for exploring realms where biases are not yet known, such as social media user biases. For political science researchers, this paper offers a novel framework for analyzing media bias and a confirmation of many implicit assumptions about biases in cable news.

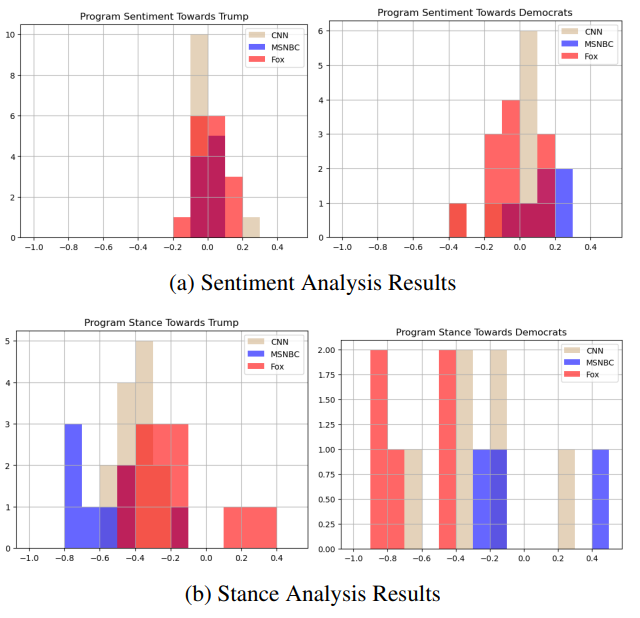

Another significant finding of this research concerns the application of stance analysis methods to cable news transcript text. Our results indicated that stance analysis, using task-based prompting with GPT-4, was far more effective than sentiment analysis at characterizing programs’ attitudes towards topics.
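The exact prompt is not given in this section. As a rough illustration of what task-based stance prompting involves, the sketch below builds a prompt that names a target topic and asks for one of three labels, then maps the model's reply to a numeric score. The prompt wording, the favor/against/none label set, and the `fake_model` function are all hypothetical; in a real pipeline the prompt would be sent to GPT-4 through whatever client the researchers used.

```python
# Hypothetical label set mapped to numeric stance scores.
STANCE_SCORES = {"favor": 1, "against": -1, "none": 0}


def build_stance_prompt(sentence: str, topic: str) -> str:
    """Frame stance detection as an explicit task about one target topic."""
    return (
        "Task: stance detection.\n"
        f"Target: {topic}\n"
        f"Sentence: {sentence}\n"
        "What is the speaker's stance toward the target? "
        "Answer with exactly one word: favor, against, or none."
    )


def parse_stance(reply: str) -> int:
    """Map a free-text reply to +1, -1, or 0; unrecognized replies count as neutral."""
    word = reply.strip().lower().rstrip(".")
    return STANCE_SCORES.get(word, 0)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a GPT-4 call so the sketch runs offline."""
    return "Against." if "disaster" in prompt else "none"


sentence = "This new policy is a disaster for working families."
prompt = build_stance_prompt(sentence, topic="the new policy")
print(parse_stance(fake_model(prompt)))  # -1
```

Naming the target in the prompt is what separates stance from sentiment: a sentence with a negative tone can still favor a topic (for instance, by attacking that topic's critics), and a sentiment score alone cannot capture that distinction.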

Our stance analysis method generated more variance in programs’ views towards topics, and that variance aligned better with real-world expectations of programs’ viewpoints. This method not only improves our understanding of cable news transcripts but also demonstrates a more advanced way to identify writing-style bias in media generally.
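One way to make "more variance in programs’ views" concrete is to average each program's sentence-level scores on a topic and then take the variance of those averages across programs. The sketch below does this for two scoring methods; the helper names and every score in it are invented for illustration and are not results from this study, and the paper's own variance measure may differ.

```python
from statistics import mean, pvariance


def program_means(scores_by_program):
    """Average the sentence-level scores (-1..1) for each program on one topic."""
    return {program: mean(scores) for program, scores in scores_by_program.items()}


def spread_across_programs(scores_by_program):
    """Population variance of the per-program means: higher means programs disagree more."""
    return pvariance(list(program_means(scores_by_program).values()))


# Hypothetical scores for a single topic; NOT data from the paper.
sentiment_scores = {
    "Program A": [-0.2, -0.1, -0.3],
    "Program B": [-0.1, -0.2, -0.2],
}
stance_scores = {
    "Program A": [1, 1, 0],
    "Program B": [-1, -1, 0],
}
print(spread_across_programs(sentiment_scores))
print(spread_across_programs(stance_scores))
```

In this toy case both programs sound mildly negative, so sentiment barely separates them, while stance places them on opposite sides of the topic.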

However, the model presented in this paper does have certain limitations. It treats the most frequently mentioned named entities in the transcripts as key topics, an approach that may overlook certain types of topics, such as policy discussions. Furthermore, while stance detection was a significant improvement over sentiment analysis, the stance labeling of sentences within transcripts was not always accurate. The extent of these inaccuracies remains undetermined because no labeled dataset exists for the cable news transcripts we used.

Nevertheless, certain subsets, such as short sentences lacking key context, appear to have produced a higher rate of incorrect labels. Improving stance detection, both in zero-shot settings like this one and on the spoken-word text that makes up news transcripts, remains a crucial area for future research.
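The topic-selection heuristic described among these limitations can be sketched in a few lines, assuming named entities have already been extracted from each transcript by some upstream named-entity recognizer (the tool is not specified in this section). The function name and the entity lists are hypothetical. The sketch also shows why a policy debate can be missed: if it never surfaces as a named-entity string, it never reaches the counter.

```python
from collections import Counter


def top_topics(entities_per_transcript, n):
    """Return the n named entities mentioned most often across all transcripts."""
    counts = Counter()
    for entities in entities_per_transcript:
        counts.update(entity.strip() for entity in entities)
    return [entity for entity, _ in counts.most_common(n)]


# Hypothetical NER output: one list of entity strings per transcript.
transcripts_entities = [
    ["Congress", "White House", "Supreme Court", "Congress"],
    ["Supreme Court", "NATO", "Congress"],
    ["White House", "Supreme Court", "NATO"],
]
print(top_topics(transcripts_entities, n=2))
```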
