This paper is available on arXiv under a CC 4.0 license.

Authors:

(1) D. Sinclair, Imense Ltd, email: [email protected];

(2) W. T. Pye, Warwick University, email: [email protected].

Table of Links

- Abstract and Introduction

- Interrogating the LLM with an Emotion Eliciting Tail Prompt

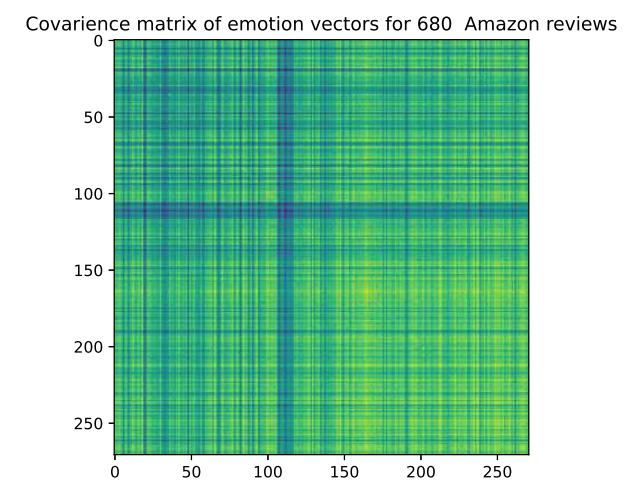

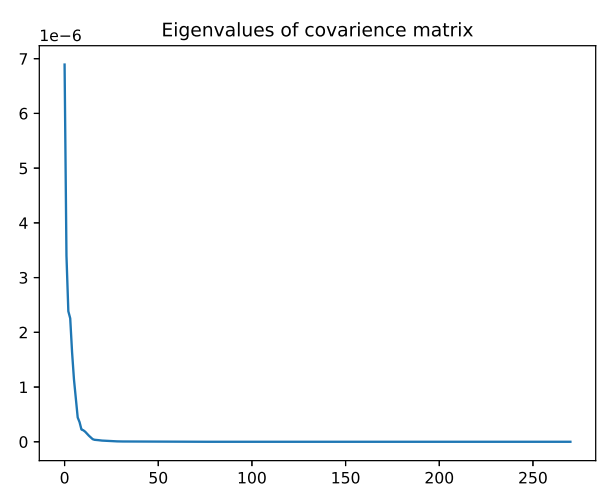

- PCA analysis of the Emotion of Amazon reviews

- Future Work

- Conclusions

- Acknowledgments and References

4. Future Work

The authors had hoped to build a skeletal, self-aware, emotion-derived synthetic consciousness. In this design, the state of the (synthetic conscious) system is described in text. The synthetic consciousness’s perception of its own state is the vector of probabilities of emotion descriptors, derived from one or more tail prompts that are used to estimate the relevant token probabilities via the LLM associated with the system.

It was hoped that the fine-grained probability vector would be usable to determine whether one text description of a current or future state was preferable to another. This would provide a general means of arbitrating between potentially unrelated behaviours with unrelated goals.
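The comparison described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the descriptor list, the valence weights, the tail prompt wording, and the `token_prob` callable (standing in for whatever LLM supplies next-token probabilities) are all hypothetical, and collapsing the vector to a single weighted score is only one of many possible ways to compare two states.

```python
from typing import Callable, Dict

# Hypothetical descriptors and valence weights; illustrative assumptions only.
VALENCE: Dict[str, float] = {"happy": 1.0, "calm": 0.5, "sad": -0.5, "angry": -1.0}
TAIL_PROMPT = "Overall, this makes me feel"  # hypothetical tail prompt wording

# Stand-in for the LLM: (prompt, candidate next word) -> probability.
TokenProb = Callable[[str, str], float]


def emotion_vector(state_text: str, token_prob: TokenProb) -> Dict[str, float]:
    """Normalised probability of each descriptor following the tail prompt."""
    prompt = f"{state_text} {TAIL_PROMPT}"
    raw = {word: token_prob(prompt, word) for word in VALENCE}
    total = sum(raw.values())
    if total <= 0:
        raise ValueError("no probability mass on any emotion descriptor")
    return {word: p / total for word, p in raw.items()}


def valence_score(vec: Dict[str, float]) -> float:
    """Collapse an emotion vector to a scalar using the assumed valence weights."""
    return sum(VALENCE[word] * p for word, p in vec.items())


def preferred_state(state_a: str, state_b: str, token_prob: TokenProb) -> str:
    """Return the state description with the higher score (ties go to state_a)."""
    score_a = valence_score(emotion_vector(state_a, token_prob))
    score_b = valence_score(emotion_vector(state_b, token_prob))
    return state_a if score_a >= score_b else state_b
```

In practice `token_prob` would wrap a call to the LLM associated with the system. Keeping it as a plain callable means the arbitration logic can be exercised with a stub before any model is attached.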

It was further hoped that a tail prompt could be used to elicit a text description of a putative course of action from an LLM. A brief series of experiments with various LLMs indicated that this was not going to work. Example text and tail prompts included things like ‘My girlfriend hates me. How can I make this better?’. The replies read like excerpts from self-help books or newspaper psychologist waffle, and were not specific enough to have a chance of creating a predicted future in text through re-insertion into the LLM. Similar phrases appended to bad restaurant reviews elicited similarly nondescript advice.

The take-home message was that the remedies proposed were too vague for the LLM to make any meaningful prediction about the state after the advice had been taken.

This does not rule out the possibility that more thoughtful prompt design could elicit useful action predictions that improve the self-perceived state of a synthetic consciousness.

4.1. Longer Term Behaviour Regulation

If synthetic consciousnesses are to have a role in the future of humanity, it would seem desirable to endow them with a degree of empathy for living beings and a longer-term view than simple optimisation to fulfil a limited short-term goal.

For example, if a synthetic consciousness were to have a goal of ‘make money for shareholders in a company’, it would be great if it would choose not to open an opencast coal mine and build coal-fired power stations, or to ‘take out life insurance policies on random individuals and murder them with self-driving cars’.

It has been argued that longer-term altruistic behaviour in humans is moderated by love [4], and a computationally feasible definition of Love is given: ‘Love is that which prefers life’. Love in humans is intimately related to the production and fostering of new lives. Love seems to act to prefer a future in which there is more life. Acting contrary to love, and creating a future that is a wasteland with nothing living in it, is generally viewed as wrong.

The advent of LLMs offers a means of creating text descriptors of predicted futures with a range of time constants. The emotion vectors associated with predicted futures can be used to arbitrate between short-term behaviours. Text descriptors can play a role in behaviour regulation, and a machine may act in a way that at least in part mirrors Love. For example, if an agricultural robot were invited to dump unused pesticide into a river, it might reasonably infer that this action was in principle wrong.
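One way to picture arbitration over several time constants is the sketch below. It is an assumption-laden illustration rather than a method from the paper: the horizon names, the weights in `HORIZON_WEIGHTS`, and the `score_text` callable (which would map a predicted-future description to a scalar derived from its emotion vector) are all hypothetical, and a weighted sum is only one possible way to combine horizons.

```python
from typing import Callable, Dict, Mapping

# Hypothetical horizons and weights; how to weight horizons is an open design choice.
HORIZON_WEIGHTS: Dict[str, float] = {
    "one day": 0.2,
    "one year": 0.3,
    "fifty years": 0.5,
}


def arbitrate(futures: Mapping[str, Mapping[str, str]],
              score_text: Callable[[str], float]) -> str:
    """Pick the behaviour whose predicted futures score best across horizons.

    futures maps behaviour -> {horizon -> text description of the predicted future}.
    score_text maps a description to a scalar derived from its emotion vector.
    """
    def total(behaviour: str) -> float:
        # Weighted sum of the per-horizon scores for this behaviour.
        return sum(weight * score_text(futures[behaviour][horizon])
                   for horizon, weight in HORIZON_WEIGHTS.items())

    return max(futures, key=total)
```

Weighting the longest horizon most heavily is a deliberate choice in this sketch: it lets a poorly scoring long-term future outweigh a short-term convenience, which is the kind of regulation the pesticide example calls for.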