This paper is available on arXiv under the CC BY-NC-SA 4.0 DEED license.

Authors:

(1) Ke Hong, Tsinghua University & Infinigence-AI;

(2) Guohao Dai, Shanghai Jiao Tong University & Infinigence-AI;

(3) Jiaming Xu, Shanghai Jiao Tong University & Infinigence-AI;

(4) Qiuli Mao, Tsinghua University & Infinigence-AI;

(5) Xiuhong Li, Peking University;

(6) Jun Liu, Shanghai Jiao Tong University & Infinigence-AI;

(7) Kangdi Chen, Infinigence-AI;

(8) Yuhan Dong, Tsinghua University;

(9) Yu Wang, Tsinghua University.

Table of Links

- Abstract & Introduction

- Backgrounds

- Asynchronized Softmax with Unified Maximum Value

- Flat GEMM Optimization with Double Buffering

- Heuristic Dataflow with Hardware Resource Adaption

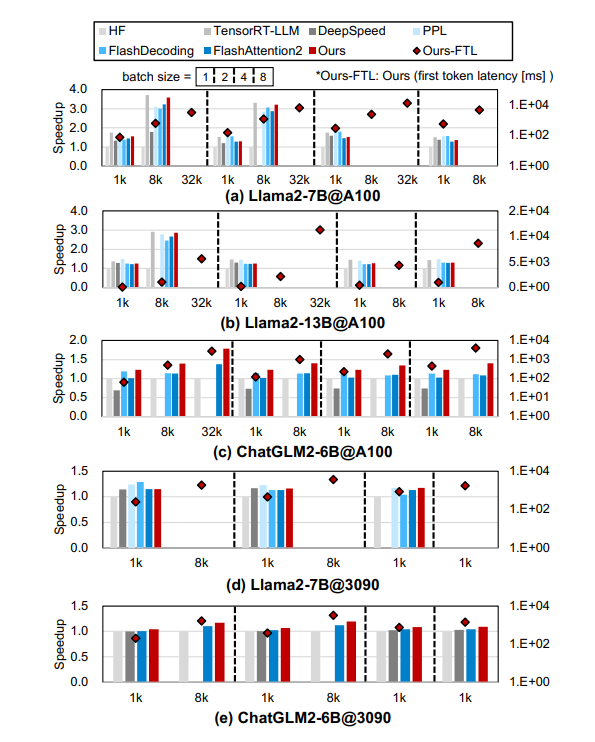

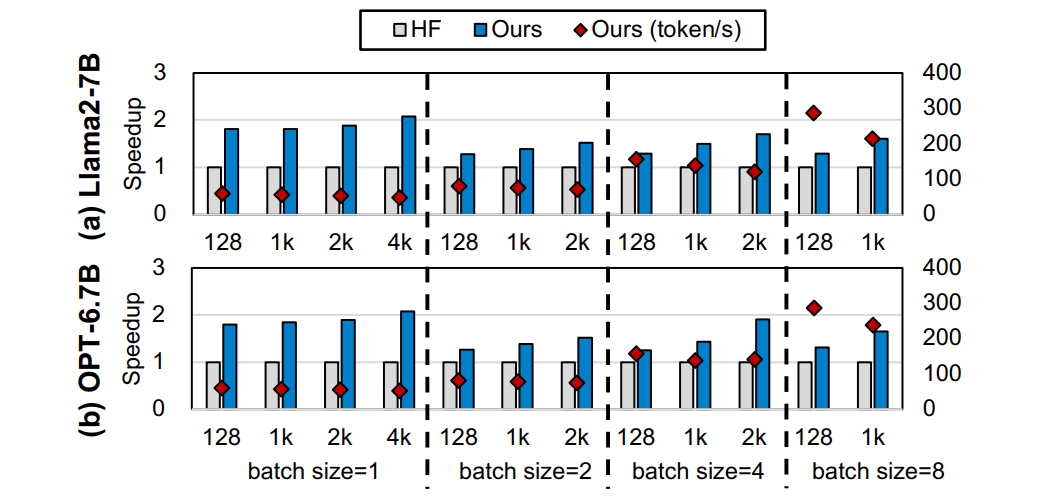

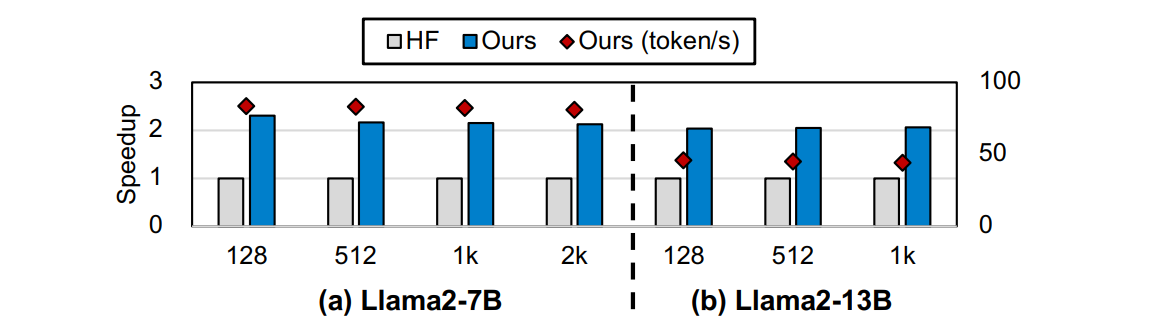

- Evaluation

- Related Works

- Conclusion & References

7 Related Works

Large language model inference acceleration has gained significant attention in recent research, and several notable approaches have emerged. DeepSpeed [9] is a comprehensive engine that optimizes both the training and inference phases for LLMs. It achieves robust inference performance through kernel fusion and efficient GPU memory management, with a particular focus on optimizing memory usage for the KV cache. vLLM [11] improves GPU memory utilization through efficient memory management and the PagedAttention method, which increases the maximum batch size and raises the upper limit of inference performance. FlashAttention [18, 19] optimizes the self-attention computation during the prefill phase through improved parallelism and workload distribution. FlashDecoding [13] extends FlashAttention and increases parallelism by splitting K and V, supporting efficient self-attention computation for long sequences during the decode phase. FasterTransformer [33] and OpenPPL [12] implement large model inference engines in C++ to reduce the kernel scheduling overhead incurred by Python implementations. They also employ memory management techniques and kernel fusion to achieve efficient LLM inference. TensorRT-LLM [14] is built upon TensorRT [38] and the FasterTransformer [33] engine (C++) and incorporates cutting-edge open-source technologies such as FlashAttention [18, 19]. It also improves ease of use by providing a Python API.
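The idea of splitting K and V mentioned above for FlashDecoding can be illustrated with a minimal sketch. The code below is not the FlashDecoding kernel; it is a pure-Python illustration for a single query vector, and the function names (`attention_full`, `attention_chunk`, `attention_split_kv`) and the `chunk_size` parameter are hypothetical. Each chunk of K and V yields a partial result with its own local maximum and exponent sum, and the partial results are then rescaled to a common maximum and merged. On a GPU the chunks would be processed in parallel; here they are processed in a loop.

```python
import math


def _scores(q, keys):
    """Scaled dot-product scores of one query against a list of keys."""
    scale = 1.0 / math.sqrt(len(q))
    return [scale * sum(a * b for a, b in zip(q, k)) for k in keys]


def attention_full(q, keys, values):
    """Reference: softmax(q K^T / sqrt(d)) V computed over the whole sequence."""
    scores = _scores(q, keys)
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(dim)]


def attention_chunk(q, keys, values):
    """Partial attention over one K/V chunk.

    Returns (local max, sum of exponentials, unnormalized weighted sum of V).
    """
    scores = _scores(q, keys)
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    dim = len(values[0])
    acc = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return m, sum(weights), acc


def attention_split_kv(q, keys, values, chunk_size):
    """Split K and V along the sequence, then merge the partial results."""
    partials = [
        attention_chunk(q, keys[i:i + chunk_size], values[i:i + chunk_size])
        for i in range(0, len(keys), chunk_size)
    ]
    global_max = max(m for m, _, _ in partials)
    dim = len(values[0])
    total = 0.0
    out = [0.0] * dim
    for m, exp_sum, acc in partials:
        # Rescale each chunk from its local maximum to the global maximum.
        rescale = math.exp(m - global_max)
        total += rescale * exp_sum
        for j in range(dim):
            out[j] += rescale * acc[j]
    return [x / total for x in out]
```

Under these assumptions, `attention_split_kv(q, keys, values, chunk_size)` should agree with `attention_full(q, keys, values)` up to floating-point rounding for any positive `chunk_size`, since the rescaling step only changes the reference maximum used inside the exponentials.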