Table of Links

2 Background & Problem Statement

2.1 How can we use MLLMs for Diffusion Synthesis that Synergizes both sides?

3.1 End-to-End Interleaved generative Pretraining (I-GPT)

4 Experiments and 4.1 Multimodal Comprehension

4.2 Text-Conditional Image Synthesis

4.3 Multimodal Joint Creation & Comprehension

5 Discussions

5.1 Synergy between creation & Comprehension?

5. 2 What is learned by DreamLLM?

B Additional Qualitative Examples

E Limitations, Failure Cases & Future Works

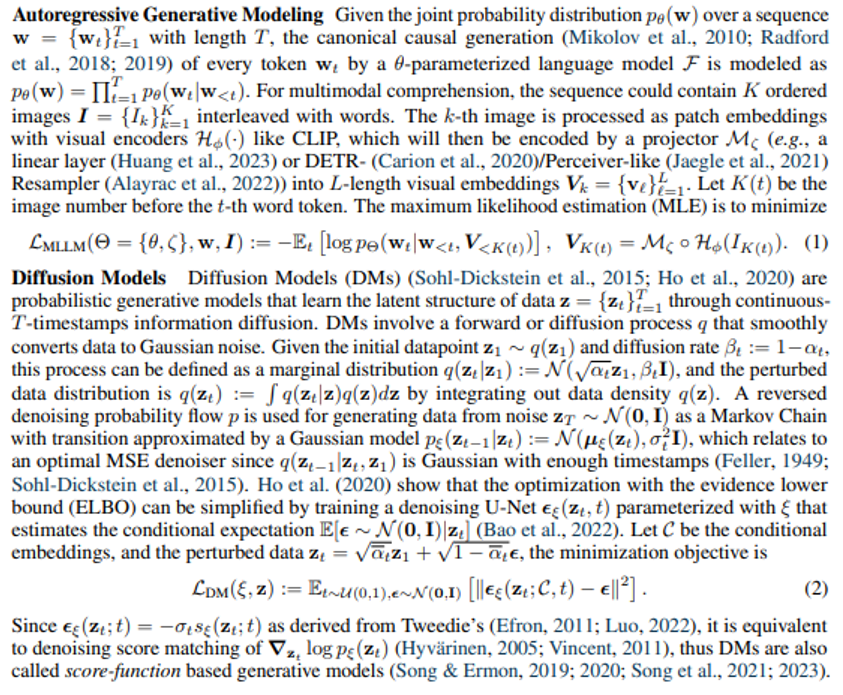

2 BACKGROUND & PROBLEM STATEMENT

This paper is available on arxiv under CC BY-NC-ND 4.0 DEED license.

Authors:

(1) Runpei Dong, Xi’an Jiaotong University and Internship at MEGVII;

(2) Chunrui Han, MEGVII Technology;

(3) Yuang Peng, Tsinghua University and Internship at MEGVII;

(4) Zekun Qi, Xi’an Jiaotong University and Internship at MEGVII;

(5) Zheng Ge, MEGVII Technology;

(6) Jinrong Yang, HUST and Internship at MEGVII;

(7) Liang Zhao, MEGVII Technology;

(8) Jianjian Sun, MEGVII Technology;

(9) Hongyu Zhou, MEGVII Technology;

(10) Haoran Wei, MEGVII Technology;

(11) Xiangwen Kong, MEGVII Technology;

(12) Xiangyu Zhang, MEGVII Technology and a Project leader;

(13) Kaisheng Ma, Tsinghua University and a Corresponding author;

(14) Li Yi, Tsinghua University, a Corresponding authors and Project leader.