Authors:

(1) Rafael Rafailov, Stanford University and Equal contribution; more junior authors listed earlier;

(2) Archit Sharma, Stanford University and Equal contribution; more junior authors listed earlier;

(3) Eric Mitchell, Stanford University and Equal contribution; more junior authors listed earlier;

(4) Stefano Ermon, CZ Biohub;

(5) Christopher D. Manning, Stanford University;

(6) Chelsea Finn, Stanford University.

Table of Links

4 Direct Preference Optimization

7 Discussion, Acknowledgements, and References

A Mathematical Derivations

A.1 Deriving the Optimum of the KL-Constrained Reward Maximization Objective

A.2 Deriving the DPO Objective Under the Bradley-Terry Model

A.3 Deriving the DPO Objective Under the Plackett-Luce Model

A.4 Deriving the Gradient of the DPO Objective and A.5 Proof of Lemma 1 and 2

B DPO Implementation Details and Hyperparameters

C Further Details on the Experimental Set-Up and C.1 IMDb Sentiment Experiment and Baseline Details

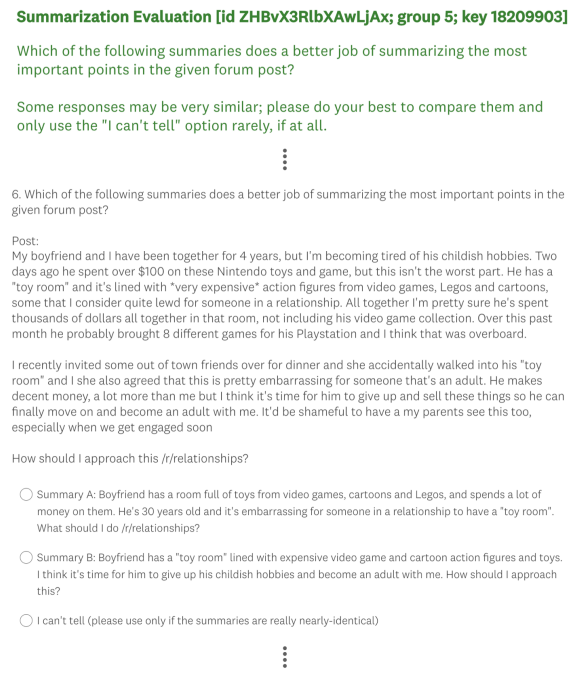

C.2 GPT-4 prompts for computing summarization and dialogue win rates

D Additional Empirical Results

D.1 Performance of Best of N baseline for Various N and D.2 Sample Responses and GPT-4 Judgments

D.3 Human study details

To validate the use of GPT-4 for computing win rates, our human study collects human preference data for several matchups in the TL;DR summarization setting. We select three algorithmic matchups, evaluating DPO (temp. 0.25), SFT (temp. 0.25), and PPO (temp. 1.0) against the reference algorithm PPO (temp. 0). By selecting matchups for three distinct algorithms that also span a wide range of win rates against the reference, we capture the similarity of human and GPT-4 win rates across the response quality spectrum. We sample 150 random comparisons of DPO vs PPO-0 and 100 random comparisons of PPO-1 vs PPO-0, assigning two humans to each comparison, producing 275 judgments for DPO-PPO[7] and 200 judgments for PPO-PPO. We sample 125 SFT comparisons, assigning a single human to each. We ignore judgments that humans labeled as ties (only about 1% of judgments) and measure the raw agreement percentage between human A and human B (for comparisons with two human annotators, i.e., not SFT) as well as between each human and GPT-4.
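As an illustration of the agreement measure described above, the following is a minimal sketch of how raw agreement between two annotators could be computed once tie judgments are dropped. The label strings (`"dpo"`, `"ppo"`, `"tie"`), the function name `raw_agreement`, and the toy data are assumptions made for this example; they are not taken from the study's actual analysis code or data.

```python
from typing import Optional, Sequence

TIE = "tie"  # hypothetical label for a judgment marked as a tie


def raw_agreement(a: Sequence[str], b: Sequence[str]) -> Optional[float]:
    """Fraction of comparisons on which two annotators pick the same summary.

    Comparisons where either annotator reported a tie are dropped first.
    Returns None when no comparisons remain after dropping ties.
    """
    if len(a) != len(b):
        raise ValueError("annotators must label the same comparisons")
    kept = [(x, y) for x, y in zip(a, b) if x != TIE and y != TIE]
    if not kept:
        return None
    return sum(x == y for x, y in kept) / len(kept)


# Toy labels, made up for illustration (not data from the study).
human_a = ["dpo", "ppo", "dpo", "tie", "dpo"]
human_b = ["dpo", "dpo", "dpo", "ppo", "dpo"]
gpt4 = ["dpo", "ppo", "dpo", "dpo", "ppo"]

print("human A vs human B:", raw_agreement(human_a, human_b))
print("human A vs GPT-4:  ", raw_agreement(human_a, gpt4))
print("human B vs GPT-4:  ", raw_agreement(human_b, gpt4))
```

The same function covers both the human-human and the human-GPT-4 comparisons; for the SFT matchup, where each comparison has a single human annotator, only the human-GPT-4 call would apply.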

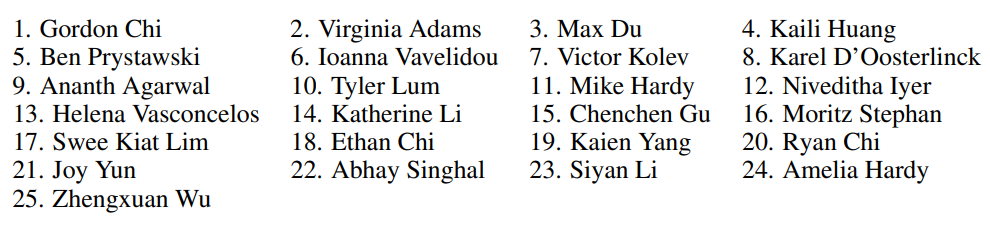

Participants. We have 25 volunteer human raters in total, each comparing 25 summaries (one volunteer completed the survey late and was not included in the final analysis, but is listed here). The raters were Stanford students (from undergrad through Ph.D.), or recent Stanford graduates or visitors, with a STEM (mainly CS) focus. See Figure 5 for a screenshot of the survey interface. We gratefully acknowledge the contribution of each of our volunteers, listed in random order:

This paper is available on arXiv under the CC BY-NC-ND 4.0 DEED license.

[7] One volunteer did not respond for the DPO-PPO comparison.