Authors:

(1) Prerak Gandhi, Department of Computer Science and Engineering, Indian Institute of Technology Bombay, Mumbai, prerakgandhi@cse.iitb.ac.in, and these authors contributed equally to this work;

(2) Vishal Pramanik, Department of Computer Science and Engineering, Indian Institute of Technology Bombay, Mumbai, vishalpramanik,pb@cse.iitb.ac.in, and these authors contributed equally to this work;

(3) Pushpak Bhattacharyya, Department of Computer Science and Engineering, Indian Institute of Technology Bombay, Mumbai.

Table of Links

- Abstract and Intro

- Motivation

- Related Work

- Dataset

- Experiments and Evaluation

- Results and Analysis

- Conclusion and Future Work

- Limitations and References

- A. Appendix

A. Appendix

A.1. Ethics Consideration

We have taken all the scripts from IMDB and IMSDb databases. The website has a disclaimer regarding using its scripts for research, which can be found at this link https://imsdb.com/ disclaimer.html. We have used the scripts fairly and without copyright violation.

A.2. Annotator Profiles

We required the help of external annotators in two cases: (i) Manually Annotating the Scripts and (ii) Creating scenes and their descriptions from the scripts. For the first task, we took the help of 10 annotators. Their ages ranged from 21-28, and all were Asian. They were given detailed guidelines with examples for annotating. There were also periodic sessions to confirm their understanding and solve their doubts and mistakes. For the second task, we took the help of two annotators. Both of them are Asian females aged between 21-23. Both of them were given detailed guidelines for the scene-writing task. A few data points were picked randomly and checked to find out and correct conceptual mistakes. The annotators had bachelors and masters degree in STEM and Arts.

A.3. Evaluation Metrics

The evaluation metrics are described below:

• Perplexity (PPL): Perplexity is one of the most common metrics for evaluating language models. They are computed as exponential of entropy. The smaller the value of the PPL, the greater the fluency of the generated text.

• BLEU: BiLingual Evaluation Understudy is a common metric in many NLP tasks, especially in the field of Machine Translation. It measures the overlap between the generated output and gold standard data. Although this metric does not consider the model’s creativity, we can deduce the difference between the candidate text and the reference text using BLEU. The higher the BLEU measure, the better it is.

• ROUGE: Recall-Oriented Understudy for Gisting Evaluation is typically used for evaluating automatic summarization. In our case, it measures the longest overlapping sequence between the generated and original plots. The higher the ROUGE measure, the better it is.

• N-grams: We measure the redundancy and diversity of the movie plots by computing the repetition and distinction n-gram scores.

A.4. Screenplay Structure

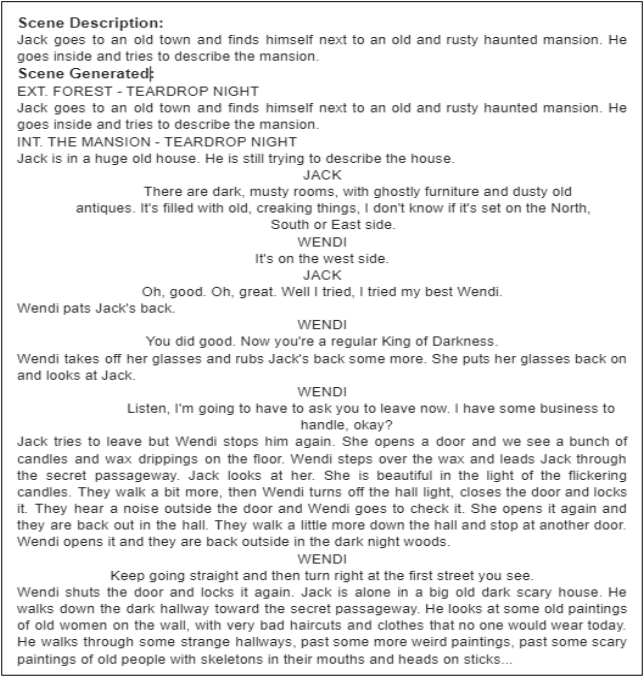

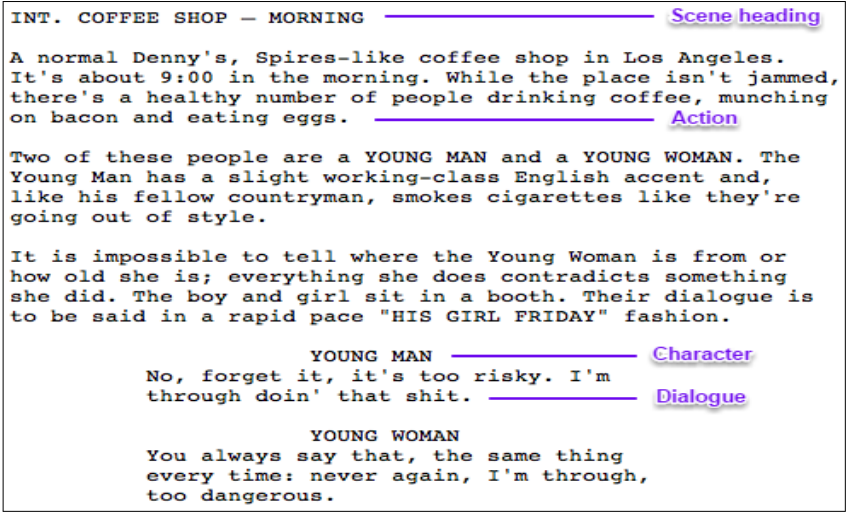

A movie script or a screenplay has a different format than a story. A script is a group of scenes. Each of these scenes consists of a few major components, which are discussed below:

Scene Headings/Sluglines- This component describes the when and where of the scene. It can be thought of as the first shot that a camera takes of a new scene. For example, INT. - RESTAURANT - NIGHT indicates that the scene starts inside a restaurant at night. Sluglines are normally written in capital letters and are left-aligned.

Character Names- they are mentioned every time a character is going to utter a dialogue. The name of each character is mentioned in uppercase and is centre aligned.

Dialogues- dialogues are the lines that the characters say. They appear right after the character name in a script and are centrally aligned.

Action Lines- action lines describe almost everything about a scene. They can be described as the narration of each script. Action lines can be present after either dialogues or sluglines and are left-aligned.

Transitions- a transition marks the change from one scene to the next. They also depict how a scene is ended. For example, DISSOLVE, FADE, and CUT are different keywords used to indicate a transition. They are usually in upper case and are right-aligned.

Figure 8 shows an example of the screenplay elements.

A.5. Story Templates

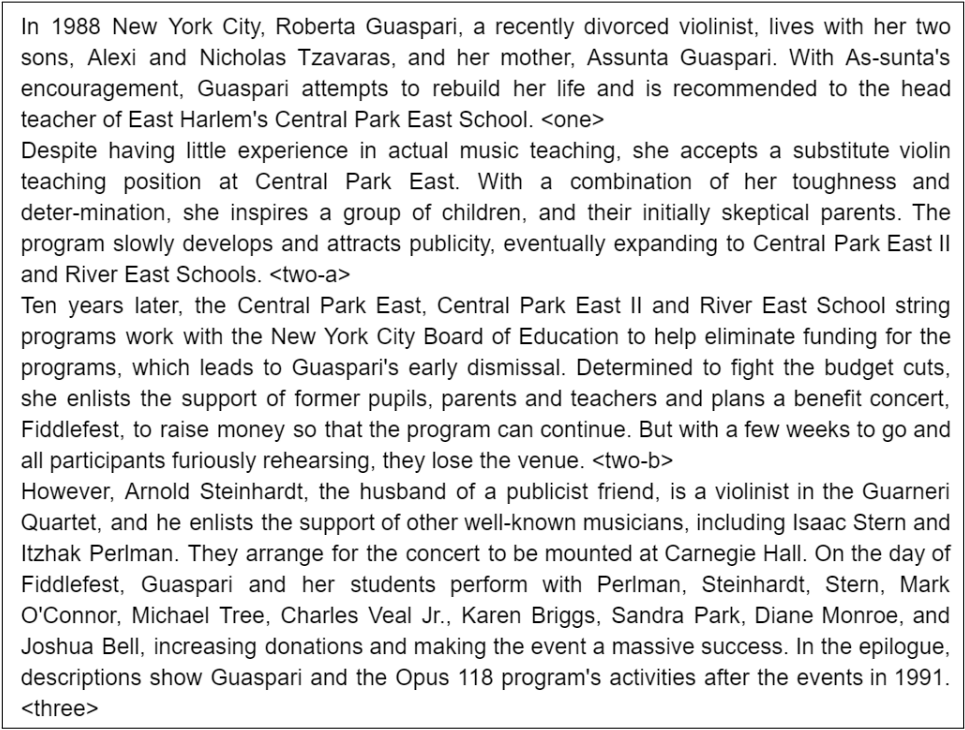

Over time various templates have been developed that help to create stories. One of the most famous templates is the 3-act structure (Field, 1979). This structure divides a story into a setup, confrontation, and resolution. In this work, we have used the 4-act structure which we now describe in detail.

Act 1- This is the opening/introduction act. It describes the protagonist’s character and briefly introduces the movie’s theme. The act ends with the start of a new journey for the protagonist.

Act 2A- Due to the vast span of Act 2, it can be divided into two acts. This act usually contains the start of a love story. It also entertains the audience as the protagonist tries to adapt to their new journey. The act ends as the movie’s midpoint, one of the film’s critical moments, with either a very positive or negative scene.

Act 2B- This act usually contains the protagonist’s downfall. The villain or antagonist starts to gain an advantage, and the protagonist loses something or someone significant. The act ends with the protagonist realizing their new mission after reaching rock bottom.

Act 3— The protagonist has realized the change required in them and sets out to defeat the antagonist in a thrilling finale. The movie then ends by displaying a welcome change in the protagonist that was lacking in the beginning.

A.6. Fine-Tuning GPT-3

GPT-3 was deemed publicly available last year by OpenAI (Brown et al., 2020). Its best model has 175B parameters, which is much more than GPT2’s 2.9B parameters. We have fine-tuned multiple plot generation models with GPT-3 along with a scene generation model. The multiple combinations of plot generation models are short or long prompts and with or without genres. The GPT-3 model and hyperparameters remain the same for all the above combinations. We have fine-tuned the GPT-3 Curie model for four epochs. For generating text, GPT-3 offers various hyperparameters to tune and get closer to our desired results. For testing, we set other hyperparameters as follows: the temperature as 0.7, top-p as 1, frequency penalty as 0.1, presence penalty as 0.1, and max tokens as 900.

This paper is available on arxiv under CC 4.0 DEED license.