Author:

(1) Mingda Chen.

Table of Links

- 3.1 Improving Language Representation Learning via Sentence Ordering Prediction
- 3.2 Improving In-Context Few-Shot Learning via Self-Supervised Training
- 4.2 Learning Discourse-Aware Sentence Representations from Document Structures
- 5 DISENTANGLING LATENT REPRESENTATIONS FOR INTERPRETABILITY AND CONTROLLABILITY
- 5.1 Disentangling Semantics and Syntax in Sentence Representations
- 5.2 Controllable Paraphrase Generation with a Syntactic Exemplar

4.3 Learning Concept Hierarchies from Document Categories

4.3.1 Introduction

Natural language inference (NLI) is the task of classifying the relationship, such as entailment or contradiction, between sentences. It has been found useful in downstream tasks, such as summarization (Mehdad et al., 2013) and long-form text generation (Holtzman et al., 2018). NLI involves rich natural language understanding capabilities, many of which relate to world knowledge. To acquire such knowledge, researchers have found benefit from external knowledge bases like WordNet (Fellbaum, 1998), FrameNet (Baker, 2014), Wikidata (Vrandečić and Krötzsch, 2014), and large-scale human-annotated datasets (Bowman et al., 2015; Williams et al., 2018; Nie et al., 2020). Creating these resources generally requires expensive human annotation. In this work, we are interested in automatically generating a large-scale dataset from Wikipedia categories that can improve performance on both NLI and lexical entailment (LE) tasks.

One key component of NLI tasks is recognizing lexical and phrasal hypernym relationships. For example, vehicle is a hypernym of car. In this paper, we take advantage of the naturally-annotated Wikipedia category graph, where we observe that most of the parent-child category pairs are entailment relationships, i.e., a child category entails a parent category. Compared to WordNet and Wikidata, the Wikipedia category graph has more fine-grained connections, which could be helpful for training models. Inspired by this observation, we construct WIKINLI, a dataset for training NLI models that is built automatically by filtering the Wikipedia category graph. The dataset has 428,899 pairs of phrases and contains three categories that correspond to the entailment and neutral relationships in NLI datasets.

To empirically demonstrate the usefulness of WIKINLI, we pretrain BERT and RoBERTa on WIKINLI, WordNet, and Wikidata, before finetuning on various LE and NLI tasks. Our experimental results show that WIKINLI gives the best performance averaging over 8 tasks for both BERT and RoBERTa.

We perform an in-depth analysis of approaches to handling the Wikipedia category graph and of the effects of pretraining with WIKINLI and other data sources under different configurations. We find that (1) WIKINLI brings consistent improvements in a low-resource NLI setting with limited training data, and the improvements plateau as the number of training instances increases; (2) more WIKINLI instances for pretraining are beneficial for downstream finetuning tasks, with pretraining on a fourway variant of WIKINLI showing more significant gains for the task requiring higher-level conceptual knowledge; and (3) WIKINLI introduces additional knowledge related to lexical relations, benefiting finer-grained LE and NLI tasks.

We also construct WIKINLI in other languages and benchmark several resources on XNLI (Conneau et al., 2018b), showing that WIKINLI benefits performance on NLI tasks in the corresponding languages.

4.3.2 Related Work

We build on a rich body of literature on leveraging specialized resources (such as knowledge bases) to enhance model performance. These works either (1) pretrain the model on datasets extracted from such resources, or (2) use the resources directly by changing the model itself.

The first approach aims to improve performance at test time by designing useful signals for pretraining, for instance using hyperlinks (Logeswaran et al., 2019; Chen et al., 2019a) or document structure in Wikipedia (Chen et al., 2019b), knowledge bases (Logan et al., 2019), and discourse markers (Nie et al., 2019). Here, we focus on using category hierarchies in Wikipedia. There are some previous works that also use category relations derived from knowledge bases (Shwartz et al., 2016; Riedel et al., 2013), but they are used in a particular form of distant supervision in which they are matched with an additional corpus to create noisy labels. In contrast, we use the category relations directly without requiring such additional steps. Onoe and Durrett (2020) use the direct parent categories of hyperlinks for training entity linking systems.

Within this first approach, there have been many efforts aimed at harvesting inference rules from raw text (Lin and Pantel, 2001; Szpektor et al., 2004; Bhagat et al., 2007; Szpektor and Dagan, 2008; Yates and Etzioni, 2009; Bansal et al., 2014; Berant et al., 2015; Hosseini et al., 2018). Since WIKINLI uses category pairs in which one is a hyponym of the other, it is more closely related to work in extracting hyponym-hypernym pairs from text (Hearst, 1992; Snow et al., 2005, 2006; Pasca and Durme, 2007; McNamee et al., 2008; Le et al., 2019). Pavlick et al. (2015) automatically generate a large-scale phrase pair dataset with several relationships by training classifiers on a relatively small amount of human-annotated data. However, most of this prior work uses raw text or raw text combined with either annotated data or curated resources like WordNet. WIKINLI, on the other hand, seeks a middle road, striving to find large-scale, naturally-annotated data that can improve performance on NLI tasks.

The second approach aims to enable the model to leverage knowledge resources during prediction, for instance by computing attention weights over lexical relations in WordNet (Chen et al., 2018b) or linking to reference entities in knowledge bases within the transformer block (Peters et al., 2019b). While effective, this approach requires nontrivial and domain-specific modifications of the model itself. In contrast, we develop a simple pretraining method to leverage knowledge bases that can likewise improve the performance of already strong baselines such as BERT without requiring such complex model modifications.

4.3.3 Experimental Setup

To demonstrate the effectiveness of WIKINLI, we pretrain BERT and RoBERTa on WIKINLI and other resources, and then finetune them on several NLI and LE tasks. We assume that if a pretraining resource is better aligned with downstream tasks, it will lead to better downstream performance of the models pretrained on it.

Training. Following Devlin et al. (2019) and Liu et al. (2019), we use the concatenation of two texts as the input to BERT and RoBERTa. Specifically, for a pair of input texts x1, x2, the input would be [CLS]x1[SEP]x2[SEP]. We use the encoded representations at the position of [CLS] as the input to a two-layer classifier, and finetune the entire model.
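The pair layout described above can be sketched in a few lines. This is a minimal illustration, not the actual preprocessing: whitespace splitting stands in for the models' subword tokenizers, and the function name `build_pair_input` and the segment ids (a BERT-style convention) are assumptions added here for clarity.

```python
from typing import List, Tuple


def build_pair_input(x1: str, x2: str) -> Tuple[List[str], List[int]]:
    """Return tokens and segment ids for the layout [CLS] x1 [SEP] x2 [SEP]."""
    # Whitespace splitting is a stand-in for the model's real subword tokenizer.
    first = x1.split()
    second = x2.split()
    tokens = ["[CLS]"] + first + ["[SEP]"] + second + ["[SEP]"]
    # Segment 0 covers [CLS], x1, and the first [SEP]; segment 1 covers the rest.
    segment_ids = [0] * (len(first) + 2) + [1] * (len(second) + 1)
    return tokens, segment_ids


tokens, segment_ids = build_pair_input("sports cars", "cars")
print(tokens)
print(segment_ids)
```

The representation at the first position (the `[CLS]` token) is what the classifier described above would consume.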

We start with a pretrained BERT-large or RoBERTa-large model and further pretrain it on different pretraining resources. After that, we finetune the model on the training sets for the downstream tasks, as we will elaborate on below.

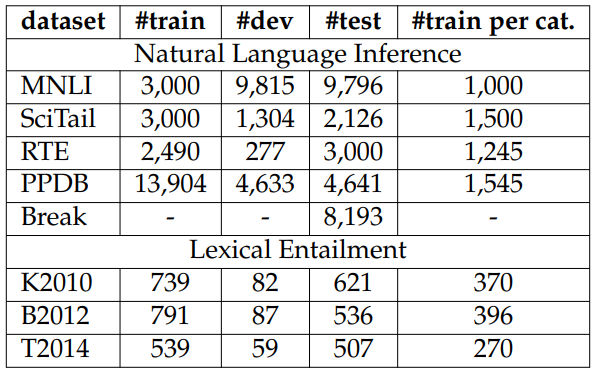

Evaluation. We use several NLI and LE datasets. Statistics for these datasets are shown in Table 4.9 and details are provided below.

MNLI. The Multi-Genre Natural Language Inference (MNLI; Williams et al., 2018) dataset is a human-annotated multi-domain NLI dataset. MNLI has three categories: entailment, contradiction, and neutral. Since the training split for this dataset has a large number of instances, models trained on it are capable of picking up information needed regardless of the quality of the pretraining resources we compare, which makes the effects of pretraining resources negligible. To better compare pretraining resources, we simulate a low-resource scenario by randomly sampling 3,000 instances[8] from the original training split as our new training set, but use the standard “matched” development and testing splits.

SciTail. SciTail is created from science questions and the corresponding answer candidates, and premises from relevant web sentences retrieved from a large corpus (Khot et al., 2018). SciTail has two categories: entailment and neutral. Similar to MNLI, we randomly sample 3,000 instances from the training split as our training set.

RTE. We evaluate models on the GLUE (Wang et al., 2018a) version of the recognizing textual entailment (RTE) dataset (Dagan et al., 2006; Bar-Haim et al., 2006; Giampiccolo et al., 2007; Bentivogli et al., 2009). RTE is a binary task, focusing on identifying if a pair of input sentences has the entailment relation.

PPDB. We use the human-annotated phrase pair dataset from Pavlick et al. (2015), which has 9 text pair relationship labels. The labels are: hyponym, hypernym, synonym, antonym, alternation, other-related, NA, independent, and none. We directly use phrases in PPDB to form input data pairs. We include this dataset for more fine-grained evaluation. Since there is no standard development or testing set for this dataset, we randomly sample 60%/20%/20% as our train/dev/test sets.
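The two sampling steps used so far, drawing 3,000 training instances for the low-resource MNLI and SciTail settings and splitting PPDB 60%/20%/20%, could look roughly as follows. The helper names and the fixed seed are assumptions for illustration; the text does not specify how the random sampling was seeded.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def subsample(data: Sequence[T], n: int, seed: int = 0) -> List[T]:
    """Draw n instances without replacement (or everything if fewer exist)."""
    rng = random.Random(seed)  # fixed seed is an assumption, for repeatability
    return rng.sample(list(data), min(n, len(data)))


def split_60_20_20(data: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle, then split into train/dev/test with 60%/20%/20% of the data."""
    items = list(data)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 6 // 10  # integer arithmetic avoids float rounding
    n_dev = len(items) * 2 // 10
    return items[:n_train], items[n_train:n_train + n_dev], items[n_train + n_dev:]


toy = list(range(10000))  # placeholder for a list of labeled instances
low_resource_train = subsample(toy, 3000)
train, dev, test = split_60_20_20(toy)
print(len(low_resource_train), len(train), len(dev), len(test))
```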

Break. Glockner et al. (2018) constructed a challenging NLI dataset called “Break” using external knowledge bases such as WordNet. Since sentence pairs in the dataset only differ by one or two words, similar to a pair of adversarial examples, it has broken many NLI systems.

Because Break does not have a training split, we use the aforementioned subsampled MNLI training set as a training set for this dataset. We select the best performing model on the development set of MNLI and evaluate it on Break.

Lexical Entailment. We use the lexical splits for 3 datasets from Levy et al. (2015), including K2010 (Kotlerman et al., 2009), B2012 (Baroni et al., 2012), and T2014 (Turney and Mohammad, 2015). These datasets all similarly formulate lexical entailment as a binary task, and they were constructed from diverse sources, including human annotations, WordNet, and Wikidata.

Baselines. We consider three baselines for both BERT and RoBERTa, namely the original model, the model pretrained on WordNet, and the model pretrained on Wikidata.

WordNet is a widely-used lexical knowledge base, where words or phrases are connected by several lexical relations. We consider direct hyponym-hypernym relations available from WordNet, resulting in 74,645 pairs.

Wikidata is a database that stores items and relations between these items. Unlike WordNet, Wikidata consists of items beyond word types and commonly seen phrases, offering more diverse domains similar to WIKINLI. The available conceptual relations in Wikidata are: “subclass of” and “instance of”. In this work, we consider the “subclass of” relation in Wikidata because (1) it is the most similar relation to category hierarchies from Wikipedia; (2) the relation “instance of” typically involves more detailed information, which is found less useful empirically (see the supplementary material for details). The filtered data has 2,871,194 pairs.

We create training sets from both WordNet and Wikidata following the same procedures used to create WIKINLI. All three datasets are constructed from their corresponding parent-child relationship pairs. Neutral pairs are first randomly sampled from non-ancestor-descendant relationships and top ranked pairs according to cosine similarities of ELMo embeddings are kept. We also ensure these datasets are balanced among the three classes. Code and data are available at https://github.com/ZeweiChu/WikiNLI.
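A rough sketch of the neutral-pair selection described above is given below. It is illustrative only: the toy category graph and the 2-d vectors are hypothetical stand-ins for the real graphs and for ELMo embeddings, and the sketch enumerates all candidate pairs instead of sampling them randomly, which is only practical for a graph this small.

```python
import itertools
import math
from typing import Dict, List, Set, Tuple

# Toy child -> parents graph (hypothetical categories, not taken from WIKINLI).
PARENTS: Dict[str, List[str]] = {
    "sports cars": ["cars"],
    "cars": ["vehicles"],
    "bicycles": ["vehicles"],
    "vehicles": [],
    "jazz": ["music"],
    "music": [],
}

# Placeholder 2-d vectors standing in for ELMo phrase embeddings.
EMBED: Dict[str, Tuple[float, float]] = {
    "sports cars": (1.0, 0.1),
    "cars": (0.9, 0.2),
    "bicycles": (0.8, 0.4),
    "vehicles": (0.7, 0.3),
    "jazz": (0.1, 1.0),
    "music": (0.2, 0.9),
}


def ancestors(node: str) -> Set[str]:
    """All categories reachable by following parent links upward."""
    seen: Set[str] = set()
    stack = list(PARENTS[node])
    while stack:
        cur = stack.pop()
        if cur not in seen:
            seen.add(cur)
            stack.extend(PARENTS[cur])
    return seen


def cosine(u: Tuple[float, float], v: Tuple[float, float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def neutral_pairs(top_k: int) -> List[Tuple[str, str]]:
    """Keep the top_k most similar pairs that are not ancestor/descendant."""
    candidates = [
        (a, b)
        for a, b in itertools.combinations(sorted(PARENTS), 2)
        if a not in ancestors(b) and b not in ancestors(a)
    ]
    candidates.sort(key=lambda p: cosine(EMBED[p[0]], EMBED[p[1]]), reverse=True)
    return candidates[:top_k]


print(neutral_pairs(top_k=2))
```

Ranking by similarity keeps the neutral pairs that are hardest to tell apart from true parent-child pairs, which is presumably why the most similar candidates are retained.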

4.3.4 Experimental Results

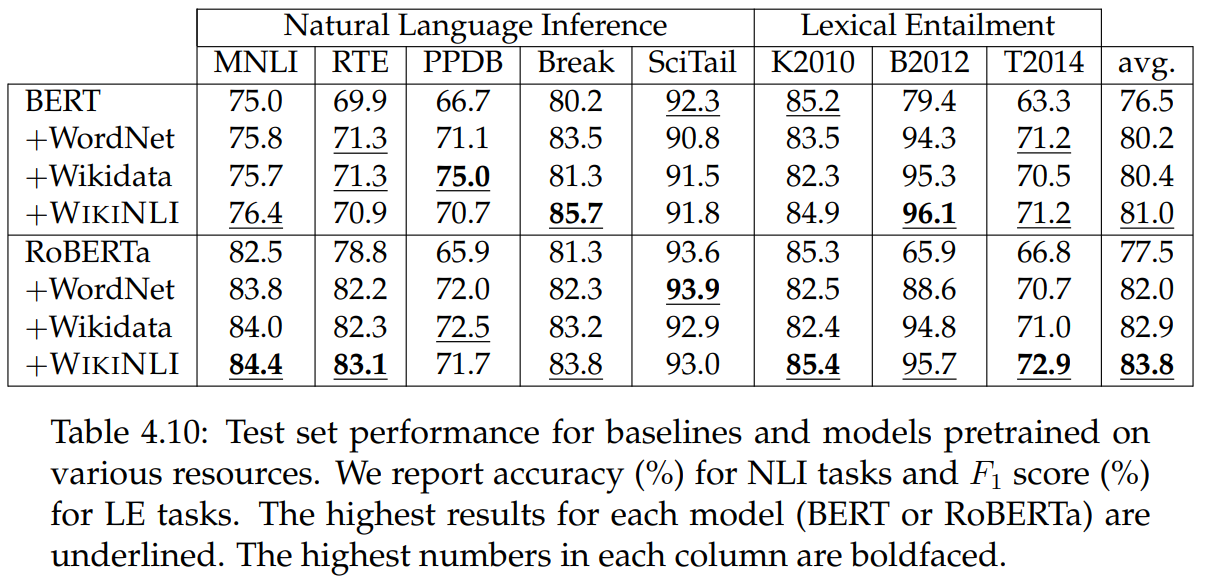

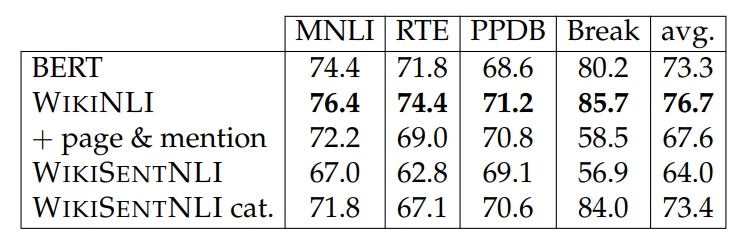

The results are summarized in Table 4.10. In general, pretraining on WIKINLI, Wikidata, or WordNet improves performance on downstream tasks, and pretraining on WIKINLI achieves the best performance on average. For Break and MNLI in particular, WIKINLI leads to much more substantial gains than the other two resources. Although BERT-large + WIKINLI is not better than the baseline BERT-large on RTE, RoBERTa + WIKINLI shows much better performance. PPDB is the only task on which Wikidata is consistently better than WIKINLI. We note that BERT-large + WIKINLI still shows a sizeable improvement over the BERT-large baseline. More importantly, the improvements to both BERT and RoBERTa brought by WIKINLI show that the benefit of the WIKINLI dataset can generalize to different models. We also note that pretraining on these resources has little benefit for SciTail.

4.3.5 Analysis

We perform several kinds of analysis, including using BERT to compare the effects of different settings. Due to the submission constraints of the GLUE leaderboard, we report dev set results (medians of 5 runs) for the tables in this section, except for Break, which only has a test set.

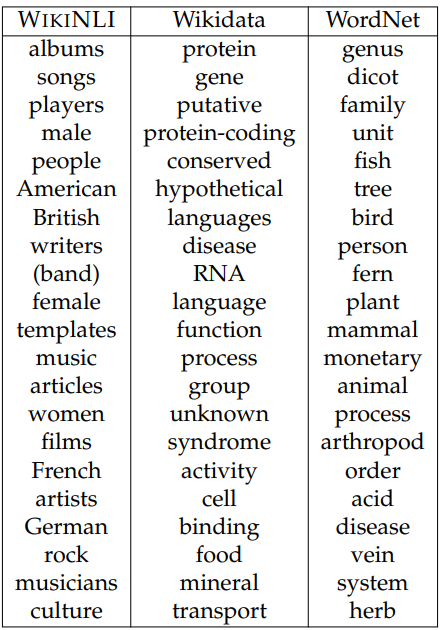

Lexical Analysis. To qualitatively investigate the differences between WIKINLI, Wikidata, and WordNet, we list the top 20 most frequent words in these three resources in Table 4.11. Interestingly, WordNet contains mostly abstract words, such as “unit”, “family”, and “person”, while Wikidata contains many domain-specific words, such as “protein” and “gene”. In contrast, WIKINLI strikes a middle ground, covering topics broader than those in Wikidata but less generic than those in WordNet.

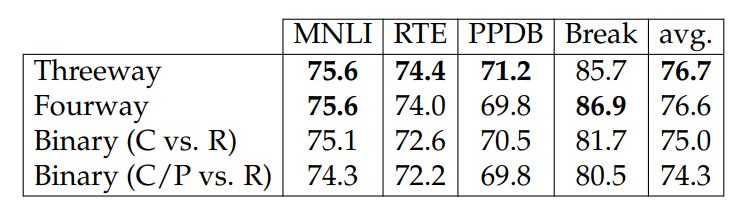

Fourway vs. Threeway vs. Binary Pretraining. We investigate the effects of the number of categories for WIKINLI by empirically comparing three settings: fourway, threeway, and binary classification. For fourway classification, we add an extra relation “sibling” in addition to child, parent, and neutral relationships. A sibling pair consists of two categories that share the same parent. We also ensure that neutral pairs are non-siblings, meaning that we separate out a category that was previously considered part of the neutral relations to provide a more fine-grained pretraining signal.

We construct two versions of WIKINLI with binary class labels. One classifies the child against the rest, including parent, neutral, and sibling (“child vs. rest”). The other classifies child or parent against neutral or sibling (“child/parent vs. rest”). The purpose of these two datasets is to find if a more coarse training signal would reduce the gains from pretraining.

These dataset variations are each balanced among their classes and contain 100,000 training instances and 5,000 development instances.
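The label schemes compared here can be summarized with a small sketch. It is a simplification under stated assumptions: the toy graph is hypothetical, only direct parent links are consulted, and siblings are assumed to fold back into the neutral class in the threeway setting, which is how we read the description above.

```python
from typing import Dict, Set

# Toy child -> set-of-parents map (hypothetical categories).
PARENTS: Dict[str, Set[str]] = {
    "cars": {"vehicles"},
    "bicycles": {"vehicles"},
    "vehicles": set(),
    "jazz": {"music"},
    "music": set(),
}


def fourway_label(x1: str, x2: str) -> str:
    """Label the ordered pair (x1, x2) using direct parent links only."""
    if x2 in PARENTS[x1]:
        return "child"    # x1 is a child of x2
    if x1 in PARENTS[x2]:
        return "parent"   # x1 is a parent of x2
    if PARENTS[x1] & PARENTS[x2]:
        return "sibling"  # the two categories share a parent
    return "neutral"


def threeway_label(x1: str, x2: str) -> str:
    # Assumption: siblings are treated as neutral in the threeway setting.
    label = fourway_label(x1, x2)
    return "neutral" if label == "sibling" else label


def child_vs_rest(x1: str, x2: str) -> int:
    """Binary variant 1: child against parent, neutral, and sibling."""
    return int(fourway_label(x1, x2) == "child")


def child_parent_vs_rest(x1: str, x2: str) -> int:
    """Binary variant 2: child or parent against neutral or sibling."""
    return int(fourway_label(x1, x2) in {"child", "parent"})


for pair in [("cars", "vehicles"), ("vehicles", "cars"), ("cars", "bicycles"), ("cars", "music")]:
    print(pair, fourway_label(*pair), child_vs_rest(*pair), child_parent_vs_rest(*pair))
```

Note that only the "child vs. rest" variant keeps the direction of the relation in the label, since a parent pair and a child pair receive different targets.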

Table 4.12 shows results of MNLI, RTE, and PPDB. Overall, fourway and threeway classifications are comparable, although they excel at different tasks. Interestingly, we find that pretraining with child/parent vs. rest is worse than pretraining with child vs. rest. We suspect this is because the child/parent vs. rest task resembles topic classification. The model does not need to determine direction of entailment, but only whether the two phrases are topically related, as neutral pairs are generally either highly unrelated or only vaguely related. The child vs. rest task still requires reasoning about entailment as the models still need to differentiate between child and parent.

In addition, we explore pruning levels in Wikipedia category graphs and incorporating sentential context. We find that relatively higher levels of knowledge from WIKINLI have more potential to enhance the performance of NLI systems, and that sentential context shows promising results on the Break dataset (see supplementary material for more details).

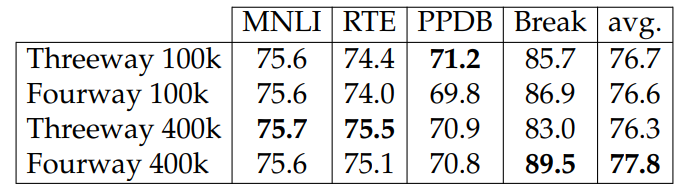

Larger Training Sets. We train on larger numbers of WIKINLI instances, approximately 400,000, for both threeway and fourway classification. We note that we only pretrain models on WIKINLI for one epoch as it leads to better performance on downstream tasks. The results are in Table 4.13. We observe that except for PPDB, adding more data generally improves performance. For Break, we observe significant improvements when using fourway WIKINLI for pretraining, whereas threeway WIKINLI seems to hurt the performance.

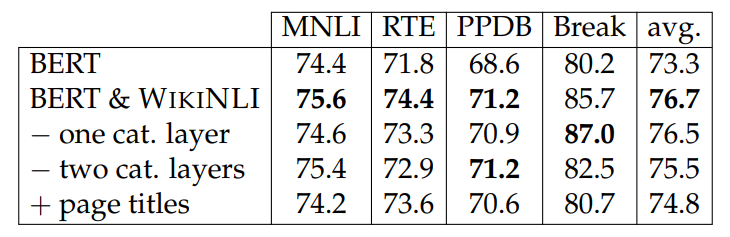

Wikipedia Pages, Mentions, and Layer Pruning. The variants of WIKINLI we considered so far have used categories as the lowest level of hierarchies. We are interested in whether adding Wikipedia page titles would bring in additional knowledge for inference tasks.

We experiment with including Wikipedia page titles that belong to Wikipedia categories to WIKINLI. We treat these page titles as the leaf nodes of the WIKINLI dataset. Their parents are the categories that the pages belong to.

Although Wikipedia page titles are an additional source of information, they are more specific than Wikipedia categories. A majority of Wikipedia page titles are person names, locations, or historical events. They are not general summaries of concepts. To explore the effect of more general concepts, we try pruning leaf nodes from the WIKINLI category hierarchies. As higher-level nodes are more general and abstract concepts compared to lower-level nodes, we hypothesize that pruning leaf nodes would make the model learn higher-level concepts. We experiment with pruning one layer and two layers of leaf nodes in WIKINLI category hierarchies.
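Leaf pruning of this kind can be sketched as repeated removal of nodes without children. The function name and the toy hierarchy are hypothetical, and the sketch assumes every node appears as a key in the parent-to-children map.

```python
from typing import Dict, Set


def prune_leaf_layers(children: Dict[str, Set[str]], layers: int) -> Dict[str, Set[str]]:
    """Remove `layers` successive layers of leaf nodes from a parent -> children map."""
    graph = {node: set(kids) for node, kids in children.items()}
    for _ in range(layers):
        leaves = {node for node, kids in graph.items() if not kids}
        # Drop the leaves themselves, then drop the edges that pointed at them.
        graph = {
            node: kids - leaves
            for node, kids in graph.items()
            if node not in leaves
        }
    return graph


toy = {
    "vehicles": {"cars", "bicycles"},
    "cars": {"sports cars"},
    "bicycles": set(),
    "sports cars": set(),
}
print(prune_leaf_layers(toy, 1))  # one layer removed
print(prune_leaf_layers(toy, 2))  # two layers removed
```

After each pass, categories that only had leaf children become leaves themselves, so pruning two layers removes strictly more specific concepts than pruning one.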

Table 4.14 compares the results of adding page titles and pruning different numbers of layers. Adding page titles mostly yields only small improvements on downstream tasks, which suggests that page titles are not a particularly useful addition to WIKINLI. Pruning layers also slightly hurts model performance. One exception is Break, which suggests that solving it requires knowledge of higher-level concepts.

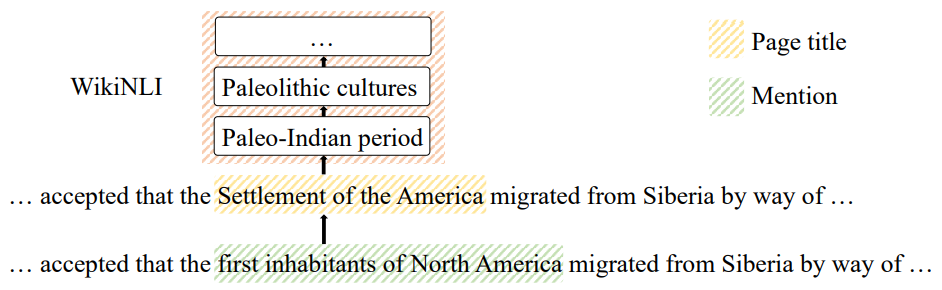

WIKISENTNLI. To investigate the effect of sentential context, we construct another dataset, which we call WIKISENTNLI, that is made up of full sentences. The general idea is to create sentence pairs that only differ by several words by using the hyper

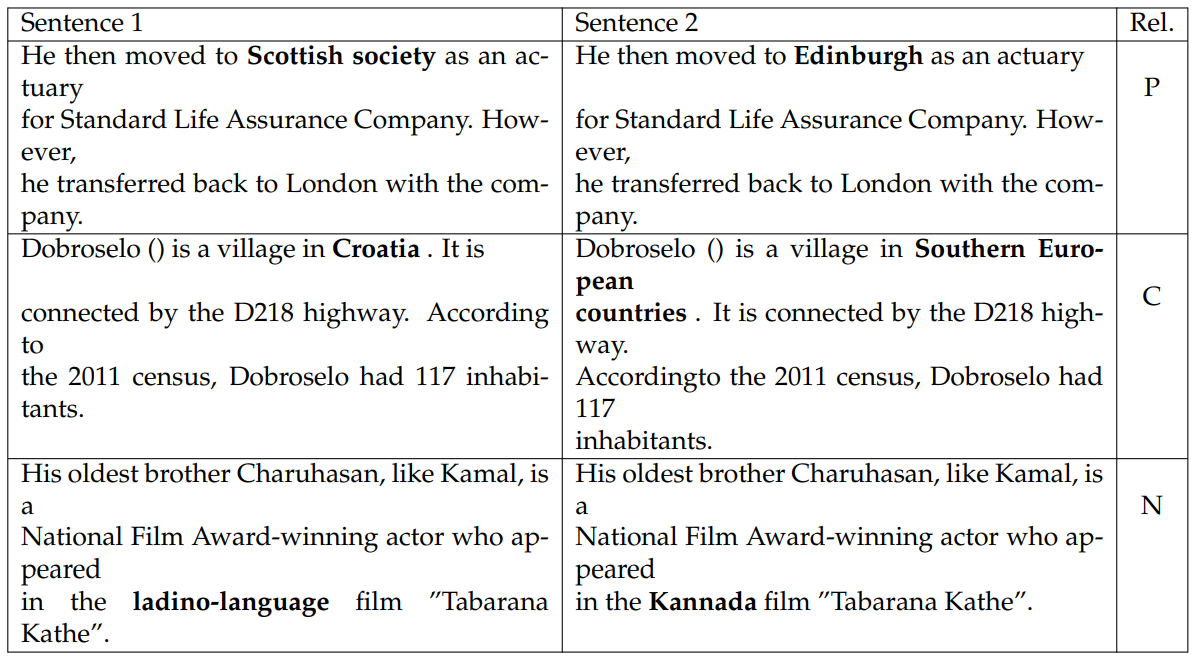

links in the Wikipedia sentences. More specifically, for a sentence with a hyperlink (if there are multiple hyperlinks, we will consider them as different instances), we form new sentences by replacing the text mention (marked by the hyperlink) with the page title as well as the categories describing that page. We consider these two sentences forming candidate child-parent relationship pairs. An example is shown in Fig. 4.5. As some page titles or category names do not fit into the context of the sentence, we score them by BERT-large, averaging over the loss spanning that page title or category name. We pick the candidate with the lowest loss. To generate neutral pairs, we randomly sample 20 categories for a particular page mention in the text and pick the candidate with the lowest loss by BERT-large. WIKISENTNLI is also balanced among three relations (child, parent and neutral), and we experiment with 100k training instances and 5k development instances. Table 4.15 are some examples from WIKISENTNLI.
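The candidate construction and selection for WIKISENTNLI can be sketched as below. This is a hedged illustration: the sentence, the category names, and the loss values in `TOY_LOSS` are invented, and the lookup table replaces the BERT-large loss averaged over the inserted span.

```python
from typing import Callable, Dict, List


def make_candidates(sentence: str, mention: str, replacements: List[str]) -> List[str]:
    """Swap the hyperlinked mention for each replacement phrase."""
    return [sentence.replace(mention, phrase, 1) for phrase in replacements]


def pick_lowest_loss(candidates: List[str], loss_fn: Callable[[str], float]) -> str:
    """Return the candidate with the lowest loss under the scoring function."""
    return min(candidates, key=loss_fn)


# Hypothetical losses standing in for BERT-large scores of the inserted span.
TOY_LOSS: Dict[str, float] = {
    "He plays the string instruments in an orchestra.": 2.1,
    "He plays the C instruments in an orchestra.": 3.8,
}

sentence = "He plays the cello in an orchestra."
candidates = make_candidates(sentence, "cello", ["string instruments", "C instruments"])
best = pick_lowest_loss(candidates, lambda s: TOY_LOSS[s])
print(best)
```

The original sentence and the selected candidate would then form one candidate child-parent pair.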

Table 4.16 shows the results. In comparing WIKINLI to WIKISENTNLI, we observe that adding extra context to WIKINLI does not help on the downstream tasks. It is worth noting that the differences between WIKINLI and WIKISENTNLI are more than sentential context. The categories we considered in WIKISENTNLI are always immediately after Wikipedia pages, limiting the exposure of higher-level categories.

To look into the importance of those categories, we construct another version of WIKISENTNLI by treating the mentions and page title layer as the same level (“WIKISENTNLI cat.”). This effectively gives models pretrained on this version of WIKISENTNLI access to higher-level categories. Practically, when creating child sentences, we randomly choose between keeping the original sentences or replacing the text mention with its linked page title. When creating parent sentences, we replace the text mention with the parent categories of the linked page. Then, we perform the same steps as described in the previous paragraph. Pretraining on WIKISENTNLI cat. gives a sizable improvement compared to pretraining on WIKISENTNLI.

Additionally, we try to add mentions to WIKINLI, which seems to impair the model performance greatly. This also validates our claim that specific knowledge tends to be noisy and less likely to be helpful for downstream tasks. More interestingly, these variants seem to affect Break the most, which is in line with our previous finding that Break favors higher-level knowledge. While most of our findings with sentential context are negative, the WIKISENTNLI cat. variant shows promising improvements over BERT in some of the downstream tasks, demonstrating that a more appropriate way of incorporating higher-level categories can be essential to benefit from WIKISENTNLI in practice.

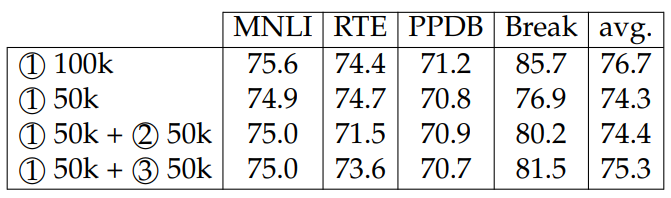

Combining Multiple Data Sources. We combine multiple data sources for pretraining. In one setting we combine 50k instances of WIKINLI with 50k instances of WordNet, while in the other setting we combine 50k instances of WIKINLI with 50k instances of Wikidata. Table 4.17 compares these two settings for pretraining. WIKINLI works the best when pretrained alone.

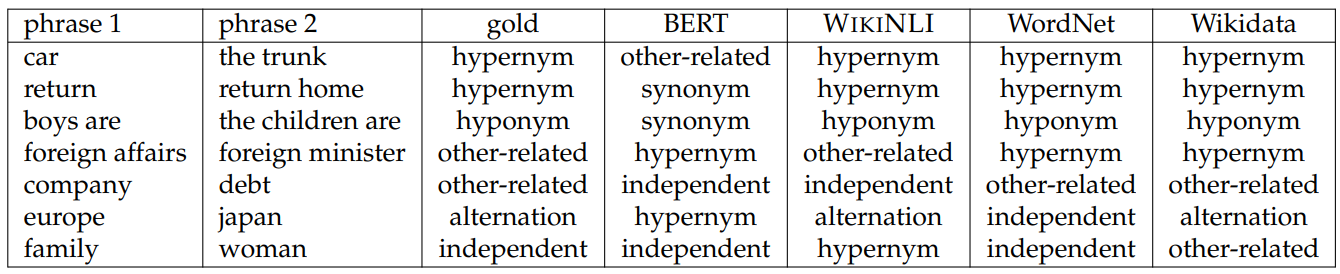

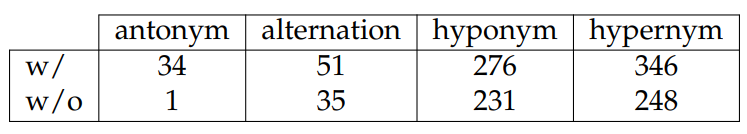

Effect of Pretraining Resources. We show several examples of predictions from PPDB in Table 4.18. In general, we observe that without pretraining, BERT tends to predict symmetric categories, such as synonym or other-related, instead of predicting entailment-related categories. For example, consider the phrase pairs “car” and “the trunk”, “return” and “return home”, and “boys are” and “the children are”. Each pair stands in either a “hypernym” or a “hyponym” relationship, but BERT tends to conflate them with symmetric relationships, such as other-related. To test this observation quantitatively, we compute the numbers of correctly predicted antonym, alternation, hyponym, and hypernym instances and show them in Table 4.19. [9] With pretraining, those numbers increase dramatically, showing the benefit of pretraining on these resources.

We also observe that the model performance can be affected by the coverage of pretraining resources. In particular, for the phrase pair “foreign affairs” and “foreign minister”, WIKINLI contains the closely related terms “foreign affair ministries” and “foreign minister” under the category “international relations”, whereas WordNet has neither of the two, and Wikidata has only “foreign minister”.

As another example, consider the phrase pair “company” and “debt”. In WIKINLI, “company” is under the “business” category and debt is under the “finance” category. They are not directly related. In WordNet, due to the polysemy of “company”, “company” and “debt” are both hyponyms of “state”, and in Wikidata, they are both a subclass of “legal concept”.

For the phrase pair “family”/“woman”, in WIKINLI, “family” is a parent category of “wives”, and in Wikidata, they are related in that “family” is a subclass of “group of humans”. In contrast, WordNet does not have such knowledge.

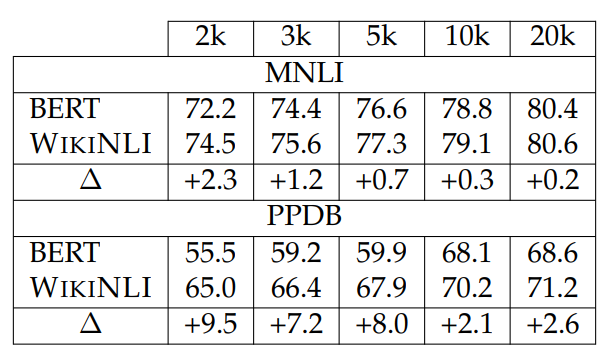

Finetuning with Different Amounts of Data. We now look into the relationship between the benefit of WIKINLI and the number of training instances from downstream tasks (Table 4.20). We compare BERT-large to BERT-large pretrained on WIKINLI when finetuning on 2k, 3k, 5k, 10k, and 20k MNLI or PPDB training instances accordingly. In general, the results show that WIKINLI has more significant improvement with less training data, and the gap between BERT-large and WIKINLI narrows as the training data size increases. We hypothesize that the performance gap does not reduce as expected between 3k and 5k or 10k and 20k due in part to the imbalanced number of instances available for the categories. For example, even when using 20k training instances, some of the PPDB categories are still quite rare.

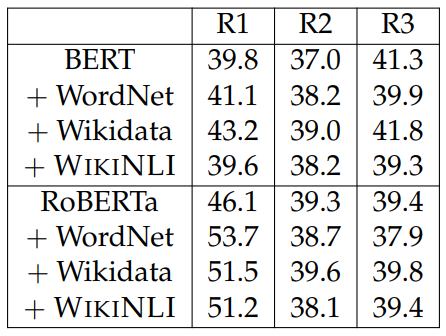

Evaluating on Adversarial NLI. Adversarial NLI (ANLI; Nie et al., 2020) is collected via an iterative human-and-model-in-the-loop procedure. ANLI has three rounds that progressively increase the difficulty. When finetuning the models for each round, we use the sampled 3k instances from the corresponding training set, perform early stopping on the original development sets, and report results on the original test sets. As shown in Table 4.21, our pretraining approach has a diminishing effect as the round number increases. This may be due to the fact that humans deem the NLI instances that require world knowledge as the hard ones, and therefore when the round number increases, the training set is likely to have more such instances, which makes pretraining on similar resources less helpful. Table 4.21 also shows that WordNet and Wikidata show stronger performance than WIKINLI. We hypothesize that this is because ANLI has a context length almost 3 times longer than MNLI on average, in which case our phrase-based resources or pretraining approach are not optimal choices. Future research may focus on finding better ways to incorporate sentential context into WIKINLI. For example, we experiment with such a variant of WIKINLI (i.e., WIKISENTNLI) in the supplementary material.

We make a similar observation when evaluating these resources on IMPPRES (Jeretic et al., 2020), which focuses on the information implied in the sentential context: our phrase-based pretraining has mixed effects (e.g., only part of the implicature results show improvements). Please refer to the supplementary materials for more details.

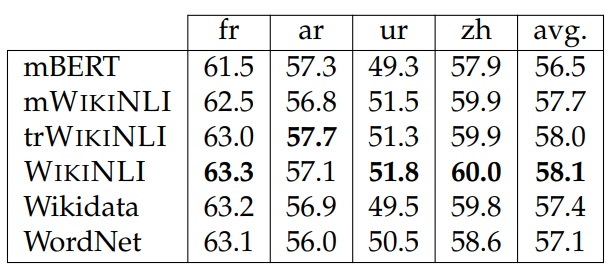

4.3.6 Multilingual WIKINLI

Wikipedia is available in many languages, which naturally motivates us to extend WIKINLI to other languages. We mostly follow the same procedures as English WIKINLI to construct a multilingual version of WIKINLI from Wikipedia in other languages, except that (1) we filter out instances that contain English words for Arabic, Urdu, and Chinese; and (2) we translate the keywords into Chinese when filtering the Chinese WIKINLI. We will refer to this version of WIKINLI as “mWIKINLI”. As a baseline, we also consider “trWIKINLI”, where we translate the English WIKINLI into other languages using Google Translate. We benchmark these resources on XNLI in four languages: French (fr), Arabic (ar), Urdu (ur), and Chinese (zh). When reporting these results, we pretrain multilingual BERT (mBERT; Devlin et al., 2019) on the corresponding resources, finetune it on 3,000 instances of the training set, perform early stopping on the development set, and test it on the test set. We always use XNLI from the corresponding language. In addition, we pretrain mBERT on English WIKINLI, Wikidata, and WordNet, then finetune and evaluate them on other languages using the same language-specific 3,000 NLI pairs mentioned earlier. We note that when pretraining on mWIKINLI or trWIKINLI, we use the versions of these datasets with the same languages as the test sets.

The accuracy differences between mWIKINLI and WIKINLI could be partly attributed to domain differences across languages. To measure the differences, we compile a list of the top 20 most frequent words in the Chinese mWIKINLI, shown in Table 4.23. The most frequent words for mWIKINLI in Chinese are mostly related to political concepts, whereas WIKINLI offers a broader range of topics.

Future research will be required to obtain a richer understanding of how training on WIKINLI benefits non-English languages more than training on the language-specific mWIKINLI resources. One possibility is the presence of emergent cross-lingual structure in mBERT (Conneau et al., 2020b). Nonetheless, we believe mWIKINLI and our training setup offer a useful framework for further research into multilingual learning with pretrained models.

This paper is available on arxiv under CC 4.0 license.

[8] The number of training instances is chosen based on the number of instances per category, as shown in the last column of Table 4.9, where we want the number to be close to 1-1.5K.

[9] We observed similar trends when pretraining on the other resources.