Author:

(1) Mingda Chen.

Table of Links

- 3.1 Improving Language Representation Learning via Sentence Ordering Prediction
- 3.2 Improving In-Context Few-Shot Learning via Self-Supervised Training
- 4.2 Learning Discourse-Aware Sentence Representations from Document Structures
- 5 DISENTANGLING LATENT REPRESENTATIONS FOR INTERPRETABILITY AND CONTROLLABILITY
- 5.1 Disentangling Semantics and Syntax in Sentence Representations
- 5.2 Controllable Paraphrase Generation with a Syntactic Exemplar

APPENDIX B - APPENDIX TO CHAPTER 6

B.1 Details about Dataset Construction for WIKITABLET

When collecting data, we consider five resources: Wikidata tables, infoboxes in Wikipedia pages, hyperlinks in the passage, named entities in the passage obtained from named entity recognition (NER), and Wikipedia article structure. For each article in Wikipedia, we use the same infobox and Wikidata table for all sections. These tables can serve as background knowledge for the article. For each section in the article, we create a second table corresponding to section-specific data, i.e., section data. The section data contains records constructed from hyperlinks and entities identified by a named entity recognizer. Section data contributes around 25% of the records in WIKITABLET.

We filter out several entity types related to numbers[1] as the specific meanings of these numbers in the section of interest are difficult to recover from the information in the tables. After filtering, we use the identified entities as the values and the entity types as the attributes. This contributes roughly 12% of the records in our final dataset.
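As a minimal sketch of how NER output could become records, assume the recognizer's output is already available as `(entity_text, entity_type)` pairs with OntoNotes-style labels such as those in footnote [1]. The helper `ner_records` is hypothetical, and no particular NER toolkit is implied.

```python
# Entity types dropped because they denote numbers (footnote [1]).
NUMERIC_TYPES = {"PERCENT", "TIME", "QUANTITY", "ORDINAL", "CARDINAL"}


def ner_records(entities):
    """Turn (entity_text, entity_type) pairs into (attribute, value) records.

    The entity type becomes the attribute and the entity string becomes the
    value; entities with a numeric type are filtered out.
    """
    return [(etype, text) for text, etype in entities if etype not in NUMERIC_TYPES]


entities = [("Paris", "GPE"), ("1998", "DATE"), ("three", "CARDINAL"), ("45%", "PERCENT")]
print(ner_records(entities))  # [('GPE', 'Paris'), ('DATE', '1998')]
```

Note that types outside the filtered list, such as DATE, are kept as records.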

We also create records from hyperlinks in the section of interest. We first expand the hyperlinks available for each section with hyperlinks available in the parent categories. To do so, we group hyperlinks across all Wikipedia articles that share those categories and then perform string matching between these hyperlinks and the text in the section. If there are exact matches, we add the matched hyperlinks to the hyperlinks of this section.
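The expansion step can be sketched as follows, assuming each article is given as a `(categories, links)` pair where every link is a `(surface_text, target_title)` pair; both helper names and the input format are hypothetical. "Exact match" is interpreted here as a whole-word occurrence of the surface text in the section, which is an assumption.

```python
import re
from collections import defaultdict


def group_links_by_category(corpus):
    """Index hyperlinks by the categories of the articles they appear in.

    corpus: iterable of (categories, links) pairs, one per article, where each
    link is a (surface_text, target_title) pair.
    """
    links_by_category = defaultdict(set)
    for categories, links in corpus:
        for category in categories:
            links_by_category[category].update(links)
    return links_by_category


def expand_hyperlinks(section_text, section_links, article_categories, links_by_category):
    """Add hyperlinks from same-category articles whose surface text occurs in the section."""
    expanded = set(section_links)
    for category in article_categories:
        for surface, target in links_by_category.get(category, ()):
            # Exact match on whole words, not inside a longer word.
            pattern = r"(?<!\w)" + re.escape(surface) + r"(?!\w)"
            if re.search(pattern, section_text):
                expanded.add((surface, target))
    return expanded


corpus = [
    (["Jazz albums"], [("Miles Davis", "Miles Davis"), ("Blue Note", "Blue Note Records")]),
    (["Rock albums"], [("Fender", "Fender (company)")]),
]
index = group_links_by_category(corpus)
print(sorted(expand_hyperlinks("Recorded for Blue Note in 1959.", {("trumpet", "Trumpet")},
                               ["Jazz albums"], index)))
```

Building the category index once and reusing it across sections avoids regrouping the whole corpus for every section.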

Details for constructing a record with attribute a and value v for a hyperlink with surface text t and hyperlinked article ℓ are as follows. To set a, we use the value of the “instance of” or “subclass of” tuple in the Wikidata table for ℓ. If ℓ does not have a Wikidata table or lacks an appropriate tuple, we consider the parent categories of ℓ as candidates for a. If there are multiple candidates, we embed the candidates and the document or section titles using GloVe embeddings and choose the candidate that maximizes the cosine similarity with the titles. For the value v, we use the document title of ℓ rather than the actual surface text t to avoid giving away too much information in the reference text. The records formed by hyperlinks contribute approximately 13% of the records in WIKITABLET.
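The following is a sketch of this record construction under several assumptions: the Wikidata table of the target is a plain dict, multiword phrases are embedded by averaging their token vectors, and a candidate is scored by its highest cosine similarity to any title. Toy 2-dimensional vectors stand in for real GloVe embeddings, and `hyperlink_record` is a hypothetical name.

```python
import math


def phrase_vector(phrase, glove):
    """Average the GloVe vectors of the in-vocabulary tokens of a phrase."""
    vectors = [glove[tok] for tok in phrase.lower().split() if tok in glove]
    if not vectors:
        return None
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def hyperlink_record(target_title, wikidata, categories, titles, glove):
    """Build an (attribute, value) record for a hyperlink to article `target_title`.

    wikidata: dict of the target's Wikidata tuples (or None);
    categories: parent categories of the target; titles: document/section titles.
    """
    # Prefer the "instance of" / "subclass of" value from the target's Wikidata table.
    for prop in ("instance of", "subclass of"):
        if wikidata and prop in wikidata:
            return (wikidata[prop], target_title)
    if not categories:
        return None
    # Otherwise choose the parent category most similar to any of the titles.
    title_vecs = [v for v in (phrase_vector(t, glove) for t in titles) if v is not None]

    def score(category):
        cat_vec = phrase_vector(category, glove)
        if cat_vec is None or not title_vecs:
            return -1.0
        return max(cosine(cat_vec, tv) for tv in title_vecs)

    # The value is the target article's title, not the surface text.
    return (max(categories, key=score), target_title)


glove = {"jazz": [1.0, 0.0], "albums": [1.0, 0.0], "record": [0.8, 0.2],
         "labels": [0.8, 0.2], "american": [0.0, 1.0], "companies": [0.1, 0.9]}
print(hyperlink_record("Blue Note Records", None,
                       ["Jazz record labels", "American companies"], ["Jazz albums"], glove))
```

Using the linked article's title as the value, rather than the surface text, mirrors the design choice described above.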

We shuffle the records from NER and the hyperlinks so that models cannot rely on the order in which these records appear in the reference text.

The records from the section data can be seen as section-specific information that makes the task more solvable. Complementary to the article data, we create a title table that indicates where the section is situated in the article; it contains the article title and the section titles of the target section. As the initial sections of Wikipedia articles do not have section titles, we use the section title “Introduction” for them.[2]
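A minimal sketch of the title table, assuming the section is identified by its path of titles from the outermost section down to the target section, with an empty path for the untitled lead section. The attribute names `"article title"` and `"section title"` and the helper `title_table` are hypothetical.

```python
def title_table(article_title, section_path):
    """Records locating the target section inside its article.

    section_path: section titles from the outermost section down to the
    target section; an empty list denotes the untitled lead section.
    """
    if not section_path:
        # Lead sections have no title on Wikipedia, so use "Introduction".
        section_path = ["Introduction"]
    records = [("article title", article_title)]
    records += [("section title", title) for title in section_path]
    return records


print(title_table("Kind of Blue", []))
print(title_table("Miles Davis", ["Life and career", "1955-1959"]))
```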

As the records in our data tables come from different resources, we perform extra filtering to remove duplicate records. In particular, we give Wikidata the highest priority because it is a human-annotated, well-structured data resource (infoboxes are human-annotated but not well-structured due to the way they are stored on Wikipedia), and we give the entities from NER the lowest priority because they are automatically constructed. That is, when we identify duplicates across different resources, we keep the records from the higher-priority resource and drop those from the lower-priority one. More specifically, duplicates between Wikidata records and infoboxes are determined by whether they share a value or an attribute; duplicates between hyperlinks and infoboxes or Wikidata are determined by shared values; and duplicates between NER and hyperlinks are determined by whether any tokens overlap between their values.
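The priority-based deduplication can be sketched as below, with records as `(source, attribute, value)` triples. The text fixes Wikidata as highest and NER as lowest priority; ranking infoboxes above hyperlinks, lowercasing before token comparison, and keeping records for source pairs that the rules do not cover are all assumptions of this sketch.

```python
# Lower number = higher priority (infobox above hyperlink is an assumption).
PRIORITY = {"wikidata": 0, "infobox": 1, "hyperlink": 2, "ner": 3}


def is_duplicate(high, low):
    """Whether a lower-priority record duplicates a kept higher-priority one."""
    (hs, ha, hv), (ls, la, lv) = high, low
    if hs == "wikidata" and ls == "infobox":
        return ha == la or hv == lv  # shared attribute or shared value
    if hs in ("wikidata", "infobox") and ls == "hyperlink":
        return hv == lv  # shared value
    if hs == "hyperlink" and ls == "ner":
        return bool(set(hv.lower().split()) & set(lv.lower().split()))  # token overlap
    return False  # pairs not covered by the rules are kept (assumption)


def deduplicate(records):
    kept = []
    # Visit records from the highest- to the lowest-priority resource.
    for rec in sorted(records, key=lambda r: PRIORITY[r[0]]):
        if not any(PRIORITY[k[0]] < PRIORITY[rec[0]] and is_duplicate(k, rec) for k in kept):
            kept.append(rec)
    return kept


records = [
    ("ner", "PERSON", "Miles Davis"),
    ("hyperlink", "musician", "Miles Davis"),
    ("infobox", "genre", "Jazz"),
    ("wikidata", "genre", "modal jazz"),
    ("hyperlink", "record label", "Columbia Records"),
    ("infobox", "label", "Columbia Records"),
]
for rec in deduplicate(records):
    print(rec)
```

Because records are visited in priority order, a lower-priority record is only ever compared against records that have already survived.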

After table collection, we apply the following criteria to filter the texts: (1) we limit the text length to between 50 and 1000 word tokens; (2) to ensure that the table contains sufficient information, we only keep data-text pairs that have more than 2 records per sentence and more than 15 records per 100 tokens from Wikidata and infoboxes; (3) to avoid texts such as lists of hyperlinks, we filter out texts in which more than 50% of the word tokens come from hyperlink texts.
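The three criteria translate into a single predicate. This sketch assumes the counts (word tokens, sentences, Wikidata and infobox records, tokens inside hyperlink text) are computed beforehand, treats the 50 and 1000 bounds as inclusive, and uses the hypothetical name `keep_pair`.

```python
def keep_pair(num_tokens, num_sentences, num_table_records, num_hyperlink_tokens):
    """Apply the three text-filtering criteria to one data-text pair.

    num_table_records counts only records from Wikidata and infoboxes.
    """
    # (1) Text length between 50 and 1000 word tokens (inclusive bounds assumed).
    if not 50 <= num_tokens <= 1000:
        return False
    # (2) More than 2 records per sentence ...
    if num_table_records <= 2 * num_sentences:
        return False
    # ... and more than 15 records per 100 tokens (integer form of records/tokens > 0.15).
    if num_table_records * 100 <= 15 * num_tokens:
        return False
    # (3) Drop list-like texts where over half of the tokens are hyperlink text.
    if num_hyperlink_tokens > 0.5 * num_tokens:
        return False
    return True


print(keep_pair(200, 10, 40, 20))  # True
print(keep_pair(200, 10, 25, 20))  # False: only 12.5 records per 100 tokens
```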

B.2 Human Evaluation for WIKITABLET

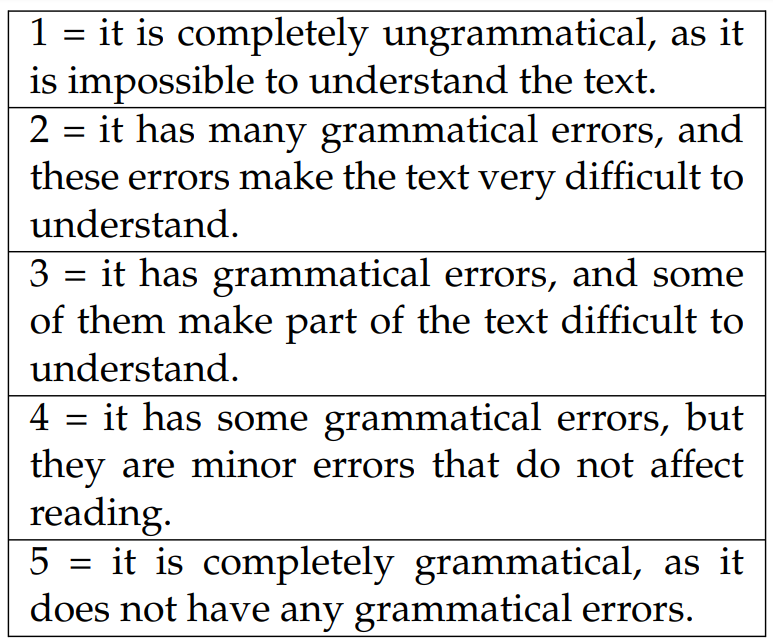

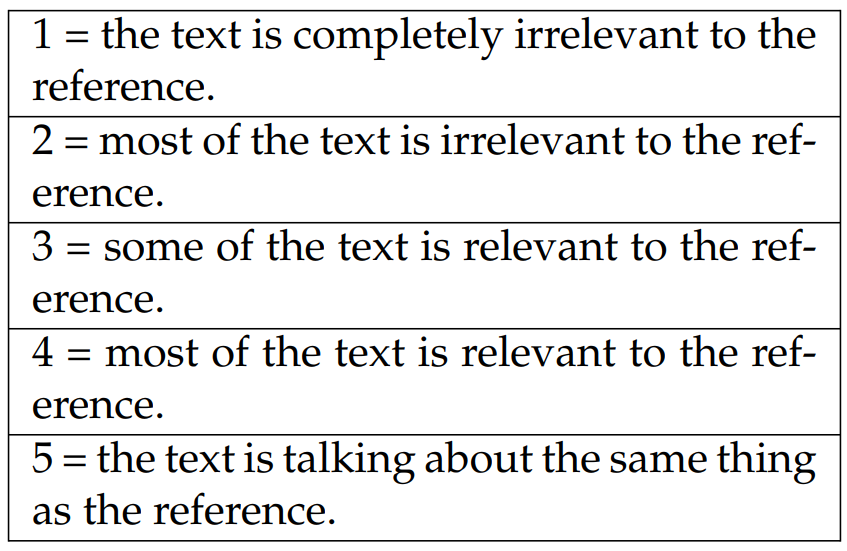

The selected topics for human evaluations are: human (excluding the introduction and biography section), film, single (song), song, album, television series. When evaluating grammaticality and coherence, only the generated text is shown to annotators. The question for grammaticality is “On a scale of 1-5, how much do you think the text is grammatical? (Note: repetitions are grammatical errors.)” (option explanations are shown in Table B.1), and the question for coherence is “On a scale of 1-5,

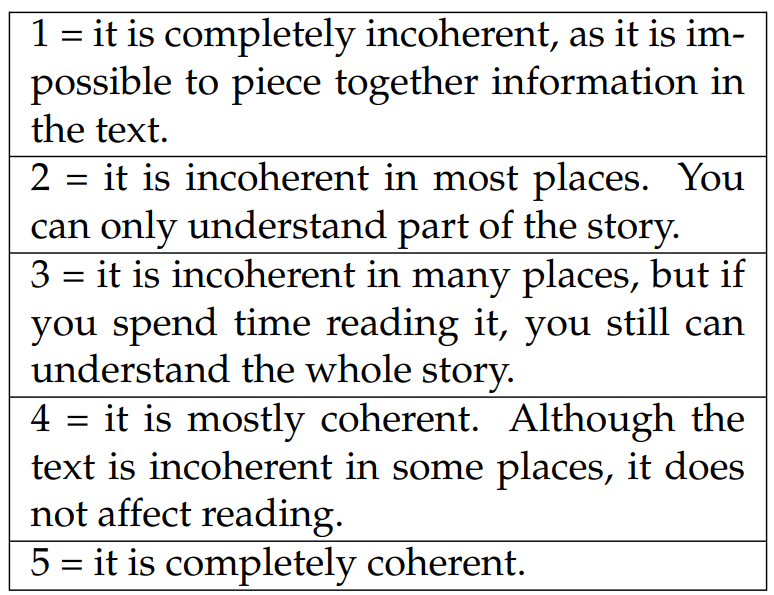

how much do you think the text is coherent? (Coherence: Does the text make sense internally, avoid self-contradiction, and use a logical ordering of information?)” (rating explanations are in Table B.2).

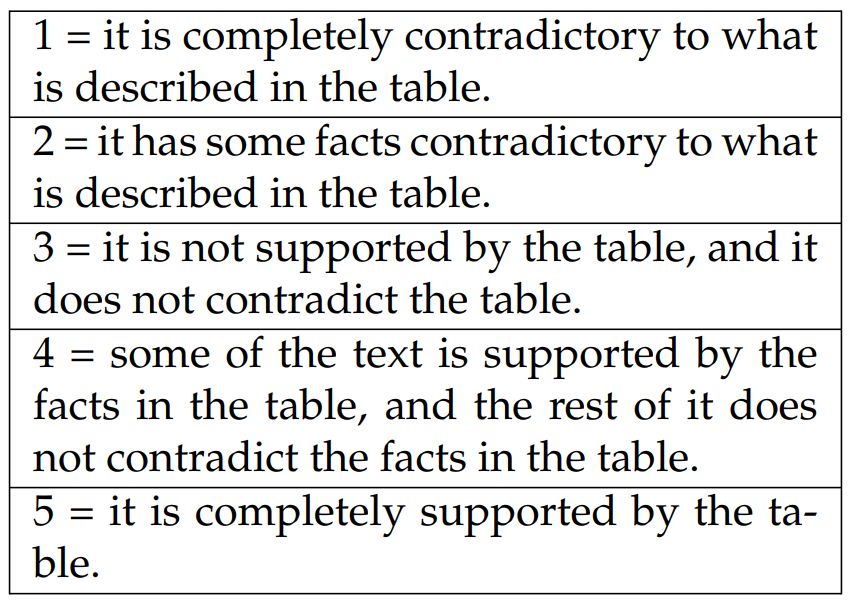

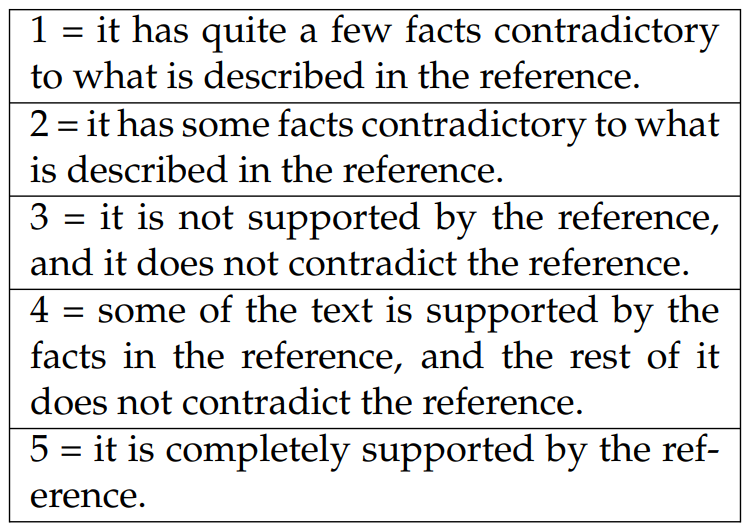

When evaluating faithfulness, we show annotators the article data and the generation. The question is “On a scale of 1-5, how much do you think the text is supported by the facts in the following table?” (rating explanations are in Table B.3).

When evaluating relevance and support with respect to the reference, annotators were shown the reference text and the generation, as well as the Wikipedia article title and section titles for ease of understanding the texts. Annotators were asked two questions: “On a scale of 1-5, how much do you think the text is relevant to the reference?” (Table B.4) and “On a scale of 1-5, how much do you think the text is supported by the facts in the reference?” (Table B.5).

B.2.1 Details about Dataset Construction for TVSTORYGEN

String Matching Algorithm. We align characters to episodes by string matching, where each character name has several valid mentions. For example, for the character name “John Doe”, valid mentions are the name itself, “John”, “J.D.”, and “JD”, reflecting the writing style on TVMegaSite. While this matching algorithm may align extra characters to particular episodes, it at least ensures that all characters actually involved in an episode are included.
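The matching can be sketched as follows. The source specifies mentions only through the “John Doe” example, so generalizing it to "full name, first name, dotted initials, bare initials" for every name, and matching whole words in some episode text, are assumptions; both function names are hypothetical.

```python
import re


def name_mentions(full_name):
    """Surface forms counted as mentions of a character, e.g. for "John Doe":
    the full name, the first name, dotted initials, and bare initials."""
    parts = full_name.split()
    mentions = {full_name, parts[0]}
    if len(parts) > 1:
        initials = [p[0].upper() for p in parts]
        mentions.add(".".join(initials) + ".")  # "J.D."
        mentions.add("".join(initials))  # "JD"
    return mentions


def characters_in_episode(characters, episode_text):
    """Characters with at least one mention in the episode text (favoring recall)."""
    found = []
    for name in characters:
        for mention in name_mentions(name):
            if re.search(r"(?<!\w)" + re.escape(mention) + r"(?!\w)", episode_text):
                found.append(name)
                break
    return found


print(characters_in_episode(["John Doe", "Jane Roe", "Bob Smith"],
                            "J.D. argues with Jane at the hospital."))
```

Matching on first names alone is what can align extra characters: two characters who share a first name would both match.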

Episode Filtering Criteria. We filter out episodes if (1) an episode contains fewer than 3 characters (to avoid stories that do not involve many character interactions); or (2) the detailed recap has fewer than 200 word tokens (ensuring that stories have enough details); or (3) the brief summary has fewer than 20 word tokens (to ensure that there is sufficient information given as the input).
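The three episode filters amount to one predicate. This sketch assumes whitespace tokenization for counting word tokens and takes the aligned characters, detailed recap, and brief summary of one episode; `keep_episode` is a hypothetical name.

```python
def keep_episode(characters, detailed_recap, brief_summary):
    """Keep an episode only if it passes all three filtering criteria."""
    return (
        len(characters) >= 3                   # (1) at least 3 characters
        and len(detailed_recap.split()) >= 200  # (2) recap of at least 200 word tokens
        and len(brief_summary.split()) >= 20    # (3) summary of at least 20 word tokens
    )


print(keep_episode(["A", "B", "C"], "word " * 250, "word " * 30))  # True
print(keep_episode(["A", "B"], "word " * 250, "word " * 30))       # False
```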

B.3 Details about Semantic Role Labeling for TVSTORYGEN

We use a pretrained model from Shi and Lin (2019) to generate SRL tags for the detailed episode recaps. To avoid ambiguity, we eliminate the SRL tags for sentences that do not contain <ARG0> or whose <ARG0> contains only pronouns. For each sentence, we also keep only the SRL tags that correspond to the first verb in the sentence, so that the SRL tags are not too specific and the burden is balanced between the text-to-plot model and the plot-to-text model. In addition, following Goldfarb-Tarrant et al. (2020), we discard SRL tags of generic verbs.

The list of verbs we discard is as follows: “is”, “was”, “were”, “are”, “be”, “’s”, “’re”, “’ll”, “can”, “could”, “must”, “may”, “have to”, “has to”, “had to”, “will”, “would”, “has”, “have”, “had”, “do”, “does”, “did”.

We also eliminate arguments that are longer than 5 tokens.
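Taken together, the SRL filtering rules can be sketched as a function over the frames of one sentence. The sketch assumes the SRL output has already been converted from BIO tags into frames of the form `{"verb": ..., "args": {role: text}}`, listed in order of the verbs' appearance. Two further assumptions: generic verbs are removed before the first remaining verb is selected, and the pronoun list is a small illustrative set rather than the authors' list. `sentence_plot` is a hypothetical name.

```python
GENERIC_VERBS = {"is", "was", "were", "are", "be", "'s", "'re", "'ll", "can", "could",
                 "must", "may", "have to", "has to", "had to", "will", "would",
                 "has", "have", "had", "do", "does", "did"}
# Illustrative pronoun list (assumption).
PRONOUNS = {"i", "you", "he", "she", "it", "we", "they", "me", "him", "her", "us",
            "them", "his", "its", "their", "this", "that"}


def sentence_plot(frames):
    """Return the SRL frame kept for one sentence, or None if it is discarded."""
    # Discard frames of generic verbs (curly apostrophes normalized to straight).
    frames = [f for f in frames
              if f["verb"].lower().replace("\u2019", "'") not in GENERIC_VERBS]
    if not frames:
        return None
    frame = frames[0]  # keep only the first remaining verb of the sentence
    arg0 = frame["args"].get("ARG0")
    # Require an ARG0 that is not made up only of pronouns.
    if arg0 is None or all(tok.lower() in PRONOUNS for tok in arg0.split()):
        return None
    # Eliminate arguments longer than 5 tokens.
    args = {role: text for role, text in frame["args"].items() if len(text.split()) <= 5}
    return {"verb": frame["verb"], "args": args}


frames = [
    {"verb": "was", "args": {"ARG1": "Tom"}},
    {"verb": "confronts", "args": {"ARG0": "Tom", "ARG1": "Jerry",
                                   "ARGM-LOC": "at the old diner on Main Street"}},
]
print(sentence_plot(frames))
```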

B.4 Details about Decoding Algorithms and Hyperparameters for TVSTORYGEN

Decoding. For both story generation and summarization, we use a batch size of 200 and beam search of size 5 with n-gram blocking (Paulus et al., 2018), where the probabilities of repeated trigrams are set to 0 during beam search. We did not find that nucleus sampling (Holtzman et al., 2020) led to better generation quality (i.e., fluency and faithfulness to the summaries and the recaps) than beam search with n-gram blocking, possibly because our models generate at the sentence level.
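The trigram blocking step can be sketched for a single beam hypothesis as follows. The helper names are hypothetical, and this covers only the per-step masking, not a full beam search. Given a hypothesis prefix, any token that would complete an n-gram already present in the prefix gets probability 0.

```python
def blocked_tokens(prefix, n=3):
    """Tokens whose selection would repeat an n-gram already in `prefix`."""
    if len(prefix) < n - 1:
        return set()
    context = tuple(prefix[-(n - 1):])  # last n-1 tokens of the hypothesis
    banned = set()
    for i in range(len(prefix) - n + 1):
        if tuple(prefix[i:i + n - 1]) == context:
            banned.add(prefix[i + n - 1])
    return banned


def apply_ngram_blocking(prefix, probs, n=3):
    """Set the probability of tokens that would repeat an n-gram to 0."""
    banned = blocked_tokens(prefix, n)
    return {tok: (0.0 if tok in banned else p) for tok, p in probs.items()}


prefix = ["the", "cat", "sat", "on", "the", "cat"]
print(apply_ngram_blocking(prefix, {"sat": 0.5, "ran": 0.3, "down": 0.2}))
# {'sat': 0.0, 'ran': 0.3, 'down': 0.2}
```

Libraries such as Hugging Face Transformers expose the same idea through the `no_repeat_ngram_size` argument of `generate`; the text does not say which toolkit was used here.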

Hyperparameters. Because our plot-to-text models work at the sentence level, there are many training instances for both FD and TMS (0.5 million and 1.5 million sentences, respectively), so we train these plot-to-text models for 10 epochs without early stopping. During generation, we set the minimum number of decoding steps to 24 and the maximum number of decoding steps to 48.

As for summarization, we benchmark pretrained BART-base, BART-large (Lewis et al., 2020b), and Pegasus (Zhang et al., 2020a). As the detailed recaps are on average much longer than the default maximum sequence length of these pretrained models, we extend the maximum sequence length to 4096. When doing so, we randomly initialize new positional embeddings for BART. Since Pegasus uses sinusoidal positional embeddings, we simply change the default value of the maximum sequence length. We train the models for 15 epochs and perform early stopping on the dev set perplexities. During generation, we set the minimum number of decoding steps to 50 and 300 and the maximum number of decoding steps to 100 and 600 for FD and TMS, respectively. The minimum decoding steps roughly match the average length of the summaries in FD and TMS.
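The sketch below shows why the two models need different treatment when the maximum length is extended. It uses plain Python lists rather than any model library. Sinusoidal embeddings are a fixed function of position, so computing them for 4096 positions adds no parameters. A learned table like BART's needs new rows. The sinusoidal formula is the standard Transformer one; Pegasus's implementation may lay out the sine and cosine dimensions differently. Keeping the pretrained rows and randomly initializing only the added ones, with standard deviation 0.02, is one reading of "new positional embeddings" and is an assumption. Both function names are hypothetical.

```python
import math
import random


def sinusoidal_positions(max_len, dim):
    """Standard sinusoidal position embeddings: no parameters, any max_len works."""
    table = []
    for pos in range(max_len):
        row = []
        for i in range(dim):
            angle = pos / (10000 ** (2 * (i // 2) / dim))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def extend_learned_positions(table, new_len, std=0.02, seed=0):
    """Grow a learned position-embedding table; pretrained rows are kept and the
    added rows are drawn from N(0, std^2) (initialization scale is assumed)."""
    rng = random.Random(seed)
    dim = len(table[0])
    new_rows = [[rng.gauss(0.0, std) for _ in range(dim)]
                for _ in range(new_len - len(table))]
    return [row[:] for row in table] + new_rows


# Longer sinusoidal tables agree with shorter ones on the shared positions.
assert sinusoidal_positions(64, 8)[:16] == sinusoidal_positions(16, 8)
extended = extend_learned_positions([[0.1, 0.2], [0.3, 0.4]], 4096)
print(len(extended), extended[:2])
```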

This paper is available on arXiv under a CC 4.0 license.

[1] List of filtered entity types: PERCENT, TIME, QUANTITY, ORDINAL, CARDINAL.

[2] Among millions of section titles in Wikipedia, there are only 4672 sections, including nested sections, that are called “Introduction”. Therefore, we believe this process will not introduce much noise into the dataset.