Authors:

(1) Muneera Bano;

(2) Didar Zowghi;

(3) Vincenzo Gervasi;

(4) Rifat Shams.


V. RESULTS

A. D&I in AI Themes

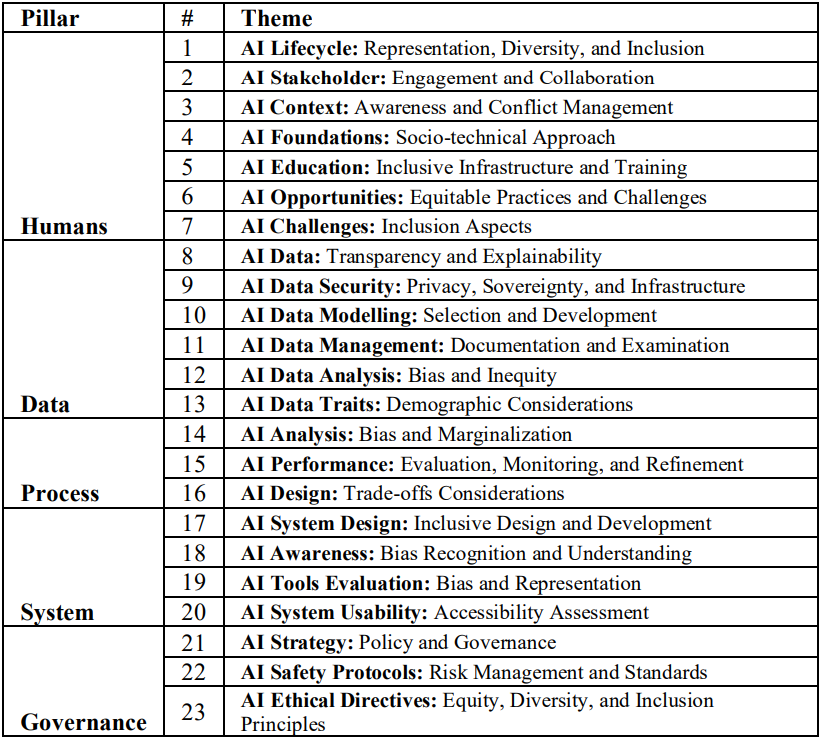

Table 1 lists the 23 distinct themes extracted from our literature review. The themes are structured across five foundational pillars, reflecting the multifaceted dimensions of the AI ecosystem.

The 'Humans' pillar addresses the integration of diverse human perspectives in AI. Within this pillar, theme #1 highlights the imperative of ensuring representation, diversity, and inclusion throughout the AI lifecycle, suggesting that AI applications should reflect the diverse human experiences they are designed to serve. Complementing this, theme #2 underscores the pivotal role of stakeholders. It promotes the tenet that AI's potential is best harnessed when all stakeholders actively engage and collaborate, so that collective wisdom and diverse viewpoints shape AI's trajectory.

Data is the bedrock upon which AI operates. Within the 'Data' pillar, theme #8 champions transparency and explainability, signifying that the logic and reasoning behind AI's decisions should be open to scrutiny and comprehension. Theme #9 relates to data security, underscoring user privacy, data sovereignty, and infrastructure. Together, these themes advocate a data ecosystem that is both transparent and secure, upholding trust and reliability.

Within the 'Process' pillar, theme #14 emphasises the importance of analyzing AI processes for inherent biases and potential marginalization. It reiterates that every step of AI's decision-making process should minimise unintended biases. Theme #15 concerns continuous refinement, which is instrumental in ensuring that AI's outputs are both effective and inclusive.

The 'System' pillar concerns the application of diversity and inclusion principles in the design and development of AI systems. Theme #17 advocates placing inclusivity at the core of AI system development, suggesting that the architecture of AI systems should inherently cater to diverse user groups. Similarly, theme #18 highlights the importance of building AI systems with a clear awareness of biases, thereby reducing the chances of biased outcomes.

The 'Governance' pillar provides overarching guidance for AI's ethical and operational conduct. Theme #21 highlights the need for a robust AI strategy, emphasizing clarity in policy formulation and governance structures so that AI initiatives align with broader organizational or societal objectives. Complementing this, theme #22 focuses on safety protocols, underscoring the necessity of risk management and adherence to established standards in support of the ethical principles within AI system development.

Our analysis also yields noteworthy insights related to conflict identification and trade-off analysis. When analyzing diversity and inclusion in AI, balancing different themes can often require resolving conflicts between them. For example, consider theme #8 (AI Data: Transparency and Explainability) and theme #9 (AI Data Security: Privacy, Sovereignty, and Infrastructure). While transparency and explainability demand open and interpretable AI systems, prioritizing data security and user privacy might require certain data operations to remain confidential.

Similarly, within the 'Humans' pillar, theme #2 (AI Stakeholder: Engagement and Collaboration) emphasizes the importance of a collective approach involving diverse stakeholders in AI development. However, as theme #3 (AI Context: Awareness and Conflict Management) suggests, such extensive collaboration can occasionally lead to conflicting viewpoints, especially when stakeholders come from diverse cultural or societal backgrounds. Prioritizing one theme over the other might be detrimental, so a trade-off analysis can help strike a balance.

Likewise, within the 'System' pillar, theme #17 (AI System Design: Inclusive Design and Development) and theme #20 (AI System Usability: Accessibility Assessment) reveal another potential area for trade-off analysis. While inclusive design ensures that AI systems are equitable and considerate of diverse users, an overemphasis on inclusivity might sometimes lead to overly complex systems. This complexity can impede usability, especially for users who are not technologically adept.

B. User Story Template

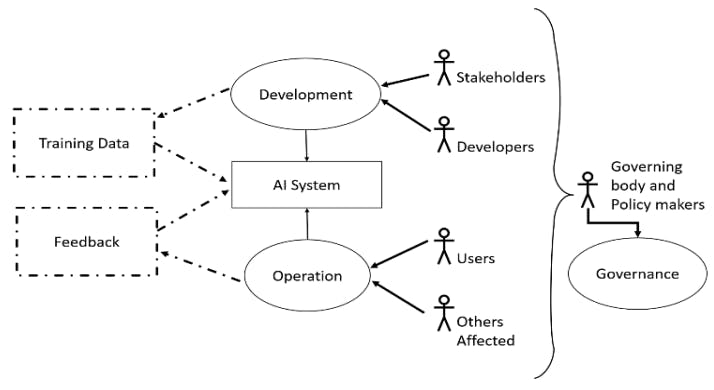

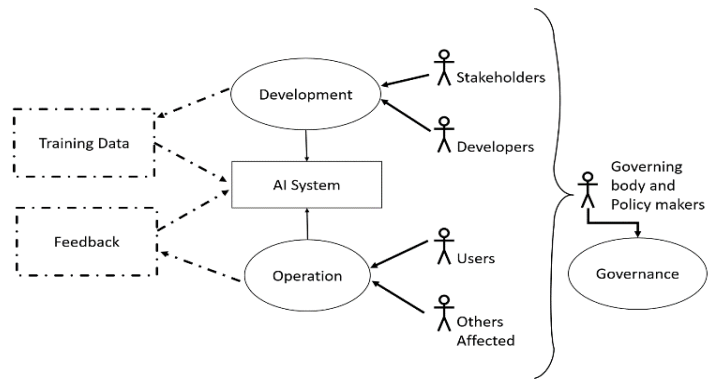

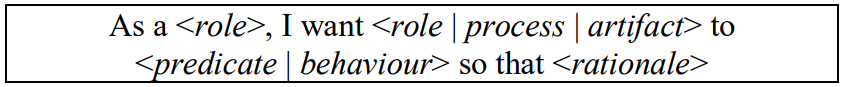

Because D&I requirements should be managed together with the other requirements for the system, rather than stated in isolation, they need to be expressed in a form amenable to effective requirements management. User stories [29, 30] are a convenient format for this purpose, and they are widely known and applied in practice. We propose a user story template specifically tailored to D&I in AI systems. To this end, we first map the five pillars discussed in Section III onto a model of roles, artifacts, and processes, as shown in Figure 3.

We consider three artifacts, namely the AI system (corresponding to the “System” pillar) and the initial training datasets and ongoing feedback, if any (both corresponding to the “Data” pillar); two processes, Development and Operation of the AI system (corresponding to the “Process” pillar); and an additional process, Governance, which is external to the development project in a strict sense (corresponding to the “Governance” pillar). We include the establishment of laws, company policies, and industrial standards, as well as education of the public at large and management of social expectations, as part of the Governance process.

Finally, the “Humans” pillar is structured according to the variety of roles participating in these processes. We single out developers and other stakeholders involved in the development process; users of the AI system and others who are affected by its operation or even its mere existence; and governing bodies and policy makers. All these roles should be interpreted expansively, with the understanding that a specific development project may well use more or fewer roles (or different names). Likewise, a specific project may use different and more specific names (possibly for multiple specific sub-components) instead of “AI system”, and the same applies to the other elements in our depiction. The template we recommend for expressing D&I-related user stories is the following, with the specific rules listed below:

This format helps to identify the key stakeholders, the desired action or outcome, and the underlying motivation behind the request. By employing this user story template, the development team can better address the needs of diverse stakeholders and align their AI systems with the overarching goals of diversity and inclusion.

To qualify as a proper D&I requirement, a user story must fulfil certain conditions. Firstly, at least one role in the story should be qualified by a protected attribute and a value for that attribute (thus identifying a particular group of persons), or alternatively, the predicate or behaviour should refer to a protected attribute. This constraint ensures that the requirement represents a specific group's perspective or applies to that group, and hence may relate to a D&I issue. For example, a qualified role could be “a non-binary user of the system” (here the attribute is “gender identity” with value “non-binary”) or “an Asian developer” (attribute “ethnicity” with value “Asian”). It must be stressed that the referenced group need not be a minority, underrepresented, or underprivileged group. The point of view of a majority group should also be listed among the requirements: inclusion is about including all points of view. An example of a behaviour that refers to a protected attribute might be “provide audible feedback”, with the rationale “so that visually impaired users are included”.

The choice of which attributes and specific values are protected is often determined on a case-by-case basis by legal frameworks about non-discrimination, company policies, social expectations, or personal values.

Secondly, the role, process, or artifact must be under the project owner's responsibility and control. This constraint ensures that only actionable guidelines are articulated as requirements. For instance, the owner of a small-scale AI project at a startup may not have the authority to dictate national government actions; thus, Theme 5 could not be articulated as a requirement for their specific AI project. Similarly, when developers have no control over the demographic makeup of anonymous feedback data, a user story stating that feedback data must be demographically representative is worthwhile but not actionable. By adhering to these constraints, we can ensure that the generated user stories are indeed D&I requirements and can effectively guide the development of inclusive AI systems.
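The two conditions above are mechanical enough to be checked automatically once a story has been decomposed into its parts. The Python sketch below is illustrative only and is not part of the proposed template: it assumes a story decomposed as “As a <role>, I want <target> to <behaviour> so that <rationale>” (the shape of the example stories in the focus group exercises), and it reduces “under the project owner's responsibility and control” to membership in a project-specific set. The names `Role`, `DIUserStory`, `is_di_requirement`, `PROTECTED`, and `CONTROLLED` are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Role:
    """A role, optionally qualified by a protected attribute and its value."""
    name: str                        # e.g. "user of the system"
    attribute: Optional[str] = None  # e.g. "gender identity"
    value: Optional[str] = None      # e.g. "non-binary"


@dataclass(frozen=True)
class DIUserStory:
    """Hypothetical decomposition: As a <role>, I want <target> to <behaviour> so that <rationale>."""
    role: Role
    target: str      # the role, process or artifact the story constrains
    behaviour: str
    rationale: str
    # Protected attributes that the behaviour itself refers to (may be empty).
    behaviour_attributes: FrozenSet[str] = frozenset()


def is_di_requirement(story: DIUserStory,
                      protected: FrozenSet[str],
                      controlled: FrozenSet[str]) -> bool:
    """Return True only if the story satisfies both conditions."""
    # Condition 1: the role carries a protected attribute *and* a value,
    # or the behaviour refers to a protected attribute.
    role_qualified = story.role.attribute in protected and story.role.value is not None
    behaviour_qualified = bool(story.behaviour_attributes & protected)
    # Condition 2 (simplified): the target is under the project owner's control.
    actionable = story.target in controlled
    return (role_qualified or behaviour_qualified) and actionable


# Hypothetical project-specific sets; in practice the protected attributes come
# from legal frameworks, company policies, etc., and the controlled targets
# from the project's scope.
PROTECTED = frozenset({"gender identity", "ethnicity", "disability"})
CONTROLLED = frozenset({"the AI system", "the development process"})

non_binary = DIUserStory(Role("user of the system", "gender identity", "non-binary"),
                         "the AI system", "respect my gender identity",
                         "I feel represented and respected")
audible = DIUserStory(Role("user"), "the AI system", "provide audible feedback",
                      "visually impaired users are included", frozenset({"disability"}))
feedback = DIUserStory(Role("developer"), "anonymous feedback data",
                       "be demographically representative",
                       "the system serves all groups", frozenset({"ethnicity"}))

print(is_di_requirement(non_binary, PROTECTED, CONTROLLED))  # True
print(is_di_requirement(audible, PROTECTED, CONTROLLED))     # True
print(is_di_requirement(feedback, PROTECTED, CONTROLLED))    # False (not actionable)
```

Passing the protected set as a parameter mirrors the point that protected attributes are decided case by case. A role that names an attribute without a value fails the first condition by design, since it does not identify a particular group.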

C. Focus Group Exercise

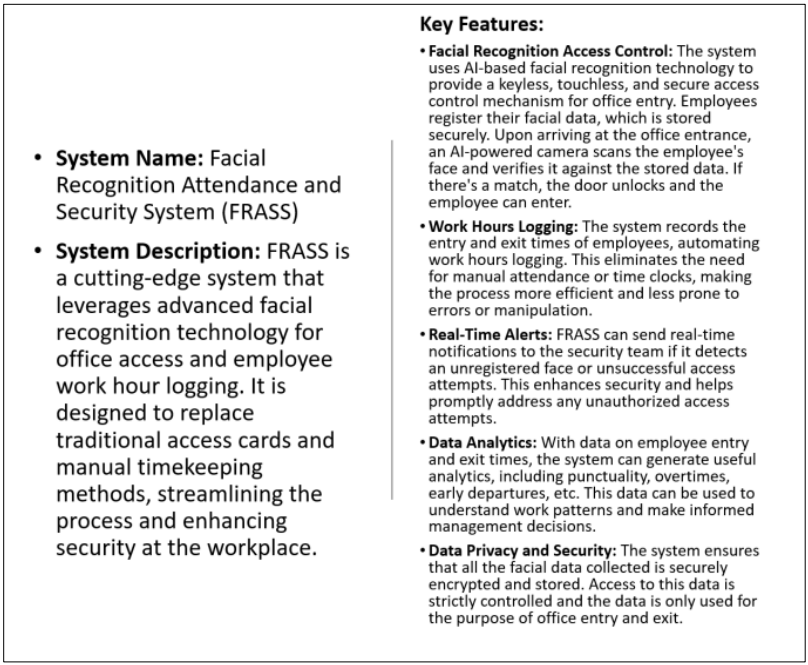

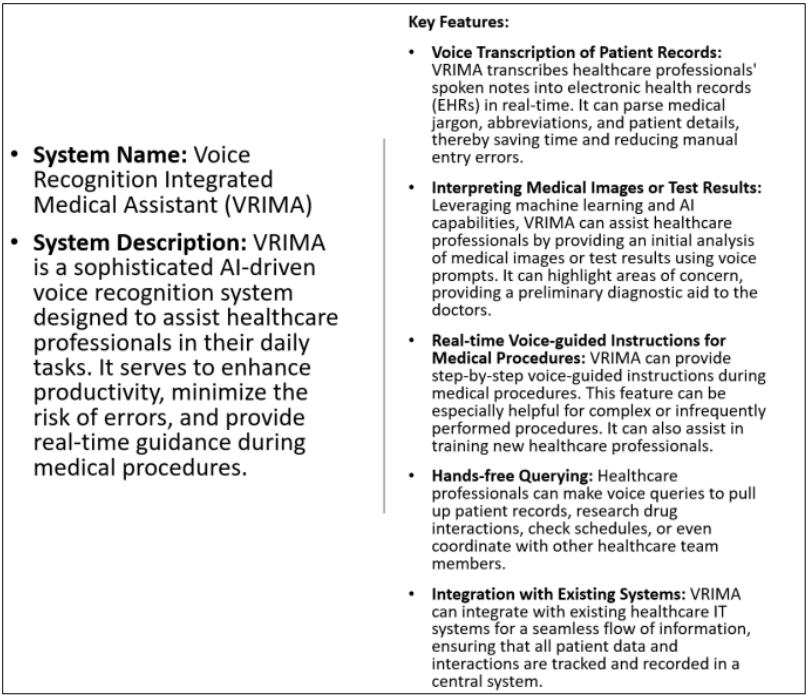

We conducted two focus group exercises with four experts who brought with them their knowledge of diversity in gender, age, race, nationality, language, and religion. All of them are active researchers in the fields of requirements engineering, ethical AI development, and diversity and inclusion. We presented them with two case studies: one on facial recognition and the other on voice recognition. Bringing in these practical scenarios helped us operationalize the D&I in AI themes, ensuring that our guidelines were both theoretically sound and applicable to real-world AI challenges.

We recognised that engaging people with relevant diverse attributes and expertise to write D&I in AI user stories is challenging and time-consuming. We therefore decided to explore the utility of Large Language Models in generating user stories from the D&I in AI themes. After each focus group exercise, we provided GPT-4 with the structured list of themes, the user story template, and the example case studies, and asked it to generate at least one user story per theme. We aimed to examine how closely the user stories from the human analysts and from GPT-4 align in terms of diversity attributes and theme coverage.

1) Case Study 1

In our first focus group, example case study 1 (Figure 4) was presented to the four participants, who were provided with the themes and the user story template and asked to write user stories.

The following is a sample of the D&I requirements specified by the focus group, each with the diversity attribute it highlights and the theme from Table 1 with which it aligns:

• As a person with a visual impairment, I want FRASS to tell me the precise location where my face should be placed so that the Facial recognition system can scan my face properly for entry. [Disability, Theme 20]

• As an employee who smokes and has to take breaks for smoking outside throughout the day, I want the Work Hours Logging to recognise my needs to exit and re-enter the workplace for a smoking break. [Lifestyle, Theme 1]

• As a delivery person who has to deliver and move large boxes of equipment inside the building, I want the Real-time alert feature of the FRASS to recognise me as an authorised person to enter the premises. [Occupation, Theme 3]

• As a Muslim woman with niqab (covering face except eyes), I want FRASS to recognize me seeing my eyes only so that I don't have to open my face every time while accessing office. [Religion/Gender, Theme 1]

• As a person who wears glasses, I want FRASS to recognise my face accurately with it when scanning so that I don't have to remove it every time I enter or exit. [Physical Appearance, Theme 13]

• As an Asian lady with a black face, I want the FRASS system to correctly identify my face despite my skin tone so that it won't cause me to miss work or have a negative impact on my professional image. [Race, Theme 14]

• As a Manager who has to use reports generated by FRASS to assess performance of my team, I want FRASS to allow me to generate reports about the details of the movement of my team during working hours. [Occupation, Theme 21]

• As a transgender person who does not want to disclose my gender attribute, I want FRASS to respect my privacy. [Gender, Theme 1]

• As a developer of FRASS I want facial recognition feature to be inclusive as much as possible so that everyone in the company will build confidence on the system. [Occupation, Theme 1]

• As a person working in fire brigade with heavy uniform, I want FRASS to recognize me properly and quickly so that I can quickly manage my work hour logging and don't become late in any emergency situation at my workplace. [Occupation, Theme 3]

• As a female with a half-burnt face, I want the FRASS system to recognise my half-non-burnt face so that I do not need to uncover the burned area on my entry and exit every time. [Physical Appearance, Theme 6]

• As the CEO I want FRASS to generate overall reports on off-site hours of my employees so that I can take informed decisions. [Occupation, Theme 2]

The following is a sample of the user stories written by GPT-4 for example case study 1, using the same themes and user story template.

• As an employee of African descent, I want FRASS's facial recognition process to accurately identify diverse facial features so that I am not misidentified or denied access. [Race, Theme 1, 12, 18]

• As a female employee wearing a hijab, I want FRASS's facial recognition process to accurately recognize me even with my headgear on so that my religious beliefs do not hinder my ability to access my workplace. [Religion & Gender, Theme 1, 12, 18]

• As an employee with visual impairment, I want FRASS's access control mechanism to accommodate alternative identification methods so that I can enter the workplace without facing any barriers. [Disability, Theme 1, 20]

• As an elderly employee, I want FRASS's onboarding process to provide clear instructions and easy-to-understand tutorials so that I can comfortably and confidently use the system. [Age, Theme 5, 20]

• As a transgender employee, I want FRASS's data analytics process to respect my gender identity and not make assumptions based on facial features so that I feel represented and respected. [Gender Identity, Theme 1, 12, 18]

• As an employee who recently underwent facial surgery, I want FRASS's facial recognition system to easily allow for re-registration of my facial data so that I don't face difficulties accessing the premises after my recovery. [Physical Appearance, Theme 10, 15]

• As a young Asian female employee, I want FRASS's feedback and improvement process to actively seek diverse perspectives, including mine so that the system continuously evolves to cater to a broad range of users. [Race & Gender & Age, Theme 1, 2, 15]

The D&I requirements captured by the focus group of human analysts highlight a broader set of diversity attributes, considering aspects such as lifestyle, occupation, and different physical appearances beyond facial features. For instance, the requirements address employees with specific needs, such as smoking breaks, and those in particular professions, such as fire brigade personnel or delivery persons. By comparison, the requirements generated by GPT-4 gravitated primarily towards more commonly discussed diversity attributes such as race, gender, religion, age, physical appearance, and disability. Although both sets touch upon religion, physical appearance, race, and gender, the human-specified requirements offer a richer variety of context and rationale. The overlap in disability, race, gender identity, and religion suggests that both the human group and GPT-4 acknowledge these as significant considerations. However, the human-generated requirements seem to demonstrate a deeper understanding of the nuanced everyday experiences and challenges faced by diverse individuals in a workplace setting.
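The attribute comparison above can be reproduced mechanically from the bracketed annotations. The following Python sketch is a hypothetical aid and does not claim to reproduce how the comparison was actually performed: it copies the tags of the sample stories for case study 1, splits combined tags such as “Religion/Gender” and “Race & Gender & Age” into single attributes, and compares the resulting sets. The names `HUMAN_TAGS`, `GPT4_TAGS`, `attributes`, and `attribute_set` are illustrative.

```python
import re

# Bracketed annotations copied from the case study 1 sample stories.
HUMAN_TAGS = [
    "Disability, Theme 20", "Lifestyle, Theme 1", "Occupation, Theme 3",
    "Religion/Gender, Theme 1", "Physical Appearance, Theme 13", "Race, Theme 14",
    "Occupation, Theme 21", "Gender, Theme 1", "Occupation, Theme 1",
    "Occupation, Theme 3", "Physical Appearance, Theme 6", "Occupation, Theme 2",
]
GPT4_TAGS = [
    "Race, Theme 1, 12, 18", "Religion & Gender, Theme 1, 12, 18",
    "Disability, Theme 1, 20", "Age, Theme 5, 20",
    "Gender Identity, Theme 1, 12, 18", "Physical Appearance, Theme 10, 15",
    "Race & Gender & Age, Theme 1, 2, 15",
]


def attributes(tag: str) -> set:
    """Return the single attributes named before 'Theme' in one tag."""
    attr_part = tag.split("Theme")[0]           # e.g. "Religion/Gender, "
    parts = re.split(r"[/&,]", attr_part)      # split combined attributes
    return {p.strip() for p in parts if p.strip()}


def attribute_set(tags) -> set:
    """Union of the attributes over all tags."""
    return set().union(*(attributes(t) for t in tags))


human = attribute_set(HUMAN_TAGS)
gpt4 = attribute_set(GPT4_TAGS)
print(sorted(human & gpt4))  # ['Disability', 'Gender', 'Physical Appearance', 'Race', 'Religion']
print(sorted(human - gpt4))  # ['Lifestyle', 'Occupation']
print(sorted(gpt4 - human))  # ['Age', 'Gender Identity']
```

The sketch keeps “Gender” and “Gender Identity” distinct exactly as they are written in the tags; merging them would be an equally defensible choice and would move “Gender Identity” into the shared set.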

2) Case Study 2

We conducted our second focus group exercise with the same participants, this time using example case study 2 (Figure 5). The following is a sample of the D&I requirements specified by the focus group:

• As a female health professional I want VRIMA to recognise my spoken words accurately and follow the instructions I give using my voice. [Gender, Profession, Theme 17]

• As a COVID-positive doctor, I want VRIMA to recognize my voice even if it is completely broken, so that I can conduct my regular tasks. [Health condition, Profession, Theme 20]

• As a doctor with a strong Persian accent I want VRIMA to recognise my ethnicity and hence understand my accent when I give instructions. [Ethnicity, Accent, Profession, Theme 13]

• As a female health professional with an Asian accent, I want VRIMA to correctly recognise my voice to generate the correct health results in the form of images. [Gender, Profession, Accent, Ethnicity, Theme 13]

• As a health professional with a stammer, I want VRIMA to recognize my instruction and follow them accurately, so that my stammer does not result in incorrect outcomes. [Health condition, Profession, Theme 20]

• As a health professional, I need to visit different wards in hospital which is noisy. I want VRIMA to suppress the background noises when I talk, so that it does not misunderstand what I am asking it to do. [Profession, Environment, Theme 18]

• As a health worker with speech disability, I want VRIMA to be trained with my impairment so that it can understand my instructions and capture it accurately. [Health condition, Profession, Theme 20]

• As a soft spoken doctor, I want VRIMA to identify my commands, so that I don't want to put extra effort while using it during my day to day activities. [Personality trait, Profession, Theme 1]

• As a person with visual impairment who cannot read text on screen, I want VRIMA to read back to me what has been captured as my instructions so that I can verify it. [Disability, Theme 20]

• As a Mom of a 2-year-old child and being a health professional, I want VRIMA to differentiate my baby's not meaningful words and my commands, so that it doesn't misunderstand my command. [Parenthood, Profession, Theme 4]

• As a doctor who treats Sri Lankan patients with long names, I want VRIMA to capture the full name of the patients with a couple of parts of the names I tell to it, so that I do not want to tell the whole name every time. [Profession, Ethnicity, Theme 13]

• As a health care manager with slow speech, I want VRIMA to recognise my voice commands accurately, govern the performance of the newly installed AI system at the clinic, and generate results in the form of reports. [Profession, Personality trait, Theme 21]

The following is a sample of the user stories written by GPT-4 for example case study 2, using the same themes and user story template.

• As a non-native English-speaking healthcare professional, I want VRIMA to accurately recognize and transcribe my accent and dialect so that my patient records are correctly documented without any misunderstandings or biases. [Language, Accent, Theme 13]

• As a healthcare professional attending to diverse patients, I want VRIMA to provide culturally appropriate voice-guided instructions during medical procedures so that patients feel respected and at ease. [Culture, Ethnicity, Theme 3]

• As a healthcare professional, I want VRIMA to recognize and address non-binary and transgender patients correctly, providing instructions or feedback that's sensitive to their gender identity so that the care I provide is inclusive and respectful. [Gender, Sex, Theme 7]

• As a healthcare professional with a hearing impairment, I want VRIMA to offer visual cues or transcriptions in addition to voice prompts so that I can effectively utilize the system during my procedures and tasks. [Disability, Theme 20]

• As a healthcare professional treating patients with diverse backgrounds, I want VRIMA to acknowledge and provide insights on diverse medical histories and practices (e.g., traditional medicines) so that I can offer a more holistic approach to patient care. [Culture, Ethnicity, Theme 1]

• As a healthcare professional, I want VRIMA to alert me about potential drug interactions or issues that may conflict with a patient's religious or cultural beliefs so that the treatment plan is respectful and in alignment with their beliefs. [Religion, Culture, Theme 1, 23]

A comparative analysis of the D&I requirements elicited from the focus group and those generated by GPT-4 reveals several distinctions. The focus group's user stories seem to be rooted in tangible experiences, emphasizing specific challenges such as voice recognition across various genders, accents, and health conditions affecting speech. Notably, their concerns also included environmental challenges, such as noisy hospital settings, and the confluence of personal and professional demands. In contrast, GPT-4 provided a more encompassing perspective, including wider diversity attributes such as cultural and religious sensitivities and a broader representation of gender and sex diversity. While the focus group offered detailed, practical insights, GPT-4 presented a more generalized but still inclusive and nuanced perspective. Together, the two sets of user stories provide a more comprehensive understanding of the multifaceted D&I needs in healthcare settings.
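Our stated aim was to compare the two sets of stories in terms of theme coverage as well as diversity attributes. A minimal Python sketch of the theme side of that comparison for case study 2 counts the theme numbers in the bracketed tags of the samples above, where a story tagged with two themes contributes both. Because these are samples, the counts describe only the stories shown here; the variable names are illustrative.

```python
from collections import Counter

# Theme numbers from the bracketed tags of the case study 2 samples,
# in the order the stories appear; a story tagged "Theme 1, 23" adds both.
FOCUS_GROUP_THEMES = [17, 20, 13, 13, 20, 18, 20, 1, 20, 4, 13, 21]
GPT4_THEMES = [13, 3, 7, 20, 1, 1, 23]

focus = Counter(FOCUS_GROUP_THEMES)
gpt4 = Counter(GPT4_THEMES)

# Most frequently tagged themes in the focus group sample.
print(focus.most_common(2))                # [(20, 4), (13, 3)]
# Themes tagged in both samples, and in only one of them
# (dict key views support set operations).
print(sorted(focus.keys() & gpt4.keys()))  # [1, 13, 20]
print(sorted(focus.keys() - gpt4.keys()))  # [4, 17, 18, 21]
print(sorted(gpt4.keys() - focus.keys()))  # [3, 7, 23]
```

In this sample, Theme 20 (AI System Usability: Accessibility Assessment) is the most frequent focus group tag, attached to the stories about a broken voice, a stammer, a speech disability, and a visual impairment.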

This paper is available on arXiv under a CC 4.0 license.