Authors:

(1) Gaurav Kolhatkar, SCTR’s Pune Institute of Computer Technology, Pune, India;

(2) Akshit Madan, SCTR’s Pune Institute of Computer Technology, Pune, India;

(3) Nidhi Kowtal, SCTR’s Pune Institute of Computer Technology, Pune, India;

(4) Satyajit Roy, SCTR’s Pune Institute of Computer Technology, Pune, India.


IV. PERFORMANCE ANALYSIS

We have used the BLEU score to analyse the performance of our machine learning models.

A. BLEU Score

BLEU (Bilingual Evaluation Understudy) [?] is a popular technique for assessing the quality of machine-generated translations against a set of reference translations created by humans. It is based on the n-gram overlap between the machine translation and the reference translations.
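To make the idea of n-gram overlap concrete, here is a minimal Python sketch. It assumes simple whitespace tokenisation, which is an illustrative choice rather than the tokeniser used in our experiments, and the helper names `ngrams` and `ngram_overlap` are hypothetical.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def ngram_overlap(candidate, references, n):
    """Return (matched, total) n-gram counts for a candidate translation.

    Each candidate n-gram is credited at most as many times as it occurs
    in any single reference (BLEU's "clipped" count).
    """
    cand_counts = ngrams(candidate, n)
    max_ref_counts = Counter()
    for ref in references:
        # Counter union keeps each n-gram's highest count across references.
        max_ref_counts |= ngrams(ref, n)
    # Counter intersection takes the element-wise minimum, i.e. the clipped count.
    clipped = cand_counts & max_ref_counts
    return sum(clipped.values()), sum(cand_counts.values())
```

For example, with the candidate `the cat sat on the mat` and the reference `the cat is on the mat`, `ngram_overlap(candidate, [reference], 1)` returns `(5, 6)` and `ngram_overlap(candidate, [reference], 2)` returns `(3, 5)`.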

To compute the BLEU score, a modified precision is first calculated for each n-gram order up to a maximum length (usually 4): the fraction of n-grams in the machine-generated translation that also appear in the reference translations, where each n-gram is counted at most as many times as it occurs in any single reference. These precision scores are then combined using a geometric mean. Finally, to discourage short and inadequate translations, the result is multiplied by a brevity penalty that compares the length of the machine-generated translation with the length of the reference translations, giving the overall BLEU score.
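The steps above correspond to the standard formulation of BLEU. For a candidate translation $C$ of length $c$, reference translations $R_1, \dots, R_K$, the set $G_n(C)$ of distinct n-grams in $C$, and an effective reference length $r$ (the reference length closest to $c$):

$$p_n = \frac{\sum_{g \in G_n(C)} \min\big(\mathrm{Count}_C(g),\ \max_k \mathrm{Count}_{R_k}(g)\big)}{\sum_{g \in G_n(C)} \mathrm{Count}_C(g)}, \qquad \mathrm{BP} = \begin{cases} 1 & \text{if } c > r \\ e^{\,1 - r/c} & \text{if } c \le r \end{cases}$$

$$\mathrm{BLEU} = \mathrm{BP} \cdot \exp\Big(\sum_{n=1}^{N} w_n \log p_n\Big), \qquad w_n = \tfrac{1}{N},\ N = 4$$

The Python sketch below implements this unsmoothed, sentence-level variant. It is an illustration under these assumptions, not the exact implementation behind our reported scores (which may have used corpus-level aggregation or smoothing); the function name `simple_bleu` is hypothetical.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_bleu(candidate, references, max_n=4):
    """Unsmoothed sentence-level BLEU with uniform weights 1/max_n.

    candidate: list of tokens; references: list of token lists.
    Returns a score in [0, 1].
    """
    if not candidate:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngrams(candidate, n)
        max_ref_counts = Counter()
        for ref in references:
            # Keep each n-gram's highest count across all references.
            max_ref_counts |= ngrams(ref, n)
        # Clipped matches: element-wise minimum of the two Counters.
        clipped = sum((cand_counts & max_ref_counts).values())
        total = sum(cand_counts.values())
        if clipped == 0:
            # A zero precision (also when the candidate is shorter than n)
            # makes the geometric mean, and hence BLEU, zero.
            return 0.0
        # Weighted log precisions sum to the log of the geometric mean.
        log_precision_sum += math.log(clipped / total) / max_n
    c = len(candidate)
    # Effective reference length: closest to c, ties broken toward the shorter one.
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_precision_sum)
```

For instance, `simple_bleu("the quick brown fox jumps over".split(), ["the quick brown fox jumps over the lazy dog".split()])` gives $e^{-0.5} \approx 0.607$: every candidate n-gram matches, so the score is determined entirely by the brevity penalty. Established toolkits such as NLTK (`nltk.translate.bleu_score`) and sacreBLEU also provide corpus-level scoring and smoothing options, which can change the numbers noticeably for short outputs.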

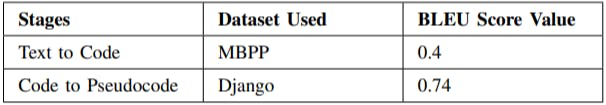

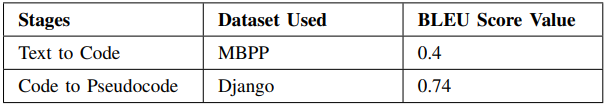

The BLEU scores obtained in our study, as shown in the table, were 0.4 for Text to Code and 0.74 for Code to Pseudocode. The first-stage score indicates understandable to good translations, which is consistent with the syntactic correctness of the generated Python code. However, the generated code may still contain logical errors, which we attribute to insufficient training data. The second-stage score points to high-quality translations, with the generated pseudocode exhibiting a high degree of logical and structural correctness. This suggests that, given sufficient training data, this methodology can deliver excellent results for the Text to Pseudocode conversion task.

This paper is available on arXiv under a CC 4.0 license.