This paper is available on Arxiv under CC 4.0 license.

Authors:

(1) Seth P. Benson, Carnegie Mellon University (e-mail: spbenson@andrew.cmu.edu);

(2) Iain J. Cruickshank, United States Military Academy (e-mail: iain.cruickshank@westpoint.edu)


IV. RESULTS

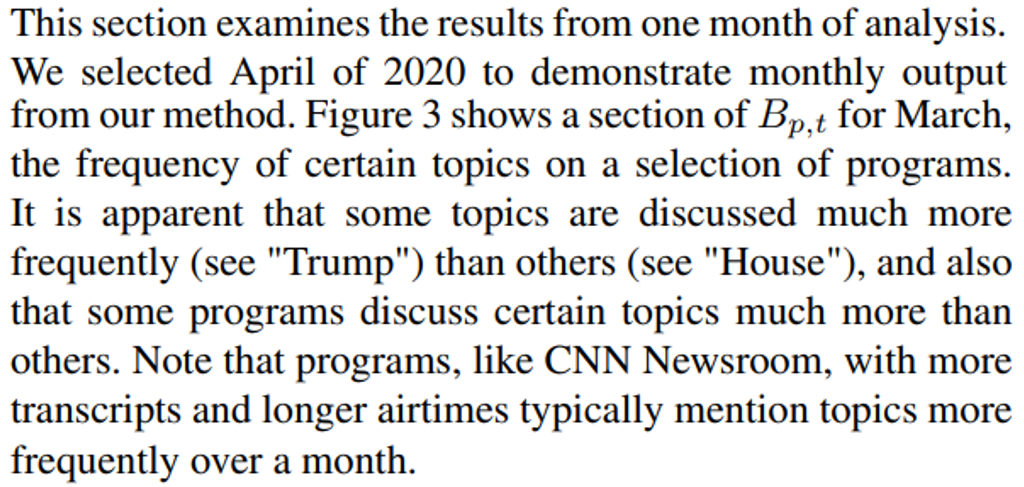

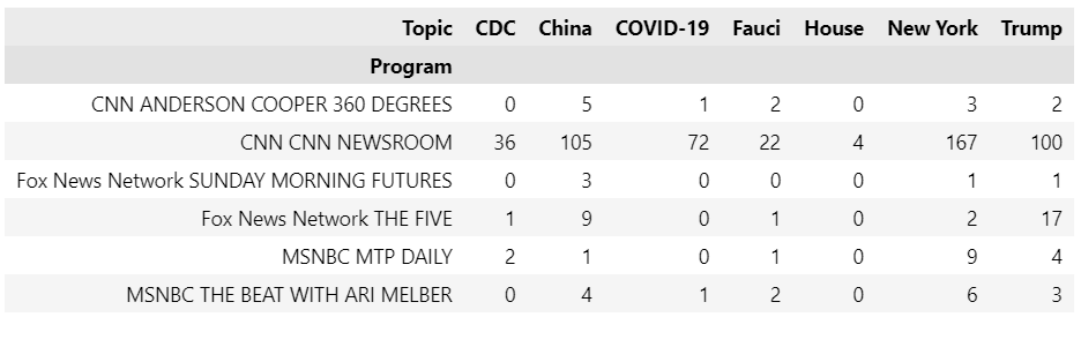

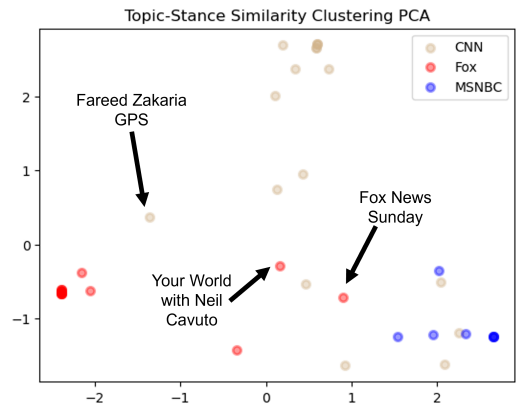

A. MONTHLY PROGRAM AGGREGATION

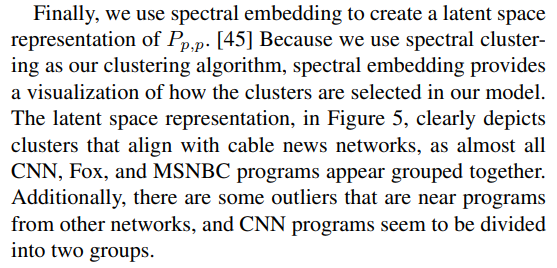

At the monthly level, we also observe that program clusters generally follow network lines. Table 1, which reports the Adjusted Rand Index (ARI) between each month's clustering assignments and program networks, shows that this holds for most months. Except for January, every month has an ARI greater than 0.35, indicating an association between a program's network and its clustering assignment in our model.
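For readers unfamiliar with the Adjusted Rand Index, a minimal pure-Python sketch of the standard chance-corrected formula follows. The function name `adjusted_rand_index` and the six program labels are hypothetical, and this is not necessarily how the scores in Table 1 were computed (scikit-learn's `adjusted_rand_score` implements the same quantity). An ARI of 1 means two labelings agree perfectly, while values near 0 indicate agreement no better than chance.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_a, labels_b):
    """Chance-corrected agreement between two labelings of the same items."""
    if len(labels_a) != len(labels_b):
        raise ValueError("label lists must have equal length")
    n = len(labels_a)
    if n < 2:
        return 1.0  # no item pairs to compare
    # Contingency counts n_ij: items labeled i in A and j in B.
    pair_counts = Counter(zip(labels_a, labels_b))
    row_sums = Counter(labels_a)
    col_sums = Counter(labels_b)

    index = sum(comb(c, 2) for c in pair_counts.values())
    sum_rows = sum(comb(c, 2) for c in row_sums.values())
    sum_cols = sum(comb(c, 2) for c in col_sums.values())
    expected = sum_rows * sum_cols / comb(n, 2)
    max_index = (sum_rows + sum_cols) / 2
    if max_index == expected:
        # Degenerate case (e.g. both labelings trivial): treat as perfect agreement.
        return 1.0
    return (index - expected) / (max_index - expected)


# Hypothetical programs: network labels vs. one month's cluster IDs.
networks = ["Fox", "Fox", "CNN", "CNN", "MSNBC", "MSNBC"]
clusters = [0, 0, 1, 1, 1, 1]
print(round(adjusted_rand_index(networks, clusters), 3))  # 0.444
```

In this toy example Fox programs form their own cluster while CNN and MSNBC share one, so the ARI of about 0.44 reflects partial rather than perfect agreement with network labels.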

B. FULL YEAR RESULTS

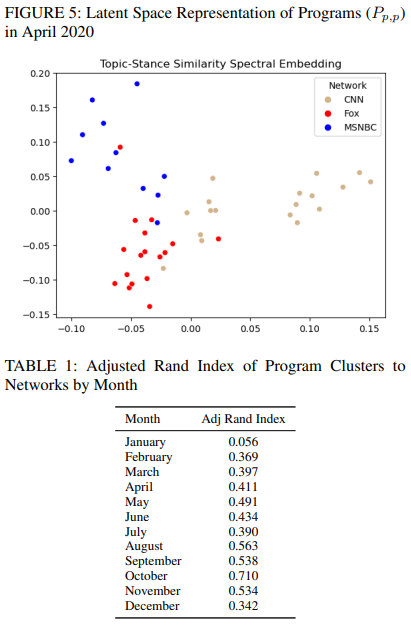

Our next results examine clusters across all of 2020. Figure 6 contains a Sankey diagram of the clustering assignments of all programs across months. In this diagram, each individual line on the vertical axis represents a cluster, while moving from right to left represents going from January to December 2020. Two things are apparent.
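A Sankey diagram of this kind is drawn from counts of programs flowing from a cluster in one month to a cluster in the next. The sketch below computes those link weights; the `assignments` layout (program name mapped to month and cluster ID), the program names, and the helper `sankey_links` are illustrative assumptions, not the authors' code.

```python
from collections import Counter


def sankey_links(assignments, months):
    """Count program flows between clusters in consecutive months.

    assignments: dict mapping program name -> dict of month -> cluster ID.
    months: months in chronological order.
    Returns a Counter keyed by ((month_from, cluster_from), (month_to, cluster_to)).
    """
    links = Counter()
    for per_month in assignments.values():
        for m_from, m_to in zip(months, months[1:]):
            # Only count a flow when the program appears in both months.
            if m_from in per_month and m_to in per_month:
                source = (m_from, per_month[m_from])
                target = (m_to, per_month[m_to])
                links[(source, target)] += 1
    return links


months = ["Jan", "Feb", "Mar"]
assignments = {
    "Program A": {"Jan": 0, "Feb": 0, "Mar": 0},
    "Program B": {"Jan": 0, "Feb": 1, "Mar": 1},
    "Program C": {"Jan": 1, "Feb": 1, "Mar": 1},
}
links = sankey_links(assignments, months)
for (src, dst), weight in links.items():
    print(src, "->", dst, weight)
```

Each key is one band in the diagram and its count is the band's width; a plotting library can consume these triples directly.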

First, the clusters are consistent over time. Although there are a few periods of major movement between months (especially in the first two months), most months see very few programs change clusters. Between March and December, rarely more than a couple of transcripts change clusters at a time. The programs that do move frequently tend to be those less commonly thought of as partisan, such as "Fox News Sunday".
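To identify which programs move most often, one could count each program's month-to-month cluster changes. This minimal sketch assumes that cluster IDs have already been aligned across months, so that the same ID denotes the same cluster in consecutive months; the helper `count_switches` and the data are hypothetical.

```python
def count_switches(assignments, months):
    """Number of month-to-month cluster changes for each program.

    Assumes cluster IDs are aligned across months (cluster 0 in one month
    corresponds to cluster 0 in the next). Months a program lacks are skipped,
    so its remaining months are compared in order.
    """
    switches = {}
    for program, per_month in assignments.items():
        seq = [per_month[m] for m in months if m in per_month]
        switches[program] = sum(a != b for a, b in zip(seq, seq[1:]))
    return switches


months = ["Jan", "Feb", "Mar", "Apr"]
assignments = {
    "Program A": {"Jan": 0, "Feb": 0, "Mar": 0, "Apr": 0},
    "Program B": {"Jan": 0, "Feb": 1, "Mar": 0, "Apr": 1},
}
switches = count_switches(assignments, months)
print(sorted(switches.items(), key=lambda kv: kv[1], reverse=True))
# [('Program B', 3), ('Program A', 0)]
```

Sorting by switch count surfaces the least stable programs first, which is where less partisan programs would be expected to appear.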

As with the single-month results, we also see that the clustering assignments over time are strongly tied to networks. With a few exceptions, almost all of the red Fox programs stay in the same cluster throughout the year. Similarly, the blue MSNBC programs are largely assigned to the same cluster over time. The tan CNN programs also mostly stay together, although several cross over to the MSNBC-dominated middle cluster at different points in the year.

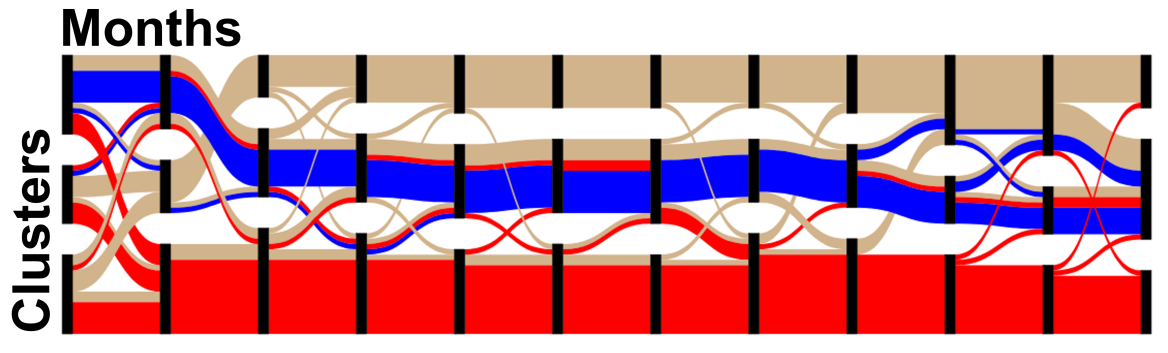

The Sankey diagram shows programs grouped by cluster and network, but we also want to determine how individual programs compare to one another in their clustering assignments. Using Principal Component Analysis (PCA), we reduce each program's clustering assignments across all months to two dimensions.
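The paper does not specify how the categorical monthly assignments are encoded before PCA. As one plausible sketch, the code below one-hot encodes each month's cluster ID and computes PCA through a singular value decomposition of the column-centered matrix with NumPy. The encoding, the helper name `pca_of_assignments`, and the toy data are assumptions. Under this encoding, programs with identical assignment histories map to the same point, so programs that share a network and rarely switch clusters would be expected to land close together.

```python
import numpy as np


def pca_of_assignments(assignment_matrix, n_components=2):
    """Project programs into 2-D from their monthly cluster assignments.

    assignment_matrix: (n_programs, n_months) array of integer cluster IDs.
    Each month's categorical assignment is one-hot encoded (an assumption),
    then PCA is computed via SVD of the column-centered matrix.
    """
    X = np.asarray(assignment_matrix)
    blocks = []
    for month in range(X.shape[1]):
        column = X[:, month]
        labels = np.unique(column)
        # One indicator column per cluster observed in this month.
        blocks.append((column[:, None] == labels[None, :]).astype(float))
    onehot = np.hstack(blocks)
    centered = onehot - onehot.mean(axis=0)
    # Rows of vt are principal axes; U * S gives each program's projected scores.
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    return u[:, :n_components] * s[:n_components]


# Hypothetical: 4 programs x 3 months of cluster IDs.
assignments = [
    [0, 0, 0],
    [0, 0, 0],
    [1, 1, 2],
    [1, 2, 2],
]
coords = pca_of_assignments(assignments)
print(coords.shape)  # (4, 2)
```

The sign of each principal component is arbitrary, so only relative positions between programs are meaningful.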

Like the Sankey diagram, the PCA plot, Figure 7, reveals that programs are largely grouped together by network. Most of the Fox programs are tightly grouped together, with a few exceptions like Fox News Sunday placed closer to CNN and MSNBC programs. Opposite the Fox programs on the plot, MSNBC programs are also closely placed. Interestingly, CNN programs are more spread out, with some programs intermingled with the group of MSNBC programs while others are placed away from both the Fox and MSNBC groups.

Nevertheless, the close placement of many CNN and MSNBC programs aligns with the conventional belief that CNN and MSNBC are similar in ideology and lean in the opposite direction from Fox.

C. EFFECTIVENESS OF SENTIMENT VERSUS STANCE IN BIAS DETERMINATION

As mentioned earlier, using sentiment similarity to measure how programs talk about topics failed to produce meaningful variation between programs. Stance similarity, on the other hand, produced a much greater distinction between programs. One way to quantify this is the standard deviation of the similarity matrix. Because some cable news programs are much closer to one another than to others in their views on key topics, we would expect the similarity matrix to contain clusters of high similarity alongside large regions of low similarity.

Conversely, if a similarity matrix has values that are small in magnitude and close together, the similarity measure is unable to distinguish between programs. Thus, a higher similarity-matrix standard deviation indicates that a measure better captures real-world differences between programs.
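The similarity-matrix standard deviation can be computed as sketched below. We assume the self-similarity diagonal is excluded and each program pair is counted once (upper triangle); the paper does not state these details. Both 4-by-4 matrices are invented: one contains two distinct groups, the other near-uniform values, mirroring the contrast between a measure that discriminates between programs and one that does not.

```python
import numpy as np


def similarity_std(similarity):
    """Standard deviation of the off-diagonal entries of a symmetric similarity matrix.

    The diagonal (each program compared with itself) is excluded, and only the
    upper triangle is used so that each program pair is counted once.
    """
    S = np.asarray(similarity, dtype=float)
    rows, cols = np.triu_indices(S.shape[0], k=1)
    return float(S[rows, cols].std())


# Hypothetical 4-program matrices: one separates two groups, one does not.
distinct = [[1.0, 0.9, 0.1, 0.2],
            [0.9, 1.0, 0.2, 0.1],
            [0.1, 0.2, 1.0, 0.8],
            [0.2, 0.1, 0.8, 1.0]]
flat = [[1.0, 0.5, 0.48, 0.52],
        [0.5, 1.0, 0.51, 0.49],
        [0.48, 0.51, 1.0, 0.5],
        [0.52, 0.49, 0.5, 1.0]]
print(similarity_std(distinct) > similarity_std(flat))  # True
```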

Sentiment similarity resulted in a standard deviation (0.05 on average) that was only a third as large as the topic similarity standard deviation (0.15 on average), indicating that it captured much less variation between programs. Meanwhile, using stance resulted in a standard deviation (0.16 on average) roughly equal to the topic similarity standard deviation.

Another way to compare the two methods is to look at the variance of programs' sentiment and stance toward specific topics. Figure 8 shows the distribution of program sentiment and stance toward "Trump" and "Democrats" in April 2020. Because these are highly partisan terms, we would expect the networks to differ in their coverage of them.

We can see that this is not the case for sentiment, as programs from all three networks are tightly grouped around the same average sentiment values. By comparison, stance does a much better job of both providing variance between programs and sorting by network. The distributions for both terms cover a much wider numerical range than the sentiment results, and there appears to be some sorting by network, with CNN and MSNBC transcripts being generally more negative than Fox toward "Trump" and more positive than Fox toward "Democrats".
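A simple numerical counterpart to these distributions is each network's mean and spread of per-program scores for a single topic. In the sketch below the stance values are made up, and summarizing with a mean and population standard deviation per network is our assumption, not the paper's procedure. A measure that sorts programs by network should yield clearly separated network means, whereas tightly grouped sentiment scores would yield nearly identical ones.

```python
from statistics import mean, pstdev


def summarize_by_network(scores):
    """Mean and population standard deviation of per-program scores for each network.

    scores: list of (network, score) pairs, e.g. each program's average
    stance toward one topic in one month.
    """
    grouped = {}
    for network, score in scores:
        grouped.setdefault(network, []).append(score)
    return {net: (mean(vals), pstdev(vals)) for net, vals in grouped.items()}


# Hypothetical per-program stance scores toward one topic.
stance = [("Fox", 0.4), ("Fox", 0.6), ("CNN", -0.3), ("CNN", -0.1),
          ("MSNBC", -0.5), ("MSNBC", -0.7)]
result = summarize_by_network(stance)
for network, (avg, spread) in result.items():
    print(f"{network}: mean={avg:.2f}, sd={spread:.2f}")
```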

While the variance and network sorting produced by stance might not completely match expectations, they separate bias-driven opinions toward entities far more meaningfully than the sentiment results do.

These results suggest that local sentiment does not properly capture differences in how topics are discussed on cable news. Sentiment's weakness may arise because different programs discussing the same topic express similar sentiment but direct it at opposing targets.

For example, the phrases "the investigation into President Trump is fraudulent" and "the investigation reveals President Trump's failures" carry similar negative sentiment but frame the same figure in opposite ways: the first defends Trump, while the second criticizes him. Consequently, our results also reveal the relative strength of stance analysis and suggest that it is the better approach for analyzing political text.
