Authors:

(1) Yinwei Dai, Princeton University (Equal contributions);

(2) Rui Pan, Princeton University (Equal contributions);

(3) Anand Iyer, Georgia Institute of Technology;

(4) Ravi Netravali, Georgia Institute of Technology.

Table of Links

2 Background and Motivation and 2.1 Model Serving Platforms

3.1 Preparing Models with Early Exits

3.2 Accuracy-Aware Threshold Tuning

3.3 Latency-Focused Ramp Adjustments

5 Evaluation and 5.1 Methodology

5.3 Comparison with Existing EE Strategies

7 Conclusion, References, Appendix

6 ADDITIONAL RELATED WORK

A number of model-serving systems have been proposed [4, 5, 7, 17, 22, 39, 44, 49] that focus on serving large volumes of inference requests within a pre-defined SLO. Existing systems favor maximizing system throughput while adhering to latency constraints (§2) through intelligent placement [22, 49], batching [4], and routing [44]. To the best of our knowledge, no existing serving proposals focus on alleviating the latency-throughput tension.

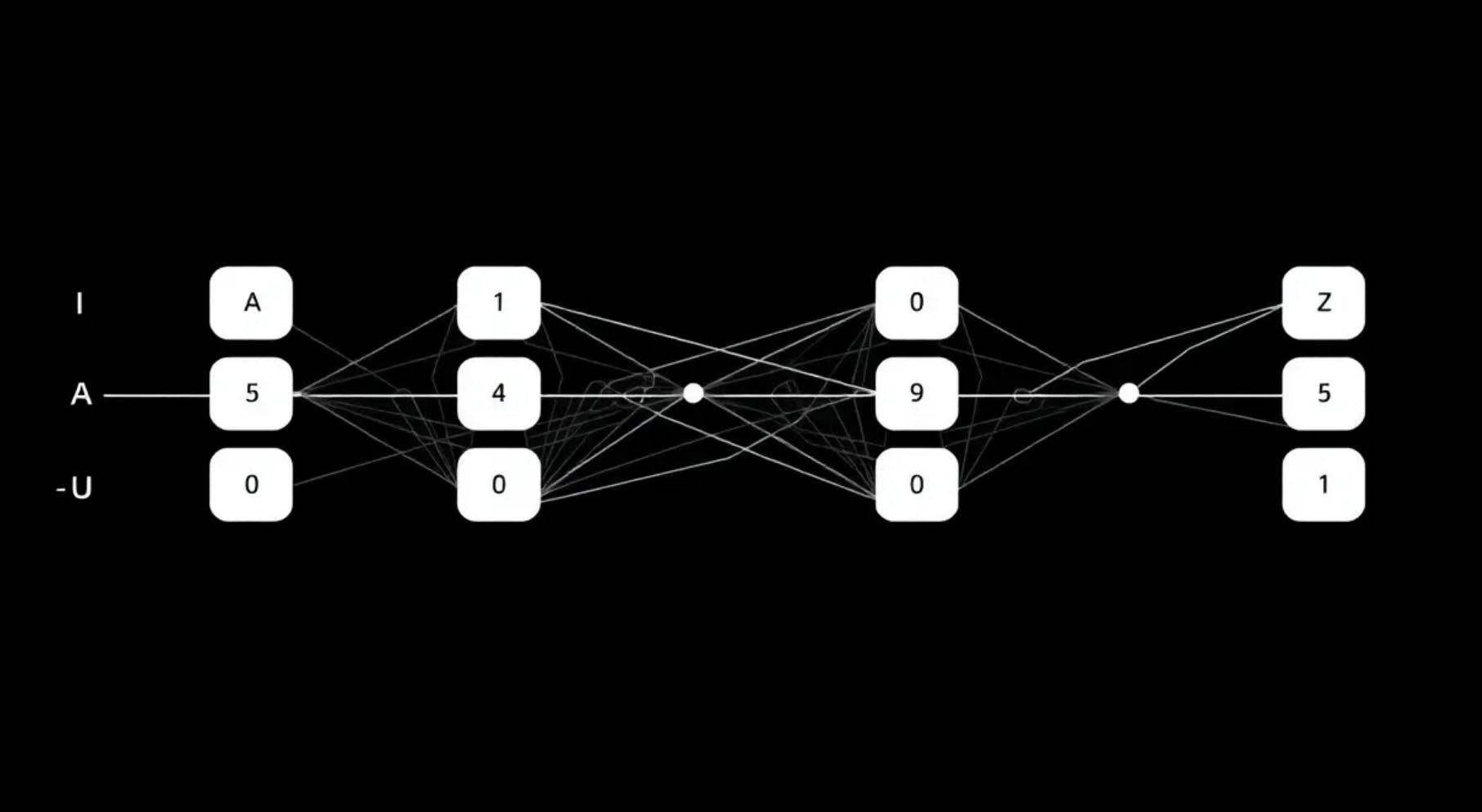

The ML community has been actively working on early-exit (EE) networks, with several proposals focusing on EE ramp architectures and exit strategies [28, 36, 46, 53, 57, 58, 64]. The ramp architecture depends on the domain, but it typically consists of one or more layers that provide the information necessary to make an exit decision by emulating the original model. Replicating the last (few) layers is the common approach [57, 58]; Apparate builds on this approach and prefers shallow ramps in its workflow (§3). Once a ramp architecture is chosen, the exit strategy can be based on the confidence of the labels [36] or the entropy of the prediction [57]. More sophisticated approaches exist; e.g., instead of treating the ramps as fully independent, [64] uses counter-based exiting. Apparate’s focus is on leveraging EEs to resolve the latency-throughput tension in serving systems with a design that generalizes to a large class of EE architectures.
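To make the exit strategies mentioned above concrete, the following is a minimal Python sketch of three exit checks: confidence-based, entropy-based, and a patience-style counter. All function names, thresholds, and the specific counter rule are illustrative assumptions; they are not taken from Apparate or from the cited works, and the counter variant is only one plausible reading of counter-based exiting.

```python
import math
from typing import List, Optional, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw ramp logits into a probability distribution."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def exit_by_confidence(probs: Sequence[float], threshold: float) -> bool:
    """Exit when the top-class probability reaches the (assumed) threshold."""
    return max(probs) >= threshold


def exit_by_entropy(probs: Sequence[float], threshold: float) -> bool:
    """Exit when the prediction entropy (in nats) is at or below the threshold."""
    entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
    return entropy <= threshold


def exit_by_counter(ramp_labels: Sequence[int], patience: int) -> Optional[int]:
    """Hypothetical counter rule: exit at the first ramp where `patience`
    consecutive ramps have predicted the same label; None means no exit."""
    streak = 0
    previous = None
    for index, label in enumerate(ramp_labels):
        streak = streak + 1 if label == previous else 1
        previous = label
        if streak >= patience:
            return index
    return None


if __name__ == "__main__":
    peaked = softmax([4.0, 1.0, 0.0])   # ramp is fairly sure of class 0
    uniform = softmax([1.0, 1.0, 1.0])  # ramp has no preference
    print(exit_by_confidence(peaked, 0.9), exit_by_confidence(uniform, 0.9))
    print(exit_by_entropy(peaked, 0.5), exit_by_entropy(uniform, 0.5))
    print(exit_by_counter([2, 1, 1, 1, 0], patience=3))
```

The first two checks treat each ramp independently, whereas the counter keeps state across ramps, which is the distinction the paragraph above draws.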

Optimizing model-serving objectives based on workload characteristics has been discussed in recent works [15, 16, 21, 44, 49, 62, 63]. InferLine [16] optimizes cost in serving pipelines of models while adhering to strict latency constraints using intelligent provisioning and management. Shepherd [63] maximizes goodput and resource utilization under highly unpredictable workloads. Despite their impressive results, these works still optimize their metric of choice at the expense of latency and do not resolve the latency-throughput tension, which is the focus of our (complementary) work.

A recent line of work has focused on creating variants of an ML model to optimize serving performance. Some of these works look at execution-graph-level optimizations such as quantization and fusing to reduce inference latency [1, 3], while others replace the model with an equivalent one that meets the provided constraints. Solutions like Mystify [23] and INFaaS [44] generate and choose model variants based on user intent and constraints (including performance). As shown in §5.2, Apparate’s wins persist even on compressed models; Apparate is thus complementary to these works in that it can operate on their outputs. Finally, optimizing the execution of dynamic neural networks that alter NN execution (e.g., EEs, mixture of experts) was proposed in [18, 61]. These are low-level optimizations (e.g., at the GPU) that can benefit Apparate and improve its performance.

This paper is available on arXiv under the CC BY-NC-ND 4.0 DEED license.