Authors:

(1) Yinwei Dai, Princeton University (Equal contributions);

(2) Rui Pan, Princeton University (Equal contributions);

(3) Anand Iyer, Georgia Institute of Technology;

(4) Ravi Netravali, Georgia Institute of Technology.

Table of Links

2 Background and Motivation and 2.1 Model Serving Platforms

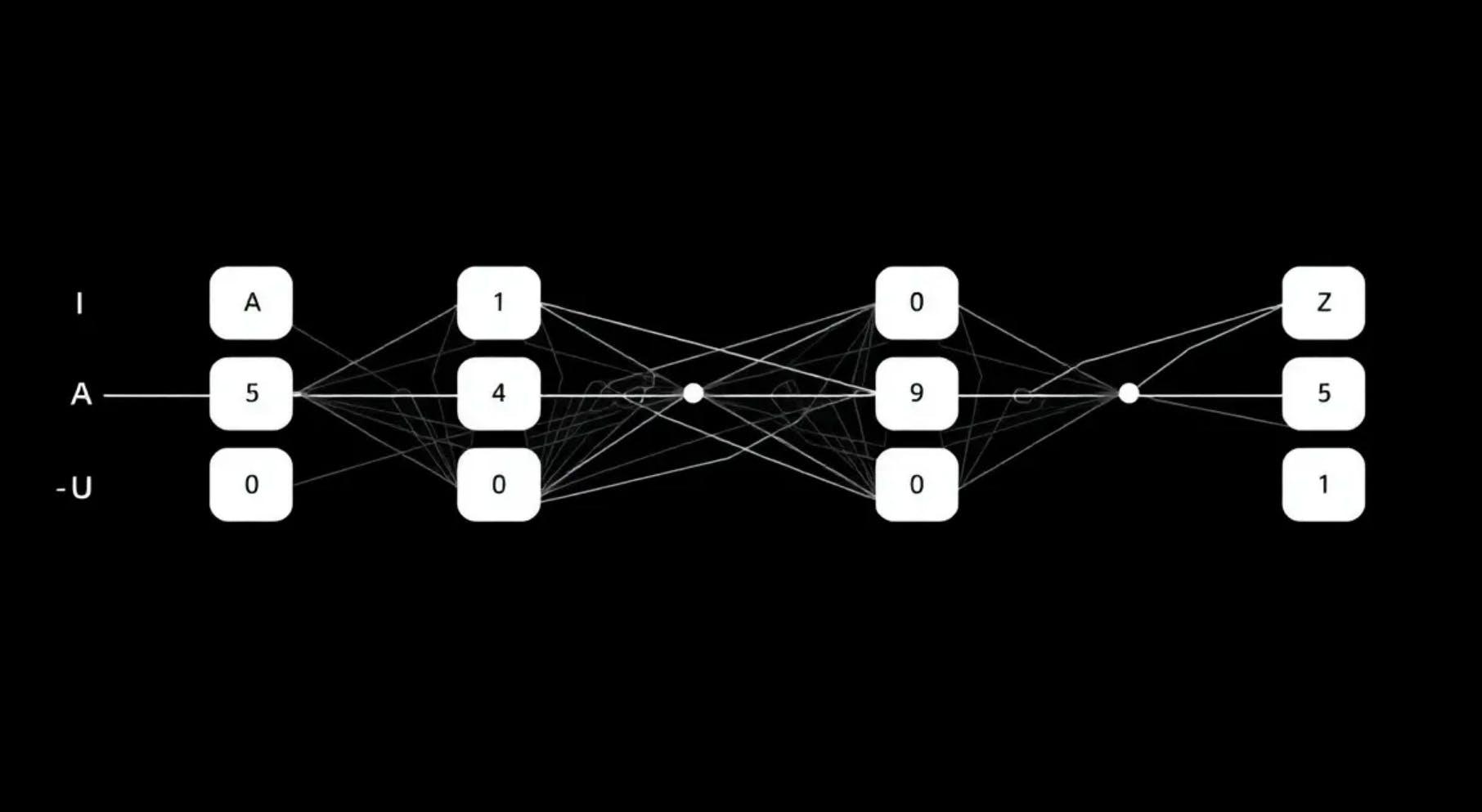

3.1 Preparing Models with Early Exits

3.2 Accuracy-Aware Threshold Tuning

3.3 Latency-Focused Ramp Adjustments

5 Evaluation and 5.1 Methodology

5.3 Comparison with Existing EE Strategies

7 Conclusion, References, Appendix

4 IMPLEMENTATION

Apparate is implemented as a layer atop TensorFlow Serving [39] and Clockwork [22] (using PyTorch [7]). Its components, described in Section 3, are written as Python modules totaling ∼7500 lines of code. Although we chose these platforms for our current implementation, Apparate is not limited to them; its techniques can be implemented in any inference platform. Importantly, Apparate relies entirely on the scheduling and queuing mechanisms of the underlying framework. Original models are ingested in the ONNX format [6] and compiled for performance. Ramp training (during bootstrapping) uses the first 10% of each dataset, following a 1:9 split for training and validation; the remaining 90% of each dataset is used for evaluation.
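The data partitioning described above can be sketched as follows. This is a minimal illustration, not Apparate's code. The function name `split_for_bootstrapping` is hypothetical. The sketch assumes two things the text does not state outright: the 1:9 training-to-validation ratio is applied within the first 10% of each dataset, and samples keep their original order (no shuffling).

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_for_bootstrapping(
    samples: Sequence[T],
    bootstrap_percent: int = 10,
    train_parts: int = 1,
    val_parts: int = 9,
) -> Tuple[List[T], List[T], List[T]]:
    """Hypothetical helper: split a dataset into ramp-training, validation,
    and evaluation portions.

    Assumption: the first `bootstrap_percent`% of samples are used for
    bootstrapping and divided train_parts:val_parts between training and
    validation; everything after that prefix is held out for evaluation.
    Integer arithmetic avoids floating-point rounding in the split sizes.
    """
    n = len(samples)
    n_boot = n * bootstrap_percent // 100          # size of the bootstrapping prefix
    bootstrap = list(samples[:n_boot])             # first 10% by default, order preserved
    evaluation = list(samples[n_boot:])            # remaining 90% for evaluation

    n_train = n_boot * train_parts // (train_parts + val_parts)
    train = bootstrap[:n_train]                    # ramp-training portion
    val = bootstrap[n_train:]                      # validation portion
    return train, val, evaluation


if __name__ == "__main__":
    train, val, evaluation = split_for_bootstrapping(list(range(1000)))
    print(len(train), len(val), len(evaluation))
```

For a 1,000-sample dataset, this assumed interpretation yields 10 training samples, 90 validation samples, and 900 evaluation samples. Splitting by a contiguous prefix mirrors the text's "first 10%" wording; a randomized split would be a different design choice.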

This paper is available on arxiv under CC BY-NC-ND 4.0 DEED license.