This paper is available on arxiv under CC 4.0 license.

Authors:

(1) Zhe Liu, State Key Laboratory of Intelligent Game, Beijing, China Institute of Software Chinese Academy of Sciences, Beijing, China; University of Chinese Academy of Sciences, Beijing, China;

(2) Chunyang Chen, Monash University, Melbourne, Australia;

(3) Junjie Wang, State Key Laboratory of Intelligent Game, Beijing, China Institute of Software Chinese Academy of Sciences, Beijing, China; University of Chinese Academy of Sciences, Beijing, China & Corresponding author;

(4) Mengzhuo Chen, State Key Laboratory of Intelligent Game, Beijing, China Institute of Software Chinese Academy of Sciences, Beijing, China; University of Chinese Academy of Sciences, Beijing, China;

(5) Boyu Wu, State Key Laboratory of Intelligent Game, Beijing, China Institute of Software Chinese Academy of Sciences, Beijing, China; University of Chinese Academy of Sciences, Beijing, China;

(6) Zhilin Tian, State Key Laboratory of Intelligent Game, Beijing, China Institute of Software Chinese Academy of Sciences, Beijing, China; University of Chinese Academy of Sciences, Beijing, China;

(7) Yuekai Huang, State Key Laboratory of Intelligent Game, Beijing, China Institute of Software Chinese Academy of Sciences, Beijing, China; University of Chinese Academy of Sciences, Beijing, China;

(8) Jun Hu, State Key Laboratory of Intelligent Game, Beijing, China Institute of Software Chinese Academy of Sciences, Beijing, China; University of Chinese Academy of Sciences, Beijing, China;

(9) Qing Wang, State Key Laboratory of Intelligent Game, Beijing, China Institute of Software Chinese Academy of Sciences, Beijing, China; University of Chinese Academy of Sciences, Beijing, China & Corresponding author.

Table of Links

Motivational Study and Background

Discussion and Threats to Validity

2 MOTIVATIONAL STUDY AND BACKGROUND

To better understand the constraints of text inputs in real-world mobile apps, we carry out a pilot study to examine their prevalence. We also categorize the constraints, to facilitate understanding and the design of our approach for generating unusual inputs violating the constraints.

2.1 Motivational Study

2.1.1 Data Collection. The dataset is collected from one of the largest Android GUI datasets Rico [19], which has a great number of Android GUI screenshots and their corresponding view hierarchy files [45, 46]. These apps belong to diversified categories such as news, entertainment, medical, etc. We analyze the view hierarchy file according to the package name and extract the GUI page belonging to the same app. A total of 7,136 apps with each having more than 3 GUI pages are extracted. For these apps, we first randomly select 136 apps with 506 GUI pages and check their text inputs through view hierarchy files. We summarize a set of keywords that indicate the apps have text inputs widgets [30], e.g., EditText, hint-text, AutoCompleteTextView, etc. We then use these keywords to automatically filter the view hierarchy files from the remaining 7,000 apps, and obtain 5,761 candidate apps with at least one potential text input widget. Four authors then manually check them to ensure that they have text inputs until a consensus is reached. In this way, we finally obtain 5,013 (70.2%) apps with at least one text input widget, and there are 3,723 (52.2%) apps having two or more text input widgets. Please note that there is no overlap with the evaluation dataset.

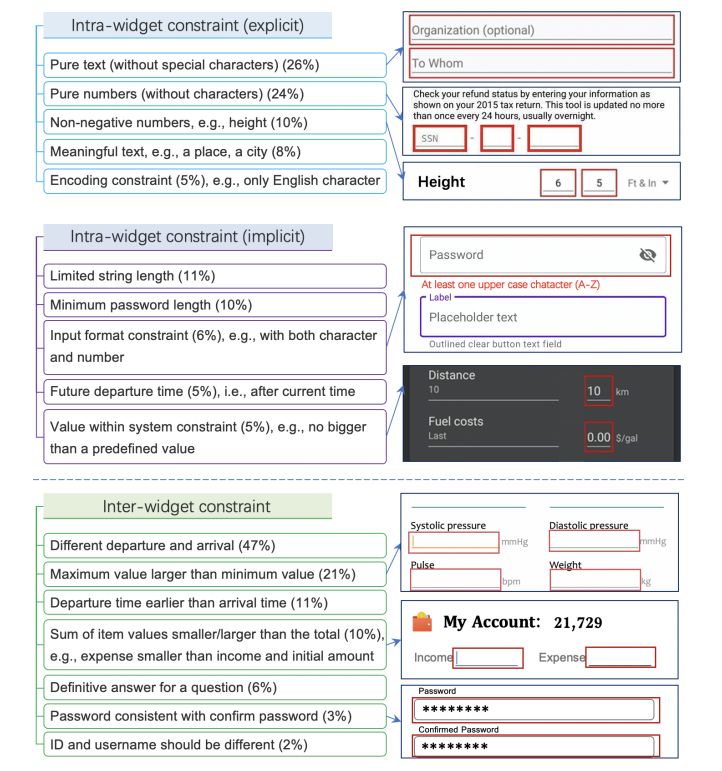

2.1.2 The Constraint Categories of Text Inputs. We randomly select 2000 apps with text inputs and conduct manual categorization to derive the constraint types of input widgets. Following the open coding protocol [59], two authors individually examine the content of the text input, including the app name, activity name, input type and input content. Then each annotator iteratively merges similar codes, and any disagreement of the categorization will be handed over to the third experienced researcher for double checking. Finally, we come out with a categorization of the constraints within (intra-widget) and among the widgets (inter-widget), with details summarized in Figure 2.

Intra-widget constraint. Intra-widget constraints depict the requirements of a single text input, e.g., a widget for a human’s height can only input the non-negative number. There are explicit and implicit sub-types. The former accounts for 63%, which manifests as the requirement to display input directly on the GUI page. And the latter account for 37%, mainly manifested as the feedback when incorrect text input is received, e.g., after inputting a simple password, the app would remind the user “at least one upper case character (A-Z) is required” as demonstrated in Figure 2.

Inter-widget constraint. Inter-widget constraints depict the requirements among multiple text input widgets on a GUI page, for example, the diastolic pressure should be less than systolic pressure as shown in Figure 2.

Summary. As demonstrated above, the text input widgets are quite common in mobile apps, e.g., 70.2% apps with at least one such widget. Furthermore, considering the diversity of inputs and contexts, it would require significant efforts to manually build a complete set of mutation rules to fully test an input widget, and the automated technique is highly demanded. This confirms the popularity of text inputs in mobile apps and the complexity of it for full testing, which motivates us to automatically generate a batch of unusual text inputs for effective testing and bug detection.

2.2 Background of LLM and In-context Learning

The target of this work is to generate the input text, and the Large Language Model (LLM) trained on ultra-large-scale corpus can understand the input prompts (sentences with prepending instructions or a few examples) and generate reasonable text. When pre-trained on billions of samples from the Internet, recent LLMs (like ChatGPT [58], GPT-3 [10] and T5 [56]) encode enough information to support many natural language processing tasks [47, 60, 68].

Tuning a large pre-trained model can be expensive and impractical for researchers, especially when limited fine-tuned data is available for certain tasks. In-context Learning (ICL) [11, 25, 51] offers a new alternative that uses Large Language Models to perform downstream tasks without requiring parameter updates. It leverages input-output demonstration in the prompt to help the model learn the semantics of the task. This new paradigm has achieved impressive results in various tasks, including code generation and assertion generation.